H-index: 73 | Google citation | DBLP | CS Rankings

|

6. Aditya Vora,

Sauradip Nag, Kai Wang, Hao Zhang,

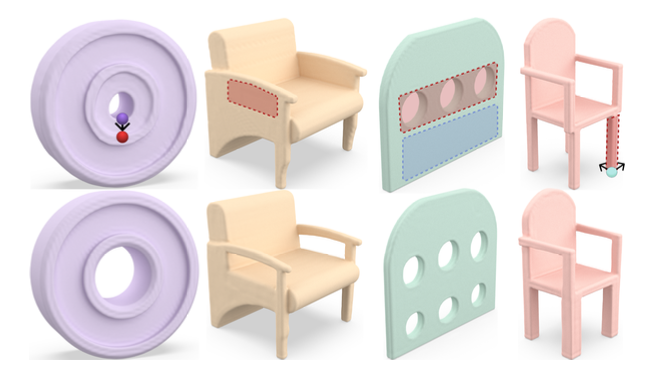

"Articulate That Object Part (ATOP): 3D Part Articulation via Text and Motion Personalization", PROVISIONALLY accepted to ACM Trans. on Graphics (TOG) , 2026.

[arXiv | bibtex]

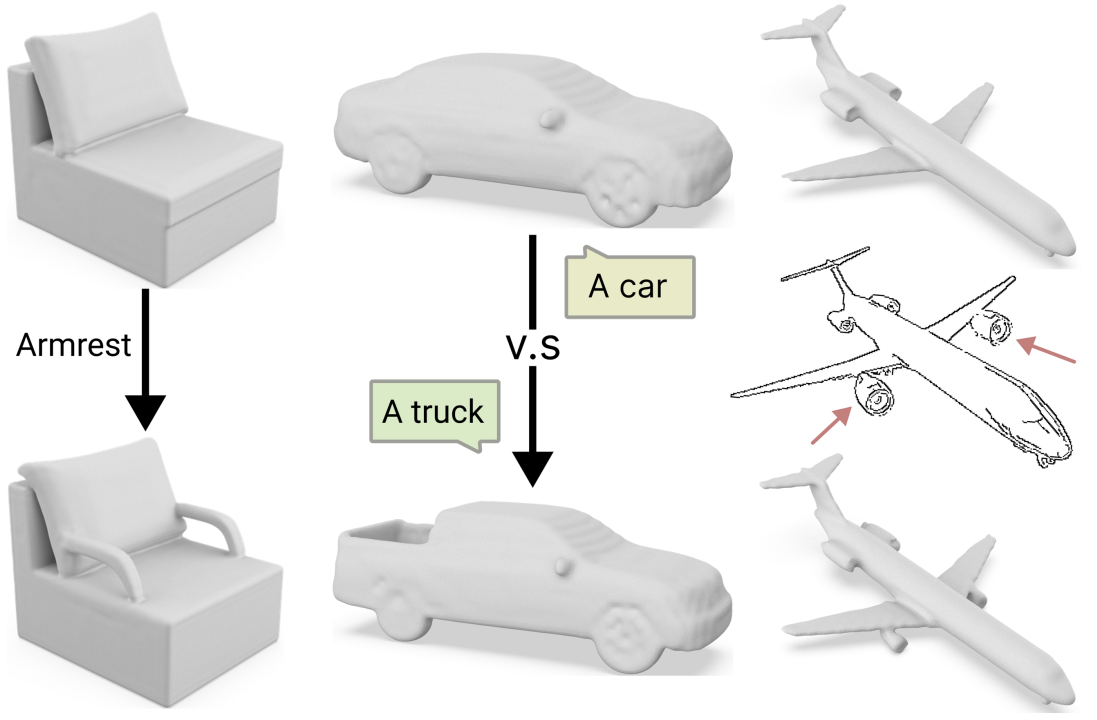

We present ATOP (Articulate That Object Part), a novel few-shot method based on motion personalization to articulate a static 3D object with respect to a part and its motion as prescribed in a text prompt. In our work, the text input allows us to tap into the power of modern-day diffusion models to generate plausible motion samples for the right object category and part. In turn, the input 3D object provides image prompting to personalize the generated video to that very object we wish to articulate. Our method starts with a few-shot finetuning for category-specific motion generation, a key first step to compensate for the lack of articulation awareness by current diffusion models. This is followed by motion video personalization that is realized by multi-view rendered images of the target 3D object. Finally, we transfer the personalized video motion to the target 3D object via differentiable rendering to optimize part motion parameters by an SDS loss.

|

5. Sai Raj Kishore Perla, Hao Zhang, and Ali Mahdavi-Amiri,

"Advances in Neural 3D Mesh Texturing: A Survey", Computer Graphics Forum, to be presented as Eurographics State-of-the-Art Report (STAR), 2026.

[arXiv | bibtex]

Texturing 3D meshes plays a vital role in defining the visual realism of digital objects and scenes. Although recent generative 3D approaches based on Neural Radiance Fields and Gaussian Splatting can produce textured assets directly, polygonal meshes remain the core representation across modeling, animation, visual effects, and gaming pipelines. Consequently, 3D mesh texturing, particularly with neural methods, continues to be an essential and active area of research. This STAR survey provides a comprehensive overview of recent advances in neural 3D mesh texturing, reviewing methods for texture synthesis, transfer, and completion ...

|

4. Mingrui Zhao, Sauradip Nag, Kai Wang, Aditya Vora, Guangda Ji, Peter Chun, Ali Mahdavi-Amiri, and Hao Zhang,

"Advances in 4D Representation: Geometry, Motion, and Interaction", major revision to Computer Graphics Forum, 2026.

[arXiv | bibtex]

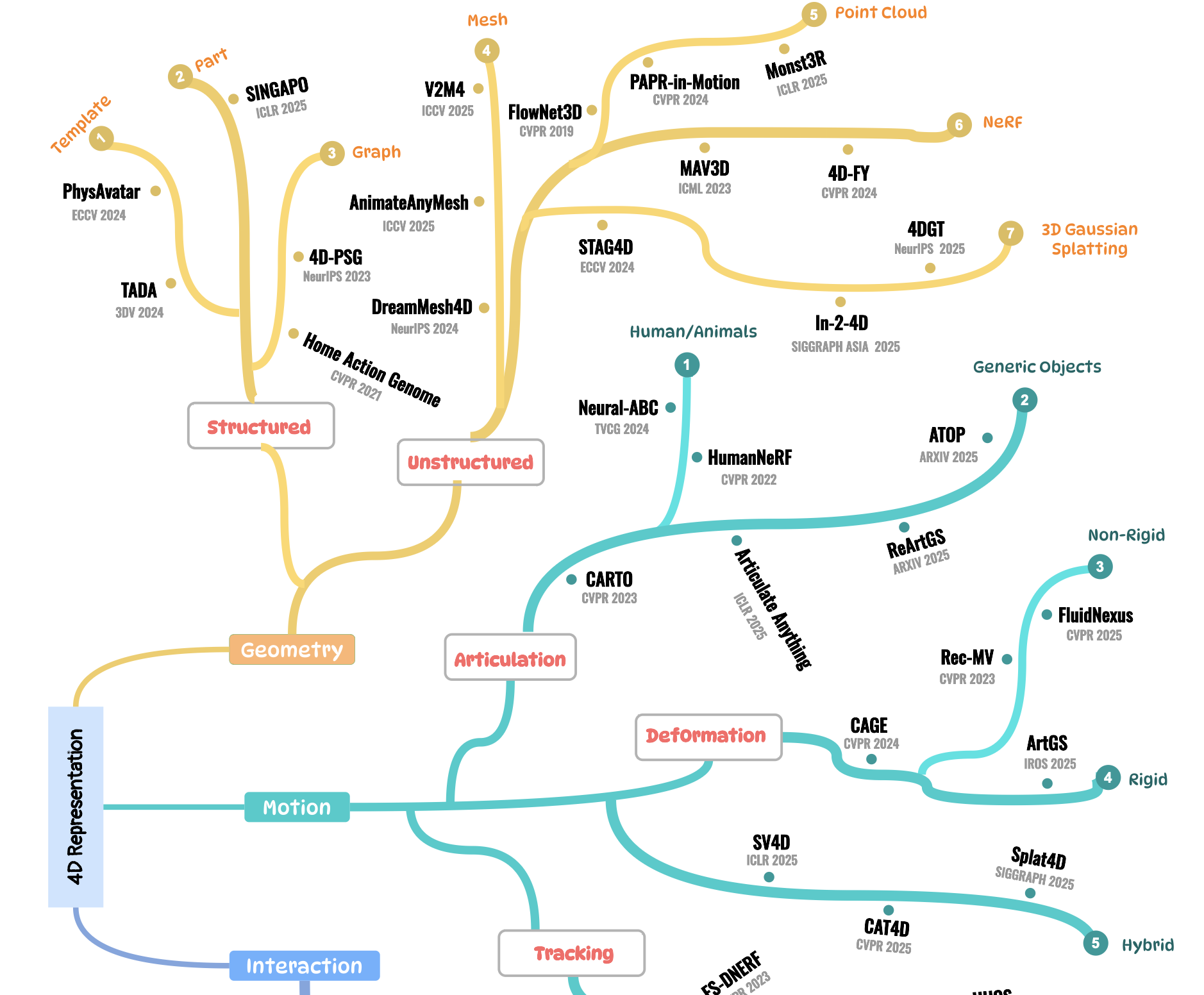

We present a survey on 4D generation and reconstruction, a fast-evolving subfield of computer graphics whose developments have been propelled by recent advances in neural fields, geometric and motion deep learning, as well as 3D GenAI. We build our coverage of the domain from a unique and distinctive perspective of 4D representations (3D geometry + motion). Specifically, instead of offering an exhaustive enumeration of many works, we take a more selective approach by focusing on representative works to highlight both the desirable properties and ensuing challenges of each representation under different computation, application, and data scenarios. The main take-away message we aim to convey to the readers is on how to select and then customize the appropriate 4D representations for their tasks ...

|

3. Maham Tanveer, Yang Zhou, Simon Niklaus, Ali Mahdavi-Amiri, Hao Zhang, Krishna Kumar Singh, and Nanxuan Zhao,

"MultiCOIN: Multi-Modal COntrollable Video INbetweening", Computer Graphics Forum (Special Issue of Eurographics), 2026.

[Project page | arXiv | bibtex]

We introduce MultiCOIN, a video inbetweening framework that allows multi-modal controls, including depth transition and layering, motion trajectories, text prompts, and target regions for movement localization, while achieving a balance between flexibility, ease of use, and precision for fine-grained video interpolation. To achieve this, we adopt the Diffusion Transformer (DiT) architecture as our video generative model, due to its proven capability to generate high-quality long videos. To ensure compatibility between DiT and our multi-modal controls, we map all motion controls into a common sparse and user-friendly point-based representation as the video/noise input. Further, we separate content controls and motion controls into two branches to encode the required features ... | |

|

2. Yizhi Wang, Mingrui Zhao (co-first author), and Hao Zhang,

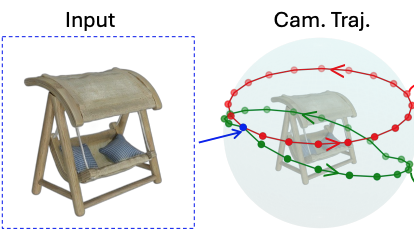

"ACT-R: Adaptive Camera Trajectories for Single View 3D Reconstruction", 3D Vision (3DV), 2026.

[Project page | arXiv | Code | bibtex]

We introduce adaptive view planning to multi-view synthesis, aiming to improve both occlusion revelation and 3D consistency for single-view 3D reconstruction. Instead of generating an unordered set of views independently or simultaneously, we generate a sequence of views, leveraging temporal consistency to enhance 3D coherence. Most importantly, our view sequence is not determined by a pre-determined camera setup. Instead, we compute an adaptive camera trajectory (ACT), specifically, an orbit of camera views, which maximizes the visibility of occluded regions of the 3D object to be reconstructed. Once the best orbit is found, we feed it to a video diffusion model to generate novel views around the orbit ... |

|

1. Aditya Vora, Lily Goli, Andrea Tagliasacchi, and Hao Zhang,

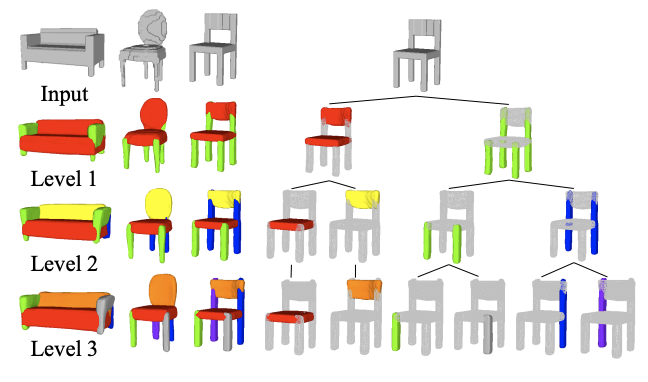

"HiT: Hierarchical Transformers for Unsupervised 3D Shape Abstraction", 3D Vision (3DV), 2026.

[Project page | arXiv | bibtex]

We introduce HiT, a novel hierarchical neural field representation for 3D shapes that learns general hierarchies in a coarse-to-fine manner across different shape categories in an unsupervised setting. Our key contribution is a hierarchical transformer (HiT), where each level learns parent–child relationships of the tree hierarchy using a compressed codebook. This codebook enables the network to automatically identify common substructures across potentially diverse shape categories. Unlike previous works that constrain the task to a fixed hierarchical structure (e.g., binary), we impose no such restriction ...

|

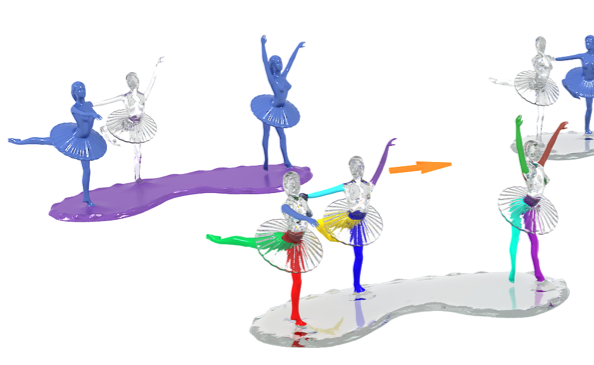

8. Sauradip Nag, Daniel Cohen-Or, Hao Zhang, and Ali Mahdavi-Amiri,

"In-2-4D: Inbetweening from Two Single-View Images to 4D Generation", ACM SIGGRAPH Asia, 2025.

[Project page | arXiv | Youtube video | bibtex]

We pose a new problem, In-2-4D, for generative 4D (i.e., 3D + motion) inbetweening to interpolate two single-view images. In contrast to video/4D generation from only text or a single image, our interpolative task can leverage more precise motion control to better constrain the generation. Given two monocular RGB images representing the start and end states of an object in motion, our goal is to generate and reconstruct the motion in 4D, without making assumptions on the object category, motion type, length, or complexity. To handle such arbitrary and diverse motions, we utilize a foundational video interpolation model for motion prediction and employ a hierarchical approach through keyframes to address large frame-to-frame motion gaps that can lead to ambiguous interpretations ...

|

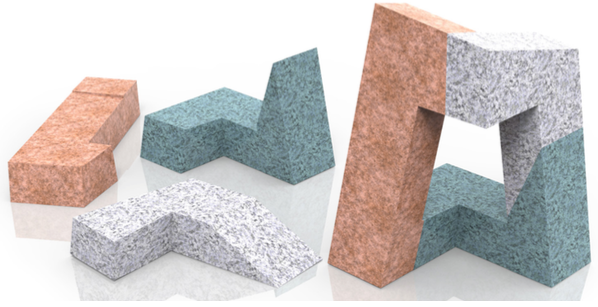

7. Qimin Chen, Yuezhi Yang, Yifan Wang, Vladimir Kim, Siddhartha Chaudhuri, Hao Zhang, and Zhiqin Chen,

"ART-DECO: Arbitrary Text Guidance for 3D Detailizer Construction", ACM SIGGRAPH Asia, 2025.

[Project page | arXiv | bibtex]

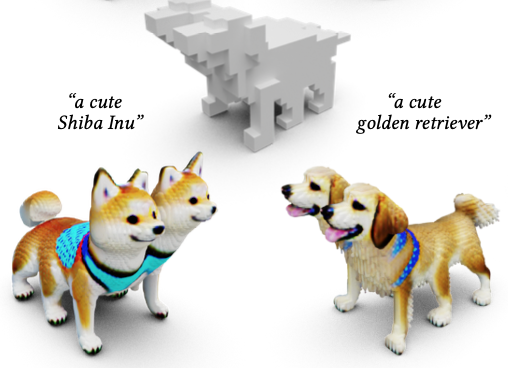

We introduce a 3D detailizer, a neural model which can instantaneously (in <1s) transform a coarse 3D shape proxy into a high-quality asset with detailed geometry and texture as guided by an input text prompt. Our model is trained using the text prompt, which defines the shape class and characterizes the appearance and fine-grained style of the generated details. The coarse 3D proxy, which can be easily varied and adjusted (e.g., via user editing), provides structure control over the final shape. Importantly, our detailizer is not optimized for a single shape; it is the result of distilling a generative model, so that it can be reused, without retraining, to generate any number of shapes, with varied structures, whose local details all share a consistent style and appearance. |

|

6. Sai Raj Kishore Perla, Aditya Vora, Sauradip Nag, Ali Mahdavi-Amiri, and Hao Zhang,

"ASIA: Adaptive 3D Segmentation using Few Image Annotations", ACM SIGGRAPH Asia, 2025.

[Project page | arXiv | bibtex]

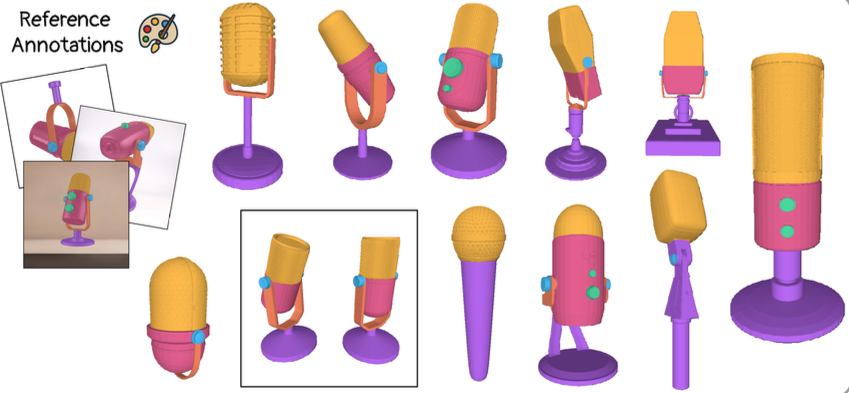

We introduce ASIA (Adaptive 3D Segmentation using few Image Annotations), a novel framework that enables segmentation of possibly non-semantic and non-text describable "parts" in 3D. Our segmentation is controllable through a few user-annotated in-the-wild images, which are easier to collect than multi-view images, less demanding to annotate than 3D models, and more precise than potentially ambiguous text descriptions. Our method leverages the rich priors of text-to-image diffusion models, such as Stable Diffusion, to transfer segmentations from image space to 3D, even when the annotated and target objects differ significantly in geometry or structure. During training, we optimize a text token for each segment and fine-tune our model with a novel cross-view part correspondence loss. |

|

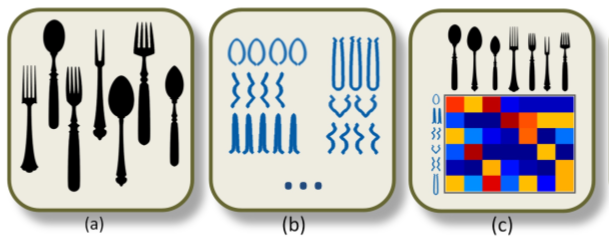

5. Ruiqi Wang and Hao Zhang,

"RESAnything: Attribute Prompting for Arbitrary Referring Segmentation", NeurIPS, 2025.

[arXiv | Project page | bibtex]

We present an open-vocabulary and zero-shot method for arbitrary referring expression segmentation (RES), targeting more general input expressions than those handled by prior works. Specifically, our inputs encompass both object- and part-level labels as well as implicit references pointing to properties or qualities of object/part function, design, style, material, etc. Our model, coined RESAnything, leverages Chain-of-Thoughts (CoT) reasoning, where the key idea is attribute prompting. We generate detailed descriptions of object/part attributes including shape, color, and location for potential segment proposals through systematic prompting of a large language model (LLM), where the proposals are produced by a foundational image segmentation model. |

|

4. Yilin Liu, Duoteng Xu, Xingyao Yu, Xiang Xu, Daniel Cohen-Or, Hao Zhang, and Hui Huang,

"HoLa: B-Rep Generation using a Holistic Latent Representation", ACM Transactions on Graphics (Special Issue of SIGGRAPH), 2025.

[arXiv | Project page | bibtex]

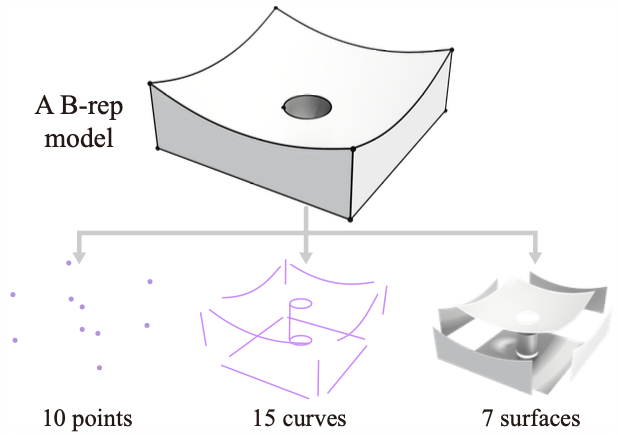

We introduce a novel representation for learning and generating Computer-Aided Design (CAD) models in the form of boundary representations (B-Reps). Our representation unifies the continuous geometric properties of B-Rep primitives in different orders (e.g., surfaces and curves) and their discrete topological relations in a holistic latent (HoLa) space. This is based on the simple observation that the topological connection between two surfaces is intrinsically tied to the geometry of their intersecting curve. Such a prior allows us to reformulate topology learning in B-Reps as a geometric reconstruction problem in Euclidean space. Specifically, we eliminate the presence of curves, vertices, and all the topological connections in the latent space by learning to distinguish and derive curve geometries from a pair of surface primitives via a neural intersection network ... |

|

3. Changhao Li, Yu Xin, Xiaowei Zhou, Ariel Shamir, Hao Zhang, Ligang Liu, and Ruizhen Hu,

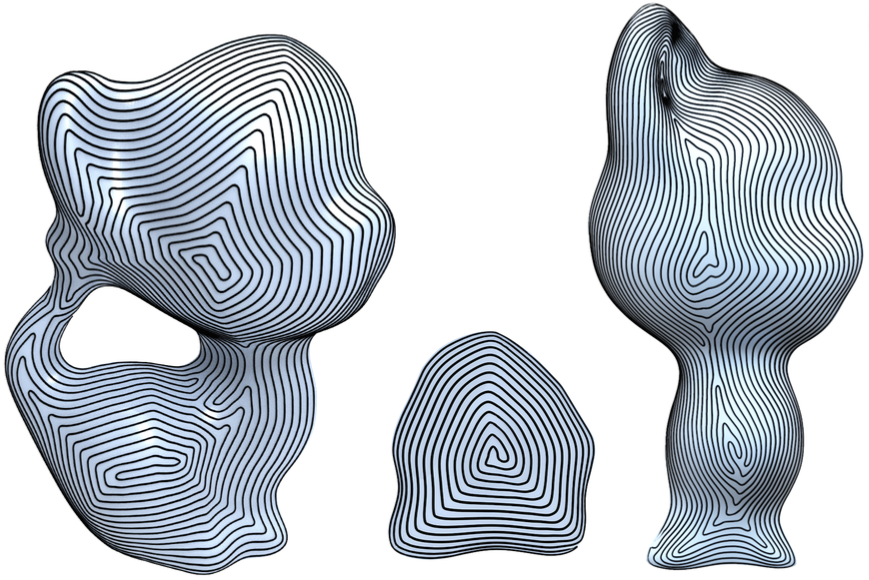

"MASH: Masked Anchored SpHerical Distances for 3D Shape Representation and Generation", ACM SIGGRAPH, 2025.

[Project page | arXiv | bibtex]

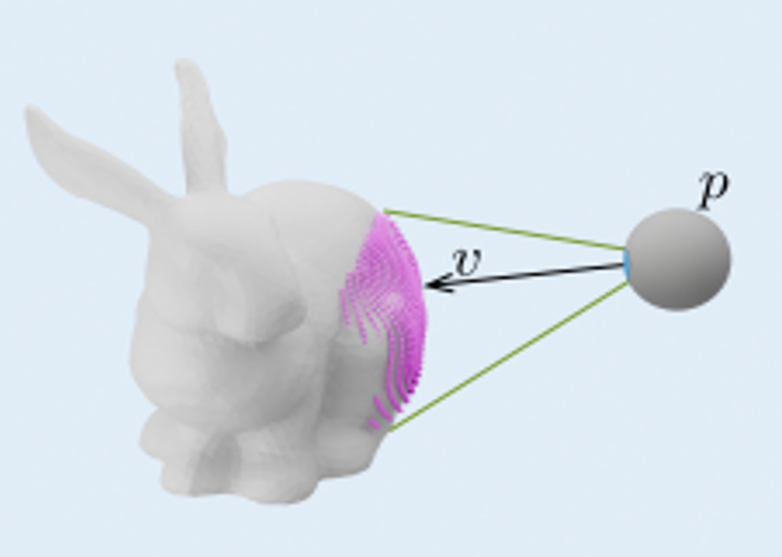

We introduce Masked Anchored SpHerical Distances (MASH), a novel multi-view and parametrized representation of 3D shapes. Inspired by multi-view geometry and motivated by the importance of perceptual shape understanding for learning 3D shapes, MASH represents a 3D shape as a collection of observable local surface patches, each defined by a spherical distance function emanating from an anchor point. We further leverage the compactness of spherical harmonics to encode the MASH functions, combined with a generalized view cone with a parameterized base that masks the spatial extent of the spherical function to attain locality. We develop a differentiable optimization algorithm capable of converting any point cloud into a MASH representation accurately approximating ground-truth surfaces with arbitrary geometry and topology ... |

|

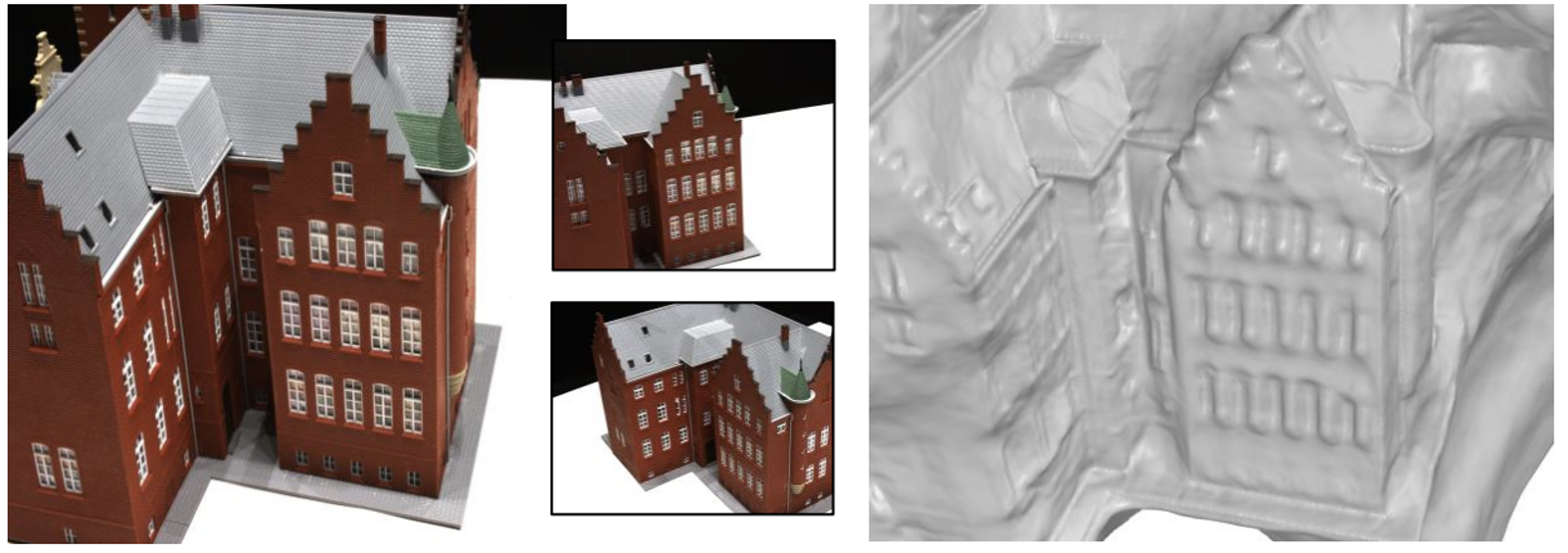

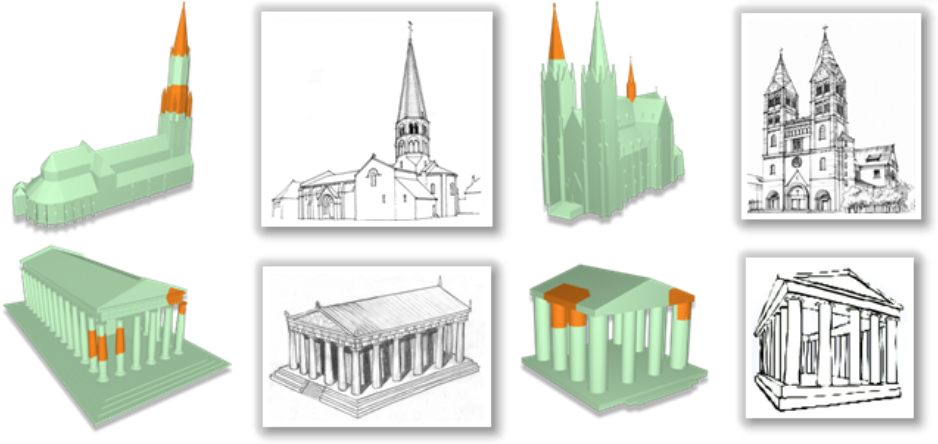

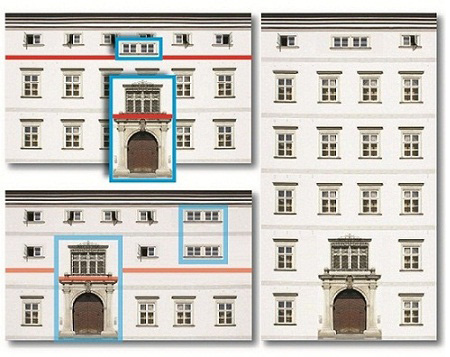

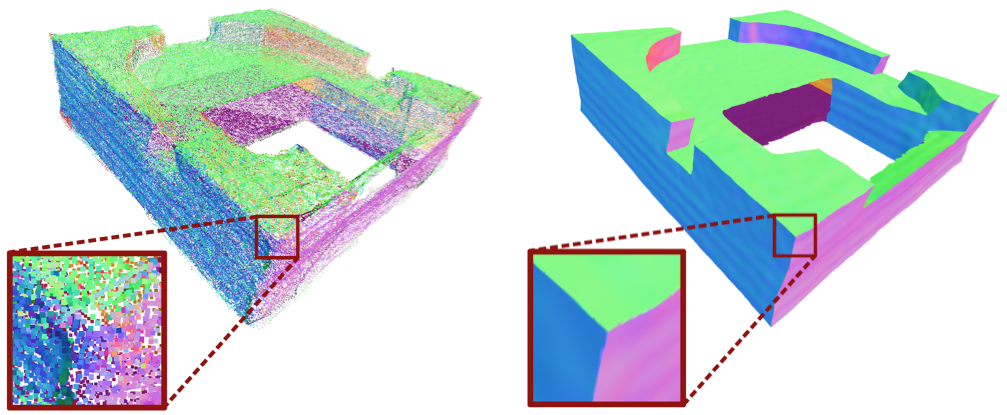

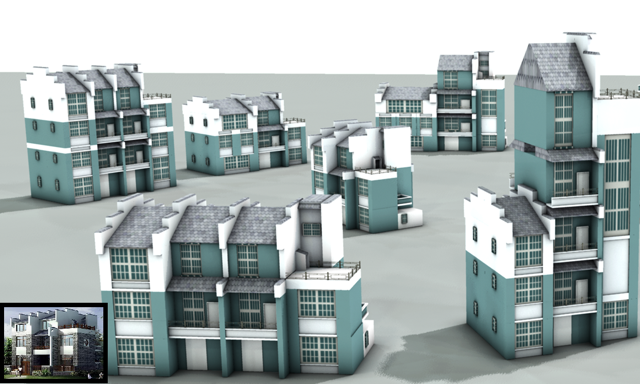

2. Qirui Huang, Runze Zhang, Kangjun Liu, Minglun Gong, Hao Zhang, and Hui Huang,

"ArcPro: Architectural Programs for Structured 3D Abstraction of Sparse Points", CVPR (highlight), 2025.

[arXiv | Project | bibtex]

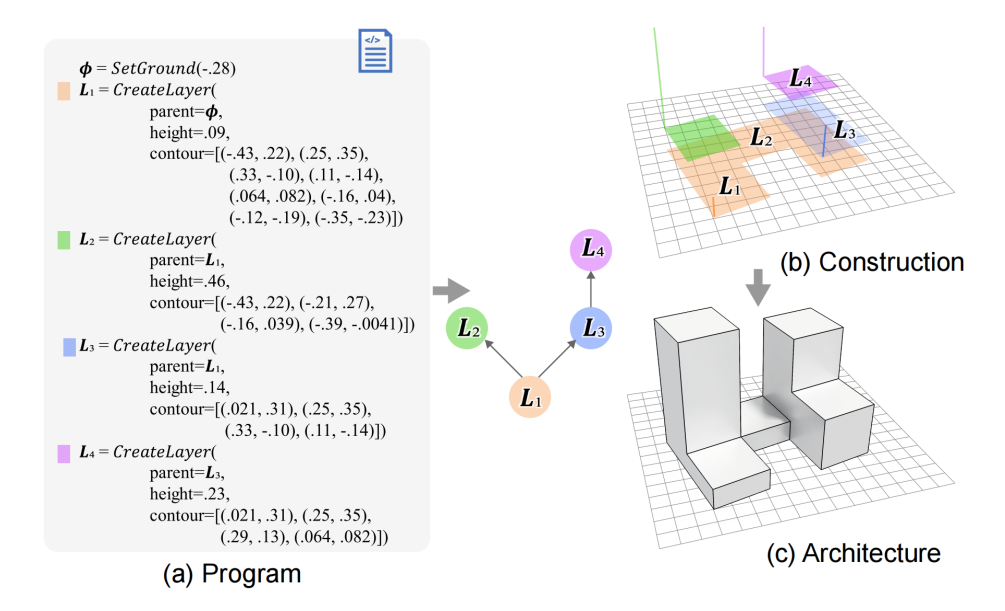

We introduce ArcPro, a novel learning framework built on architectural programs to recover structured 3D abstractions from highly sparse and low-quality point clouds. Specifically, we design a domain-specific language (DSL) to hierarchically represent building structures as a program, which can be efficiently converted into a mesh. We bridge feedforward and inverse procedural modeling by using a feedforward process for training data synthesis, allowing the network to make reverse predictions. We train an encoder-decoder on the points-program pairs to establish a mapping from unstructured point clouds to architectural programs, where a 3D convolutional encoder extracts point cloud features and a transformer decoder autoregressively predicts the programs in a tokenized form ... |

|

1. Dingdong Yang, Yizhi Wang, Konrad Schindler, Ali Mahdavi-Amiri, and Hao Zhang,

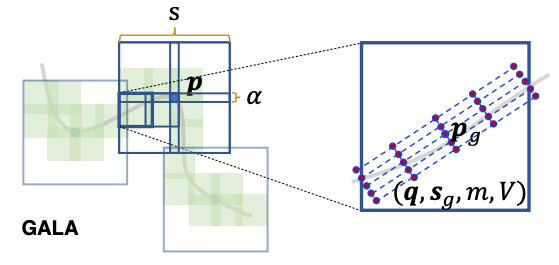

"GALA: Geometry-Aware Local Adaptive Grids for Detailed 3D Generation", ICLR, 2025.

[Project page | arXiv | bibtex]

We propose GALA, a novel representation of 3D shapes that (i) excels at capturing and reproducing complex geometry and surface details, (ii) is computationally efficient, and (iii) lends itself to 3D generative modelling with modern, diffusion-based schemes. The key idea of GALA is to exploit both the global sparsity of surfaces within a 3D volume and their local surface properties ... |

|

12. Liqiang Lin, Wenpeng Wu, Chi-Wing Fu, Hao Zhang, and Hui Huang,

"CRAYM: Neural Field Optimization via Camera RAY Matching", NeurIPS, 2024.

[Project page | arXiv | bibtex]

We introduce camera ray matching (CRAYM) into the joint optimization of camera poses and neural fields from multi-view images. The optimized field, referred to as a feature volume, can be "probed" by the camera rays for novel view synthesis (NVS) and 3D geometry reconstruction. One key reason for matching camera rays, instead of pixels as in prior works, is that the camera rays can be parameterized by the feature volume to carry both geometric and photometric information. Multi-view consistencies involving the camera rays and scene rendering can be naturally integrated into the joint optimization and network training, to impose physically meaningful constraints to improve the final quality of both the geometric reconstruction and photorealistic rendering. We demonstrate the effectiveness of CRAYM for both NVS and geometry reconstruction, over dense- or sparse-view settings, with qualitative and quantitative comparisons to state-of-the-art alternatives. |

|

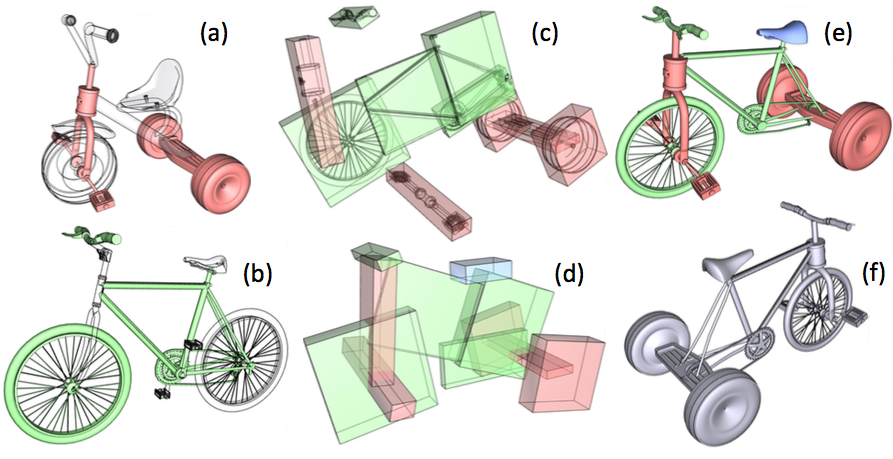

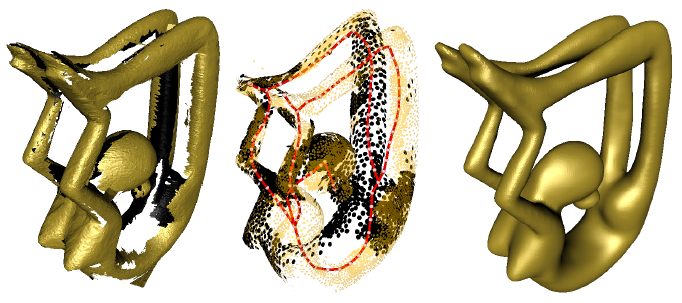

11. Fenggen Yu, Yiming Qian, Xu Zhang, Francisca Gil-Ureta, Brian Jackson, Eric Bennett, and Hao Zhang,

"DPA-Net: Structured 3D Abstraction from Sparse Views via Differentiable Primitive Assembly", ECCV, 2024.

[arXiv | bibtex]

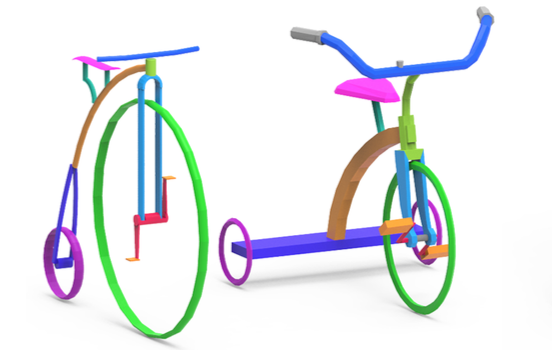

We present a differentiable rendering framework to learn structured 3D abstractions in the form of primitive assemblies from sparse RGB images capturing a 3D object. By leveraging differentiable volume rendering, our method does not require 3D supervision. Architecturally, our network follows the general pipeline of an image-conditioned neural radiance field (NeRF) exemplified by pixelNeRF for color prediction. As our core contribution, we introduce differential primitive assembly (DPA) into NeRF to output a 3D occupancy field in place of density prediction, where the predicted occupancies serve as opacity values for volume rendering. Our network, coined DPA-Net, produces a union of convexes ...
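As a rough illustration of how per-sample occupancies can stand in for opacity in volume rendering, the sketch below applies standard front-to-back alpha compositing along one ray. It is not taken from the paper: the function name `composite_ray` and the sample values are hypothetical, and the network that predicts the occupancies is omitted.

```python
def composite_ray(occupancies, colors):
    """Front-to-back alpha compositing along one ray.

    occupancies: per-sample values in [0, 1], used directly as opacity.
    colors: per-sample RGB tuples.
    Returns the composited pixel colour and the per-sample weights.
    """
    transmittance = 1.0  # fraction of light not yet absorbed
    pixel = [0.0, 0.0, 0.0]
    weights = []
    for occ, color in zip(occupancies, colors):
        weight = transmittance * occ
        weights.append(weight)
        for c in range(3):
            pixel[c] += weight * color[c]
        transmittance *= 1.0 - occ
    return pixel, weights


# Hypothetical samples: empty space, then an opaque green surface, then blue behind it.
pixel, weights = composite_ray(
    [0.0, 1.0, 1.0],
    [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)],
)
```

Because every operation here is differentiable with respect to the occupancies, an image loss on `pixel` can in principle supervise the occupancy field without 3D ground truth.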

|

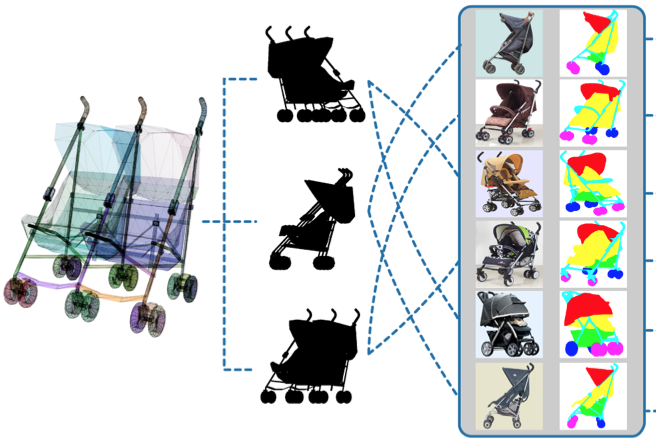

10. Ruiqi Wang, Akshay Gadi Patil, Fenggen Yu, and Hao Zhang,

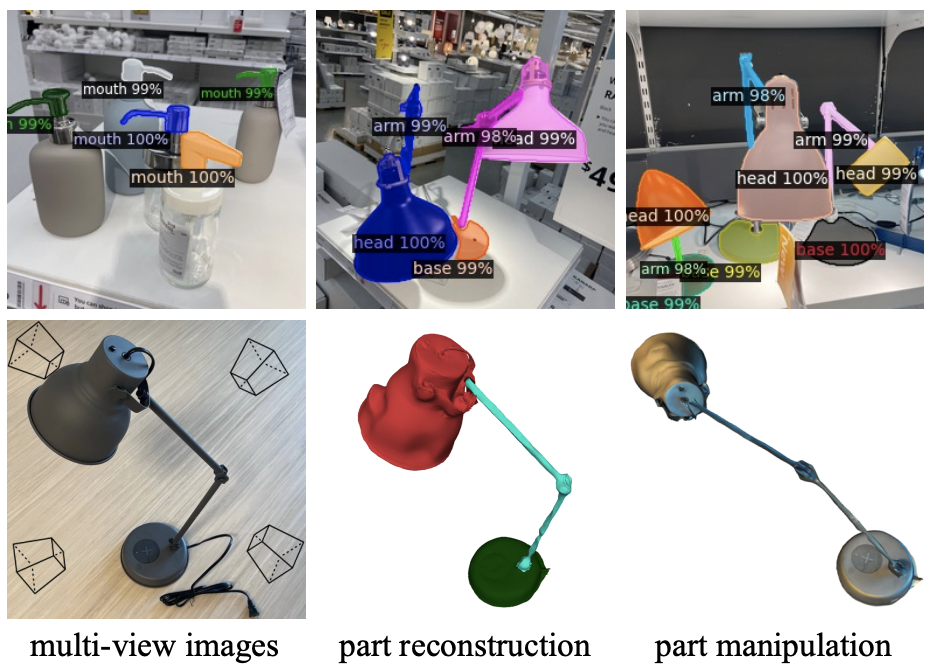

"Active Coarse-to-Fine Segmentation of Moveable Parts from Real Images", ECCV, 2024.

[Project page | arXiv | bibtex]

We introduce the first active learning (AL) model for high-accuracy instance segmentation of moveable parts from RGB images of real indoor scenes. Specifically, our goal is to obtain fully validated segmentation results by humans while minimizing manual effort. To this end, we employ a transformer that utilizes a masked-attention mechanism to supervise the active segmentation. To enhance the network tailored to moveable parts, we introduce a coarse-to-fine AL approach which first uses an object-aware masked attention and then a pose-aware one, leveraging the hierarchical nature of the problem and a correlation between moveable parts and object poses and interaction directions. |

|

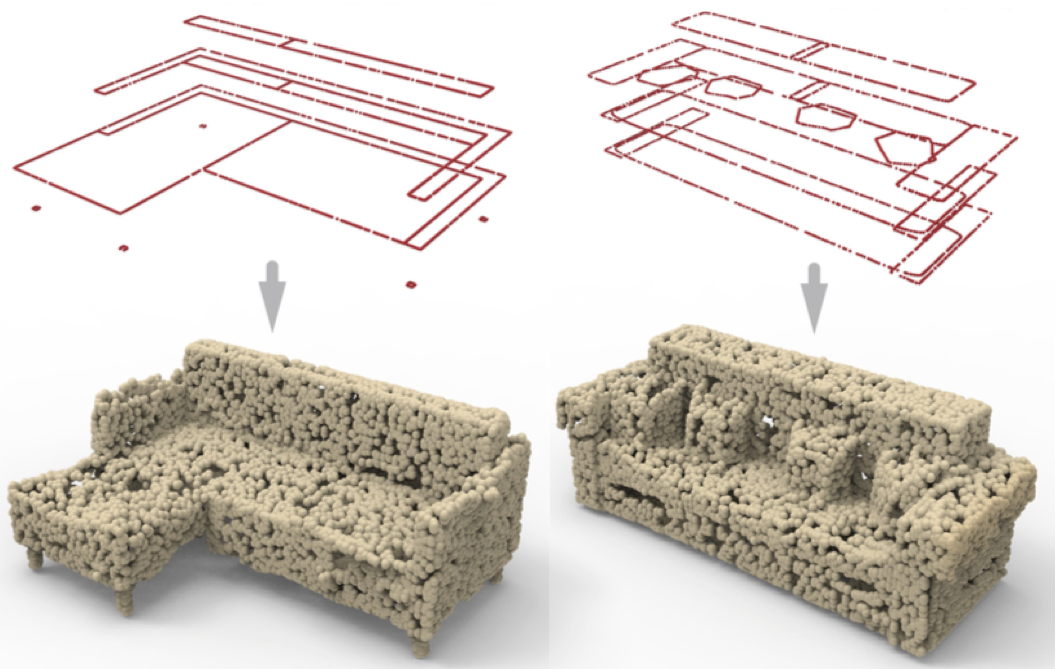

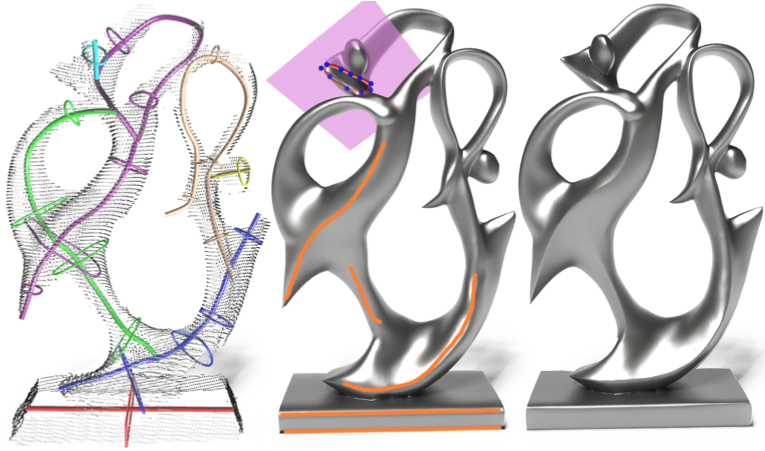

9. Qimin Chen, Zhiqin Chen, Vladimir Kim, Noam Aigerman, Hao Zhang, and Siddhartha Chaudhuri,

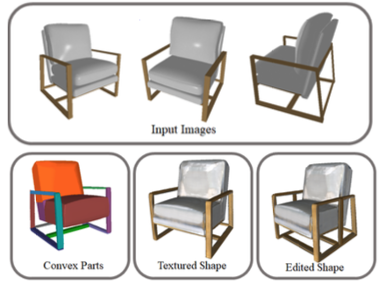

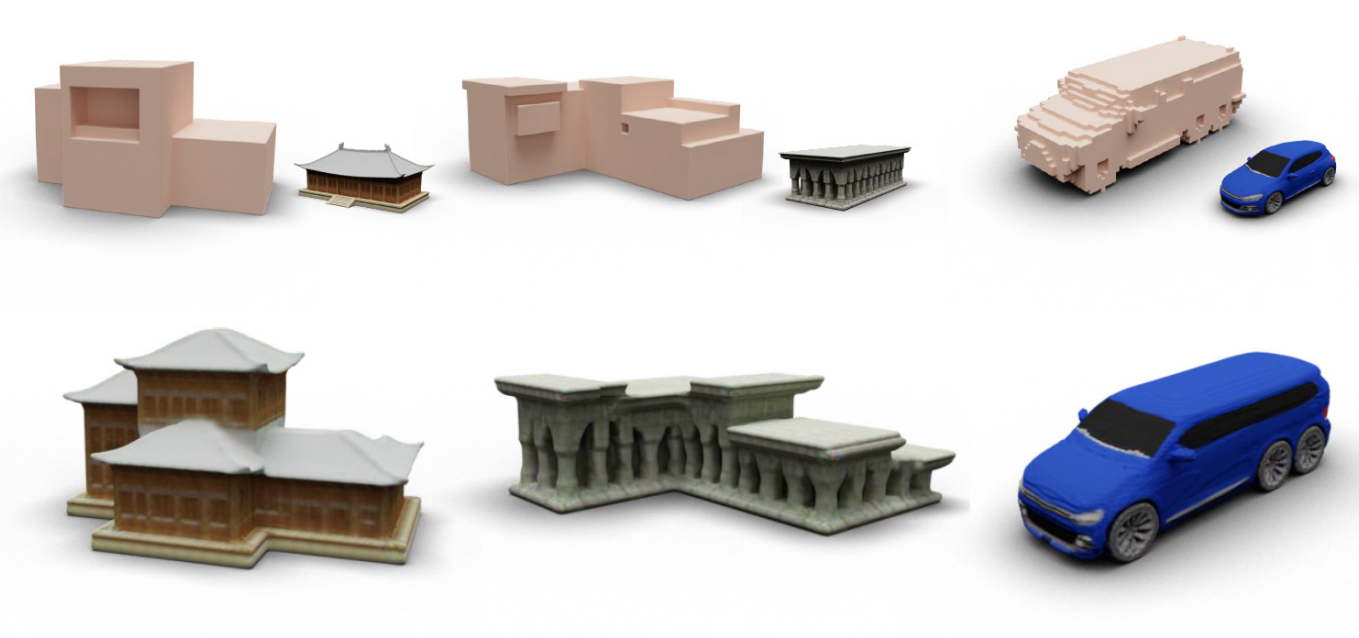

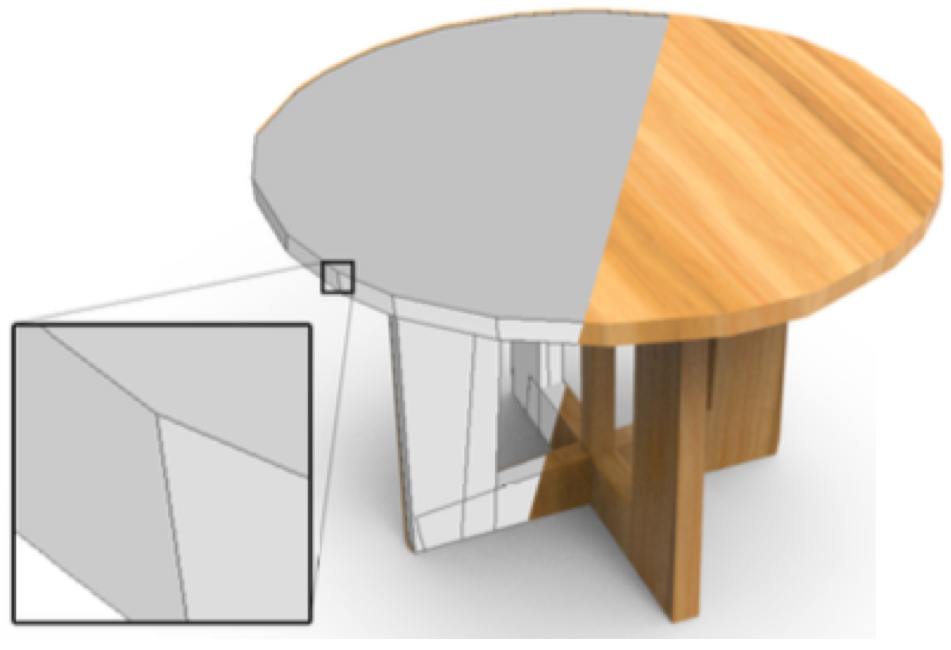

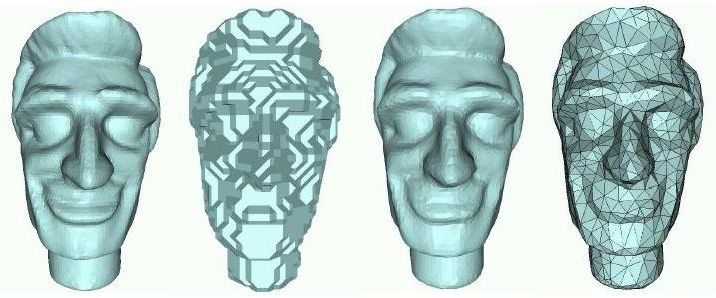

"DECOLLAGE: 3D Detailization by Controllable, Localized, and Learned Geometry Enhancement", ECCV, 2024.

[arXiv | bibtex]

We present a 3D modeling method which enables end-users to refine or detailize 3D shapes using machine learning, expanding the capabilities of AI-assisted 3D content creation. Given a coarse voxel shape (e.g., one produced with a simple box extrusion tool or via generative modeling), a user can directly "paint" desired target styles representing compelling geometric details, from input exemplar shapes, over different regions of the coarse shape. These regions are then up-sampled into high-resolution geometries which adhere to the painted styles.

|

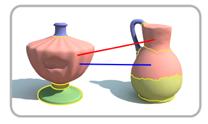

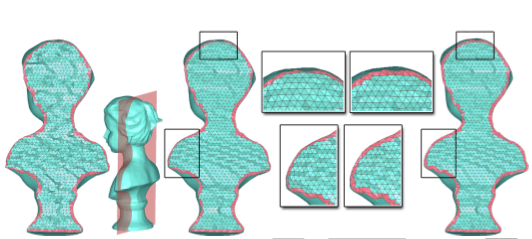

8. Sai Raj Kishore Perla, Yizhi Wang, Ali Mahdavi-Amiri, and Hao Zhang,

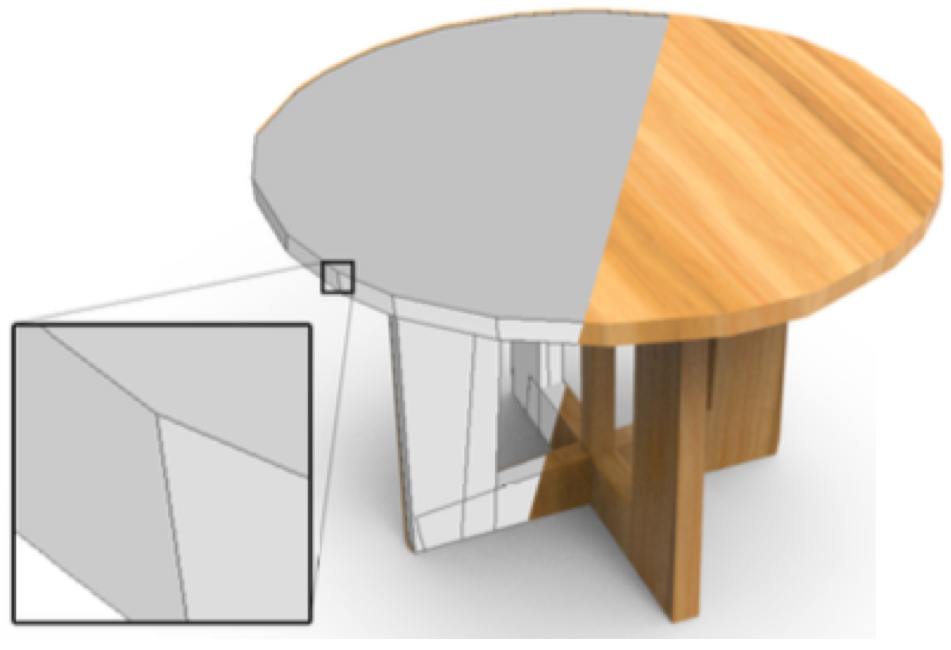

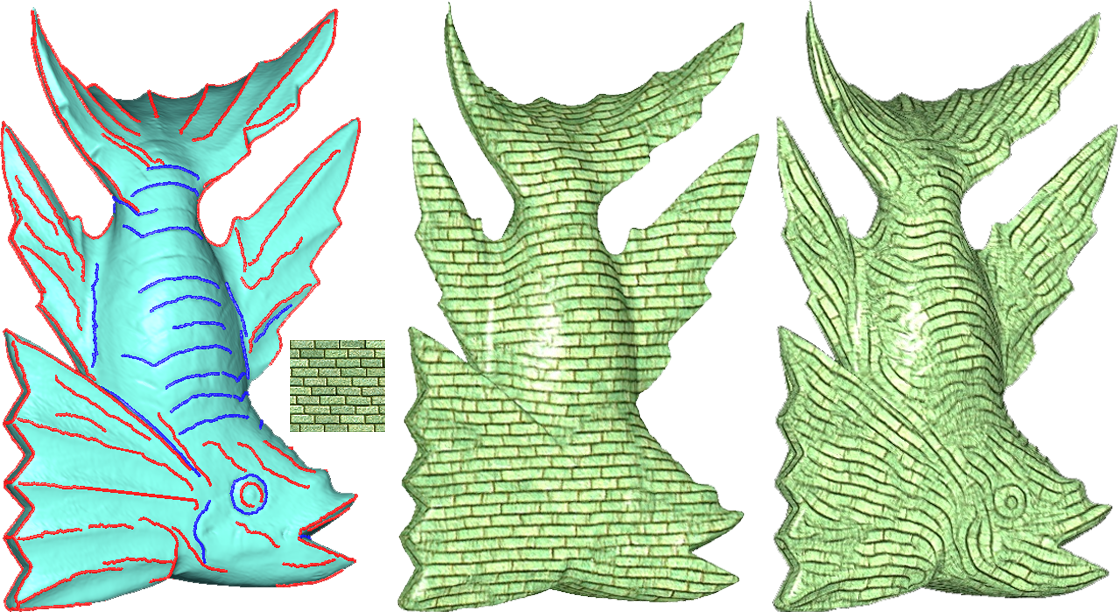

"EASI-Tex: Edge-Aware Mesh Texturing from Single Image", ACM Transactions on Graphics (Special Issue of SIGGRAPH), 2024.

[arXiv | bibtex]

We introduce a novel approach for single-image mesh texturing, which employs diffusion models with judicious conditioning to seamlessly transfer an object's texture from a single RGB image to a given 3D mesh object. We do not assume that the two objects belong to the same category, and even if they do, there can be significant discrepancies in their geometry and part proportions. Our method aims to rectify the discrepancies by respecting both shape semantics and edge features in the inputs to produce clean and sharp mesh texturization. Leveraging a pre-trained Stable Diffusion generator, our method is capable of transferring textures in the absence of a direct guide from the single-view image. |

|

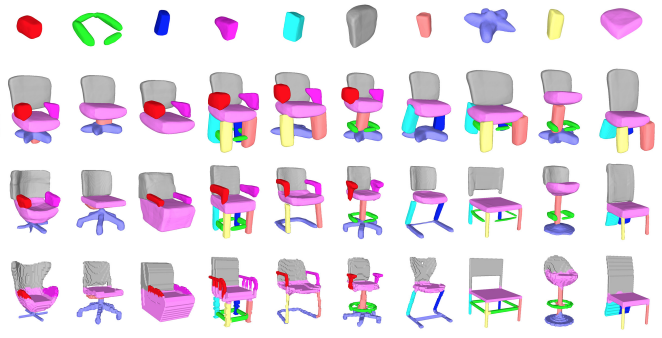

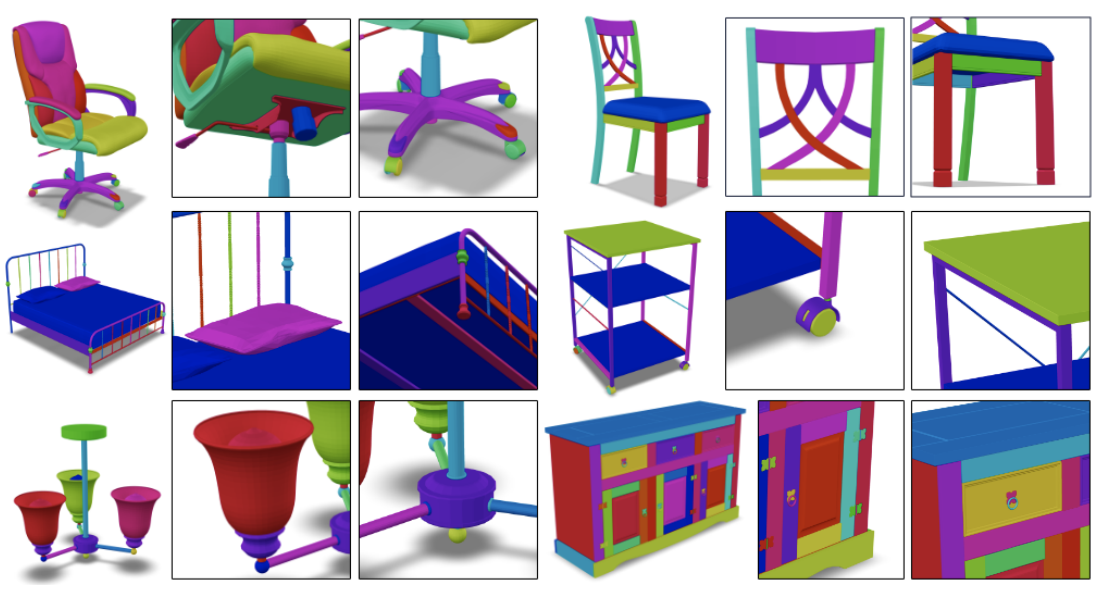

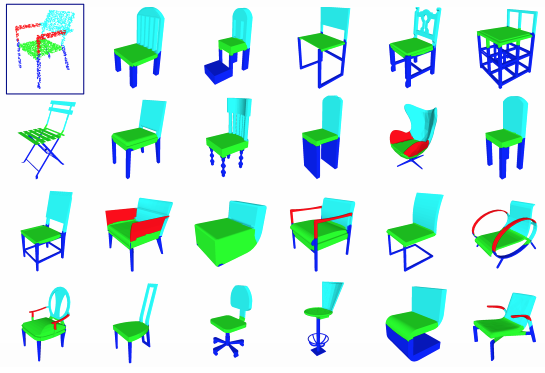

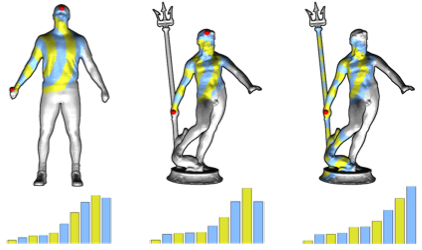

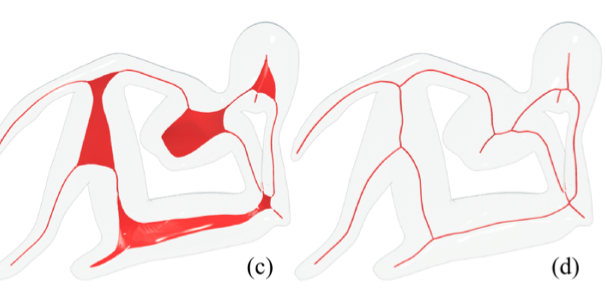

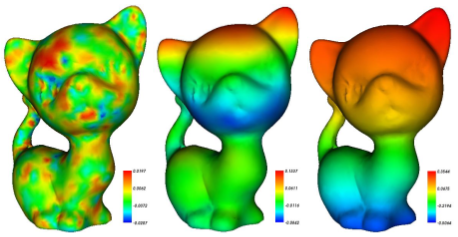

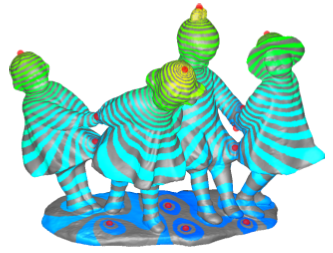

7. Zhiqin Chen, Qimin Chen, Hang Zhou, and Hao Zhang,

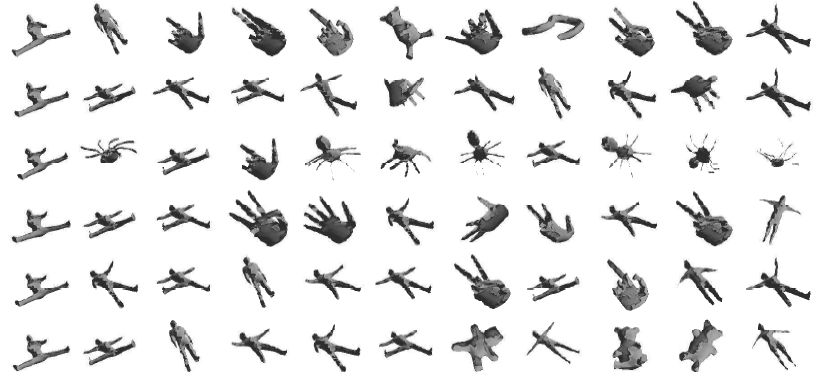

"DAE-Net: Deforming Auto-Encoder for fine-grained shape co-segmentation", ACM SIGGRAPH, 2024.

[arXiv | bibtex]

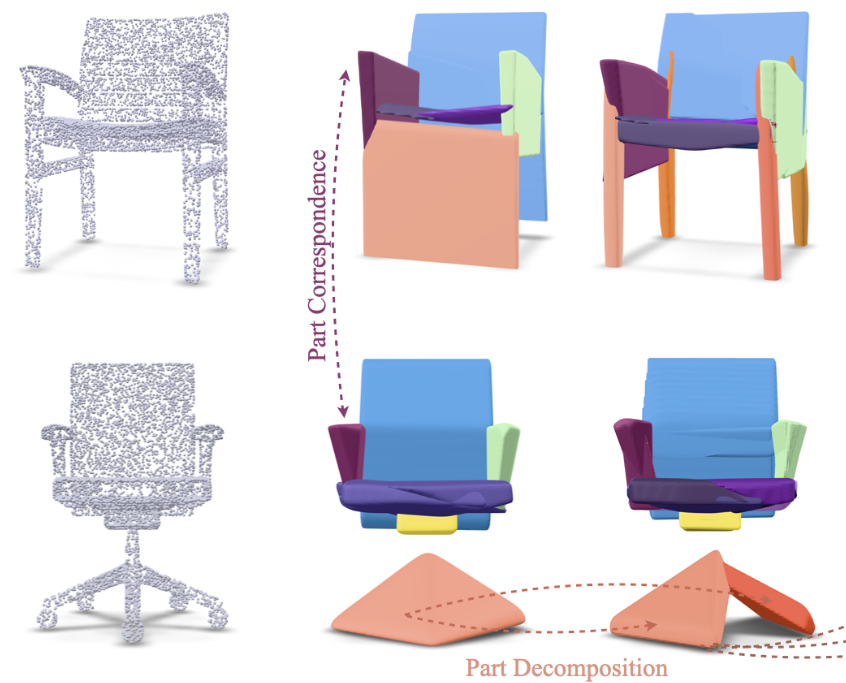

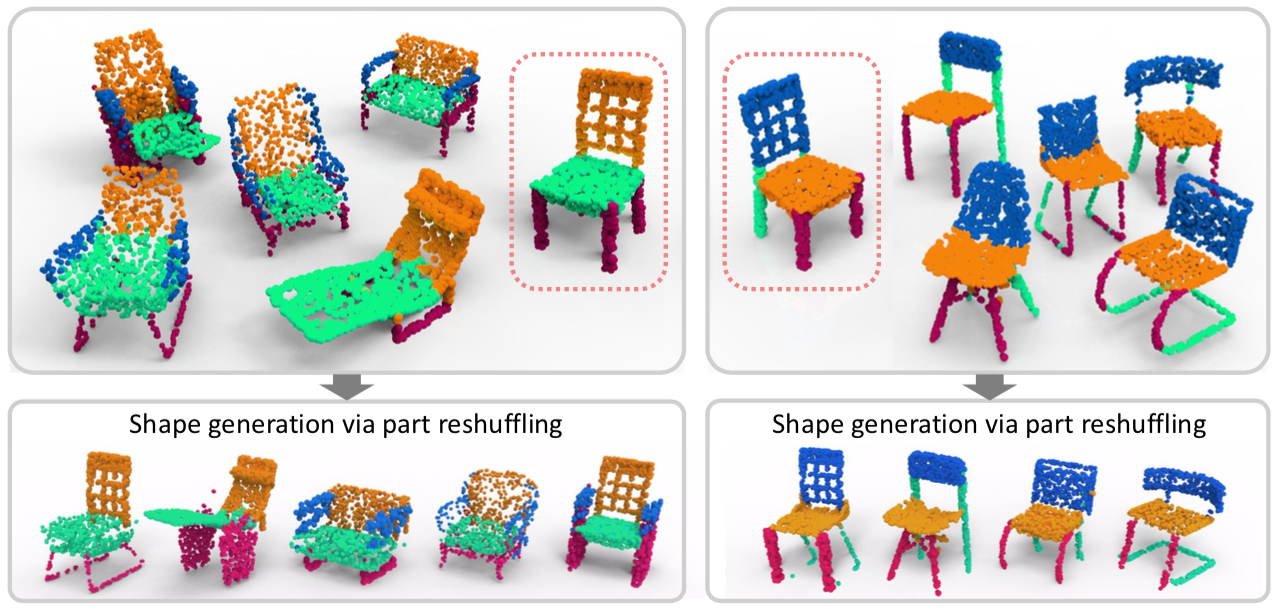

We present an unsupervised 3D shape co-segmentation method which learns a set of deformable part templates from a shape collection. To accommodate structural variations in the collection, our network composes each shape by a selected subset of template parts which are affine-transformed. To maximize the expressive power of the part templates, we introduce a per-part deformation network to enable the modeling of diverse parts with substantial geometry variations, while imposing constraints on the deformation capacity to ensure fidelity to the originally represented parts. |

|

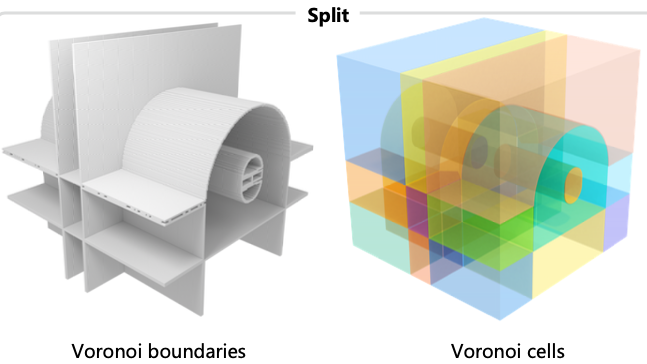

6. Yilin Liu, Jialei Chen, Shanshan Pan, Daniel Cohen-Or, Hao Zhang, and Hui Huang,

"Split-and-Fit: Learning B-Reps via Structure-Aware Voronoi Partitioning", ACM Transactions on Graphics (Special Issue of SIGGRAPH), 2024.

[arXiv | bibtex]

We introduce a novel method for acquiring boundary representations (B-Reps) of 3D CAD models which involves a two-step process: it first applies a spatial partitioning, referred to as the "split", followed by a "fit" operation to derive a single primitive within each partition. Specifically, our partitioning aims to produce the classical Voronoi diagram of the set of ground-truth (GT) B-Rep primitives. |
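To make the "split" target concrete, the sketch below labels query points by their nearest primitive, which is what a Voronoi diagram of a primitive set amounts to. It assumes the primitives are already known, whereas the method described above has to predict the partition; the helper names and the two example primitives are hypothetical.

```python
import math


def dist_to_plane(p, point_on_plane, unit_normal):
    """Unsigned distance from point p to an infinite plane."""
    return abs(sum((pi - qi) * ni for pi, qi, ni in zip(p, point_on_plane, unit_normal)))


def dist_to_sphere(p, center, radius):
    """Unsigned distance from point p to a sphere surface."""
    return abs(math.dist(p, center) - radius)


def voronoi_label(p, primitives):
    """Index of the primitive whose surface is closest to p."""
    distances = [distance_fn(p) for distance_fn in primitives]
    return min(range(len(distances)), key=distances.__getitem__)


# Two hypothetical primitives: the plane z = 0 and a unit sphere centred at (0, 0, 3).
primitives = [
    lambda p: dist_to_plane(p, (0.0, 0.0, 0.0), (0.0, 0.0, 1.0)),
    lambda p: dist_to_sphere(p, (0.0, 0.0, 3.0), 1.0),
]
queries = [(0.5, 0.5, 0.2), (0.0, 0.0, 1.8)]
labels = [voronoi_label(p, primitives) for p in queries]
```

Each Voronoi cell then contains exactly one primitive, which is what allows the later "fit" step to recover a single primitive per partition.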

|

5. Jingyu Hu, Kai-Hei Hui, Zhengzhe Liu, Hao Zhang, and Chi-Wing Fu,

"CNS-Edit: 3D Shape Editing via Coupled Neural Shape Optimization", ACM SIGGRAPH, 2024.

[arXiv | bibtex]

We introduce a new approach based on a coupled representation and a neural volume optimization to implicitly perform 3D shape editing in latent space. This work has three innovations. First, we design the coupled neural shape (CNS) representation for supporting 3D shape editing. This representation includes a latent code, which captures high-level global semantics of the shape, and a 3D neural feature volume, which provides a spatial context to associate with the local shape changes given by the editing. Second, ... |

|

4. Yizhi Wang, Wallace Lira, Wenqi Wang, Ali Mahdavi-Amiri, and Hao Zhang,

"Slice3D: Multi-Slice, Occlusion-Revealing, Single View 3D Reconstruction", CVPR, 2024.

[Project page | arXiv | bibtex]

We introduce multi-slice reasoning, a new notion for single-view 3D reconstruction which challenges the current and prevailing belief that multi-view synthesis is the most natural conduit between single-view and 3D. Our key observation is that object slicing is more advantageous than altering views to reveal occluded structures. Specifically, slicing is more occlusion-revealing since it can peel through any occluders without obstruction. In the limit, i.e., with infinitely many slices, it is guaranteed to unveil all hidden object parts. |

|

3. Dingdong Yang, Yizhi Wang, Ali Mahdavi-Amiri, and Hao Zhang,

"BRICS: Bi-level feature Representation of Image CollectionS", preprint, 2023.

[Project page | arXiv | bibtex]

We present BRICS, a bi-level feature representation for image collections, which consists of a key code space on top of a feature grid space. Specifically, our representation is learned by an autoencoder to encode images into continuous key codes, which are used to retrieve features from groups of multi-resolution feature grids. Our key codes and feature grids are jointly trained continuously with well-defined gradient flows, leading to high usage rates of the feature grids and improved generative modeling compared to discrete Vector Quantization (VQ). Differently from existing continuous representations such as KL-regularized latent codes, our key codes are strictly bounded in scale and variance. |

|

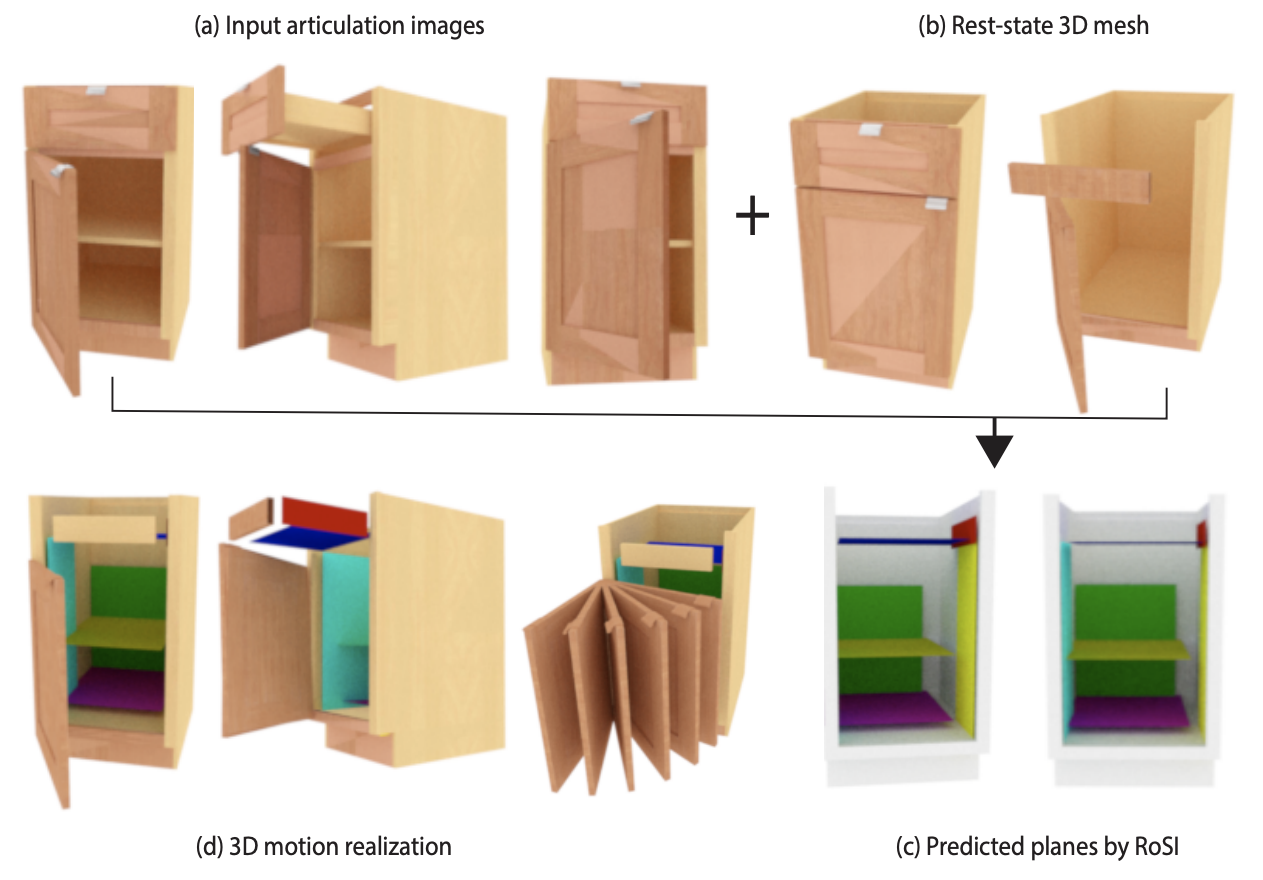

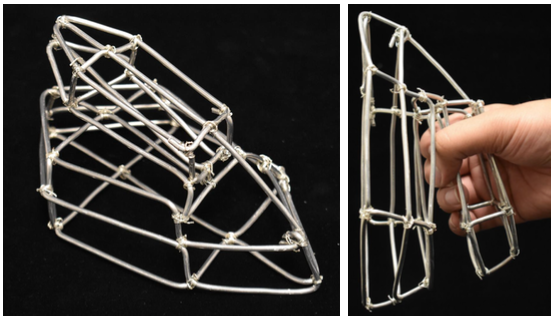

2. Akshay Gadi Patil, Yiming Qian, Shan Yang, Brian Jackson, Eric Bennett, and Hao Zhang,

"RoSI: Recovering 3D Shape Interiors from Few Articulation Images", preprint, 2023.

[arXiv | bibtex]

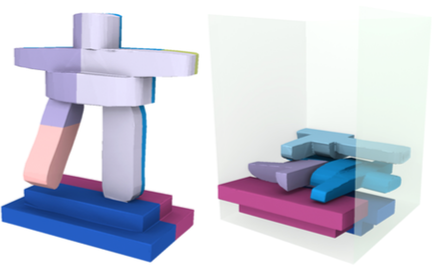

The dominant majority of 3D models that appear in gaming, VR/AR, and those we use to train geometric deep learning algorithms are incomplete, since they are modeled as surface meshes and are missing their interior structures. We present a learning framework to recover the shape interiors (RoSI) of existing 3D models with only their exteriors from multi-view and multi-articulation images. Given a set of RGB images that capture a target 3D object in different articulated poses, possibly from only a few views, our method infers the interior planes that are observable in the input images.

|

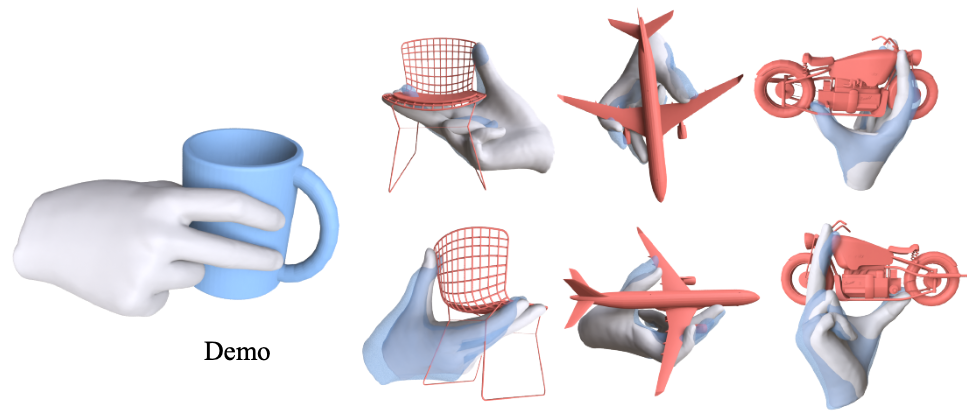

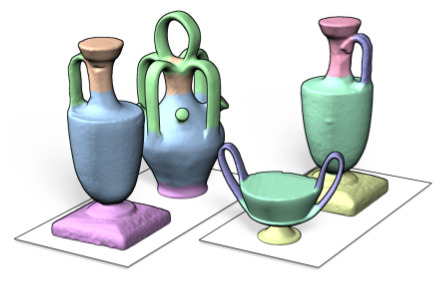

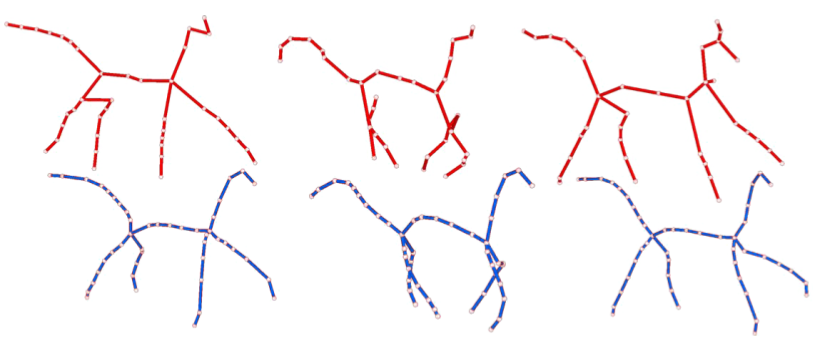

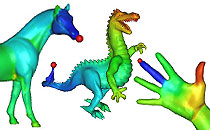

1. Zeyu Huang, Sisi Dai, Kai Xu, Hao Zhang, Hui Huang, and Ruizhen Hu,

"DINA: Deformable INteraction Analogy", Graphical Models, selected paper from Computational Visual Media (CVM), 2024.

[arXiv | bibtex]

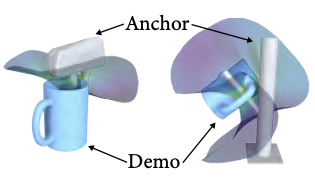

We introduce deformable interaction analogy (DINA) as a means to generate close interactions between two 3D objects. Given a single demo interaction between an anchor object (e.g., a hand) and a source object (e.g., a mug grasped by the hand), our goal is to generate many analogous 3D interactions between the same anchor object and various new target objects (e.g., a toy airplane), where the anchor object is allowed to be rigid or deformable.

|

15. Aditya Vora, Akshay Gadi Patil, and Hao Zhang,

"DiViNeT: 3D Reconstruction from Disparate Views via Neural Template Regularization", NeurIPS, 2023.

[arXiv | bibtex]

We present a volume rendering-based neural surface reconstruction method that takes as few as three disparate RGB images as input. Our key idea is to regularize the reconstruction, which is severely ill-posed and leaves significant gaps between the sparse views, by learning a set of neural templates that act as surface priors. Our method, coined DiViNeT, operates in two stages. The first stage learns the templates, in the form of 3D Gaussian functions, across different scenes, without 3D supervision. In the reconstruction stage, our predicted templates serve as anchors to help “stitch” the surfaces over sparse regions.

|

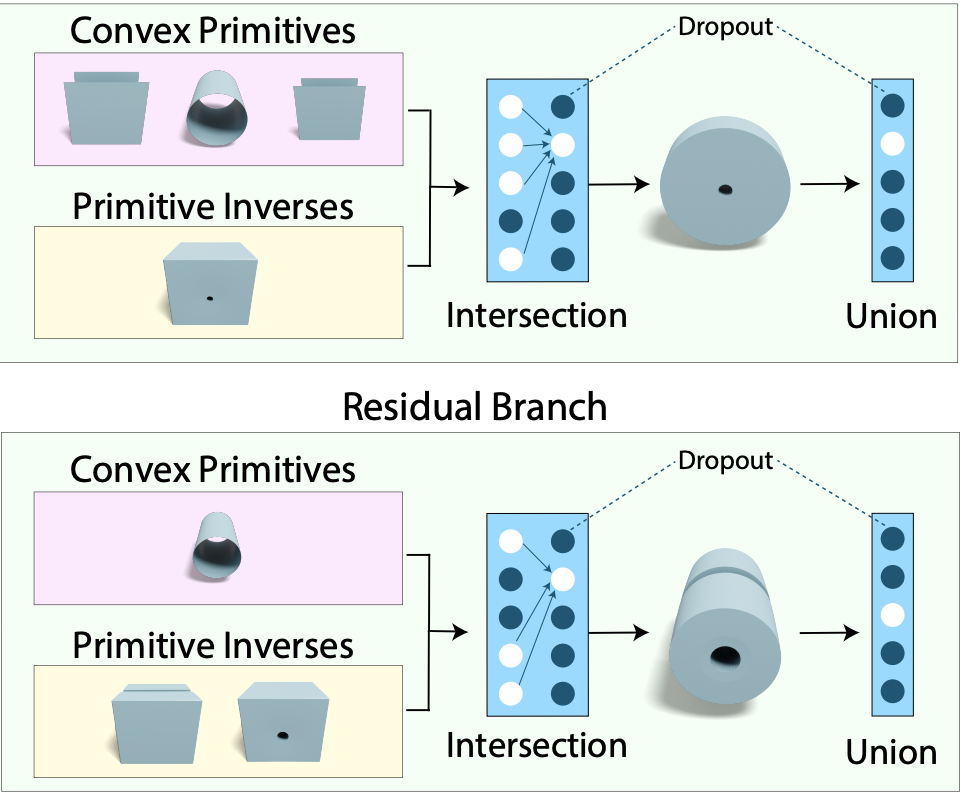

14. Fenggen Yu,

Qimin Chen,

Maham Tanveer,

Ali Mahdavi-Amiri, and

Hao Zhang,

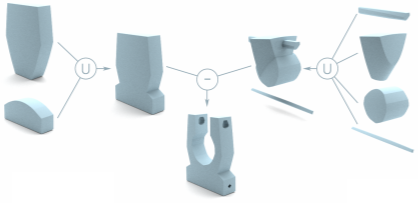

"D2CSG: Unsupervised Learning of Compact CSG Trees with Dual Complements and Dropouts",

NeurIPS, 2023.

[arXiv | bibtex]

We present D2CSG, a neural model composed of two dual and complementary network branches, with dropouts, for unsupervised learning of compact constructive solid geometry (CSG) representations of 3D CAD shapes. Our network is trained to reconstruct a 3D shape by a fixed-order assembly of quadric primitives, with both branches producing a union of primitive intersections or inverses. A key difference between D2CSG and all prior neural CSG models is its dedicated residual branch to assemble the potentially complex shape complement, which is subtracted from an overall shape modeled by the cover branch. With the shape complements, our network is provably general, while the weight dropout further improves compactness of the CSG tree by removing redundant primitives. |
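The cover-minus-residual structure can be illustrated with plain point-membership tests. The sketch below is only a hand-built toy under stated assumptions: it uses spheres as the quadric primitives, fixes the primitives by hand instead of learning them, and all helper names are hypothetical.

```python
def inside_sphere(center, radius):
    """Point-membership test for a solid sphere (one kind of quadric primitive)."""
    return lambda p: sum((a - b) ** 2 for a, b in zip(p, center)) <= radius ** 2


def inverse(primitive):
    """Complement of a primitive."""
    return lambda p: not primitive(p)


def intersection(*primitives):
    return lambda p: all(f(p) for f in primitives)


def union(*shapes):
    return lambda p: any(f(p) for f in shapes)


def difference(cover, residual):
    """Final shape: the cover shape with the residual shape subtracted."""
    return lambda p: cover(p) and not residual(p)


origin = (0.0, 0.0, 0.0)
# Each branch is a union of intersections of primitives or their inverses.
cover = union(intersection(inside_sphere(origin, 2.0)))
residual = union(intersection(inside_sphere(origin, 1.5), inverse(inside_sphere(origin, 0.5))))
shape = difference(cover, residual)  # a small ball inside a hollow shell
```

The residual here is itself a hollow shell, which hints at why a dedicated branch for the complement helps: a cavity of this kind is awkward to express as a single subtraction of one convex piece.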

|

13. Qimin Chen, Zhiqin Chen, Hang Zhou, and Hao Zhang,

"ShaDDR: Real-Time Example-Based Geometry and Texture Generation via 3D Shape Detailization and Differentiable Rendering", ACM SIGGRAPH Asia, 2023.

[arXiv | bibtex]

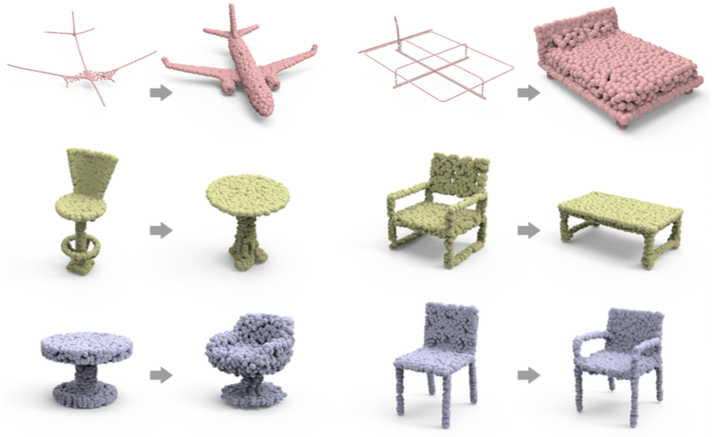

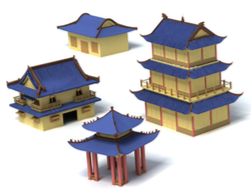

We present ShaDDR, an example-based deep generative neural network which produces a high-resolution textured 3D shape through geometry detailization and conditional texture generation applied to an input coarse voxel shape. Trained on a small set of detailed and textured exemplar shapes, our method learns to detailize the geometry via multi-resolution voxel upsampling and generate textures on voxel surfaces via differentiable rendering against exemplar texture images from a few views. The generation is realtime, taking less than 1 second to produce a 3D model with voxel resolutions up to 512^3. The generated shape preserves the overall structure of the input coarse voxel model, while the style of the generated geometric details and textures can be manipulated through learned latent codes. |

|

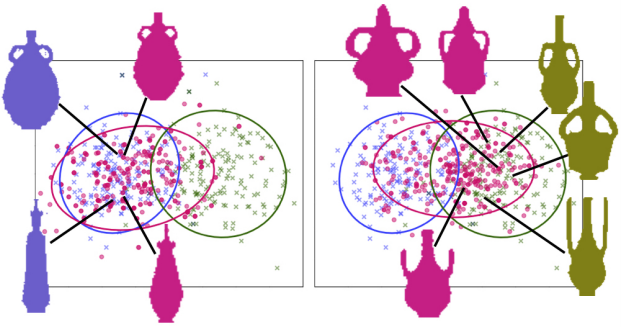

12. Jingyu Hu, Kai-Hei Hui, Zhengzhe Liu, Hao Zhang, and Chi-Wing Fu,

"CLIPXPlore: Coupled CLIP and Shape Spaces for 3D Shape Exploration", ACM SIGGRAPH Asia, 2023.

[arXiv | bibtex]

This paper presents CLIPXPlore, a new framework that leverages a vision-language model to guide the exploration of the 3D shape space. Many recent methods have been developed to encode 3D shapes into a learned latent shape space to enable generative design and modeling. Yet, existing methods lack effective exploration mechanisms, despite the rich information. To this end, we propose to leverage CLIP, a powerful pre-trained vision-language model, to aid the shape-space exploration. Our idea is threefold. First, we couple the CLIP and shape spaces by generating paired CLIP and shape codes through sketch images and training a mapper network to connect the two spaces. Second, to explore the space around a given shape, we formulate a co-optimization strategy to search for the CLIP code that better matches the geometry of the shape. Third, we design three exploration modes, binary-attribute-guided, text-guided, and sketch-guided, to locate suitable exploration trajectories in shape space and induce meaningful changes to the shape. |

|

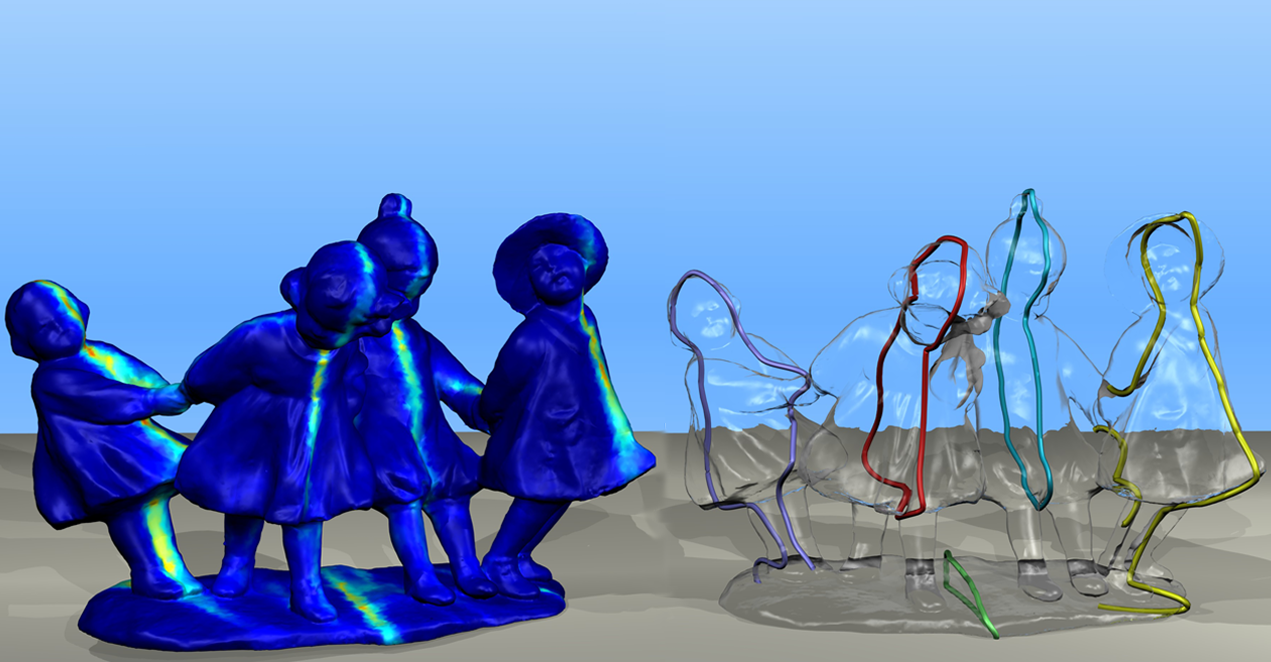

11. Zihao Yan, Fubao Su, Mingyang Wang, Ruizhen Hu, Hao Zhang, and Hui Huang,

"Interaction-Driven Active 3D Reconstruction with Object Interiors", ACM Transactions on Graphics (Special Issue of SIGGRAPH Asia), 2023.

[Project page | bibtex]

We introduce an active 3D reconstruction method which integrates visual perception, robot-object interaction, and 3D scanning to recover both the exterior and interior geometries of a target 3D object. Unlike other works in active vision which focus on optimizing camera viewpoints to better investigate the environment, the primary feature of our reconstruction is an analysis of the interactability of various parts of the target object and the ensuing part manipulation by a robot to enable scanning of occluded regions. As a result, an understanding of part articulations of the target object is obtained on top of complete geometry acquisition. Our method operates fully automatically by a Fetch robot with built-in RGBD sensors. It iterates between interaction analysis and interaction-driven reconstruction, scanning and reconstructing detected moveable parts one at a time, where both the articulated part detection and mesh reconstruction are carried out by neural networks. |

|

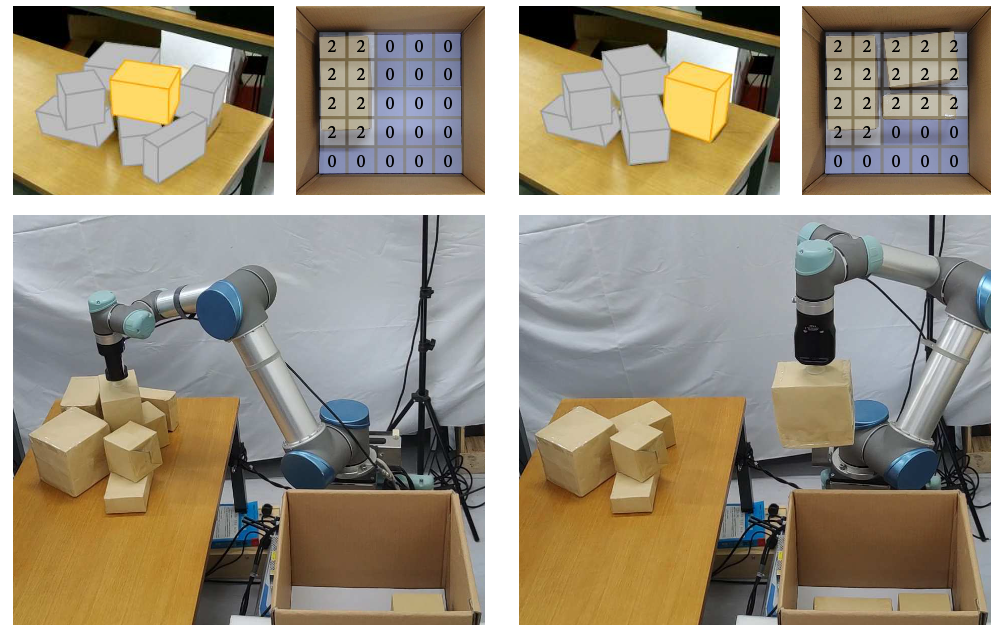

10. Juzhan Xu, Minglun Gong, Hao Zhang, Hui Huang, and Ruizhen Hu,

"Neural Packing: from Visual Sensing to Reinforcement Learning", ACM Transactions on Graphics (Special Issue of SIGGRAPH Asia), Vol. 42, No. 6, 2023.

[Project page | bibtex]

We present a complete learning framework to solve the real-world transport-and-packing (TAP) problem in 3D. It constitutes a full solution pipeline from partial observations of input objects via RGBD sensing and recognition to final box placement, via robotic motion planning, to arrive at a compact packing in a target container. The technical core of our method is a neural network for TAP, trained via reinforcement learning (RL), to solve the NP-hard combinatorial optimization problem. Our network simultaneously selects an object to pack and determines the final packing location, based on a judicious encoding of the continuously evolving states of partially observed source objects and available spaces in the target container, using separate encoders both enabled with attention mechanisms. |

|

9. Fenggen Yu, Yiming Qian, Francisca Gil-Ureta, Brian Jackson, Eric Bennett, and Hao Zhang,

"HAL3D: Hierarchical Active Learning for Fine-Grained 3D Part Labeling", ICCV, 2023.

[arXiv | bibtex]

We present the first active learning tool for fine-grained 3D part labeling, a problem which challenges even the most advanced deep learning (DL) methods due to the significant structural variations among the small and intricate parts. For the same reason, the necessary data annotation effort is tremendous, motivating approaches to minimize human involvement. Our labeling tool iteratively verifies or modifies part labels predicted by a deep neural network, with human feedback continually improving the network prediction. To effectively reduce human efforts, we develop two novel features in our tool, hierarchical and symmetry-aware active labeling. Our human-in-the-loop approach, coined HAL3D, achieves 100% accuracy (barring human errors) on any test set with pre-defined hierarchical part labels, with 80% time-saving over manual effort. |

|

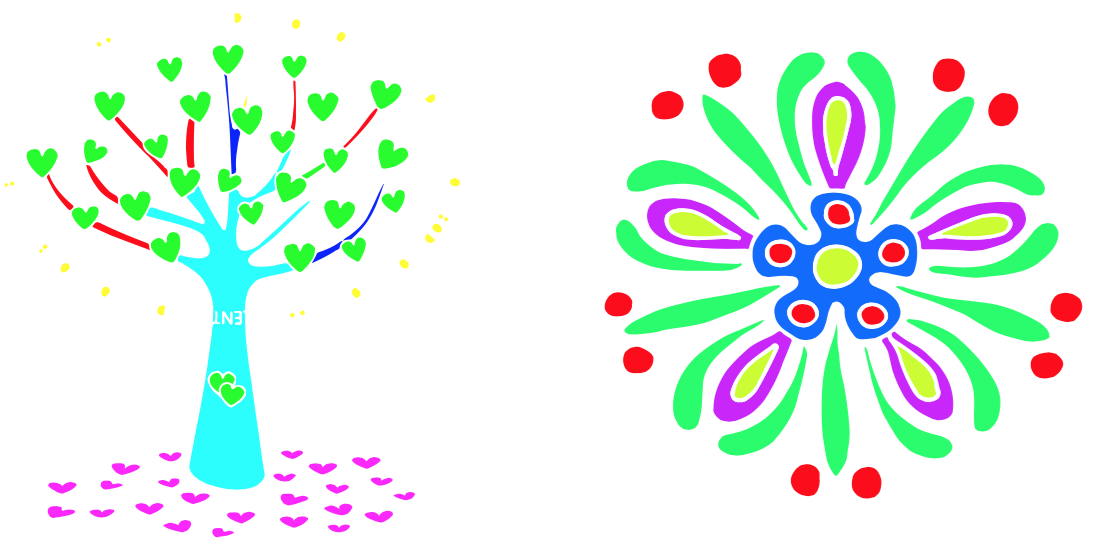

8. Maham Tanveer, Yizhi Wang,

Ali Mahdavi-Amiri,

and Hao Zhang,

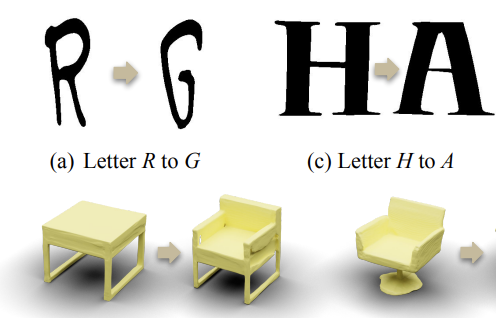

"DS-Fusion: Artistic Typography via Discriminated and Stylized Diffusion",

ICCV, 2023.

[Project page | arXiv | bibtex]

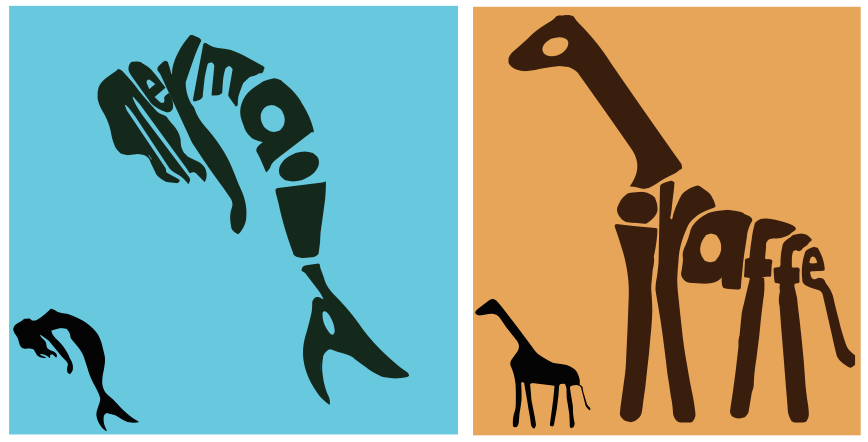

We introduce a novel method to automatically generate an artistic typography by stylizing one or more letter fonts to visually convey the semantics of an input word, while ensuring that the output remains readable. To address an assortment of challenges with our task at hand including conflicting goals (artistic stylization vs. legibility), lack of ground truth, and immense search space, our approach utilizes large language models to bridge texts and visual images for stylization and build an unsupervised generative model with a diffusion model backbone. Specifically, we employ the denoising generator in Latent Diffusion Model (LDM), with the key addition of a CNN-based discriminator to adapt the input style onto the input text. The discriminator uses rasterized images of a given letter/word font as real samples and output of the denoising generator as fake samples ... |

|

7. Yizhi Wang,

Zeyu Huang, Ariel Shamir,

Hui Huang,

Hao Zhang, and Ruizhen Hu,

"ARO-Net: Learning Implicit Fields from Anchored Radial Observations", CVPR, 2023.

[arXiv | bibtex]

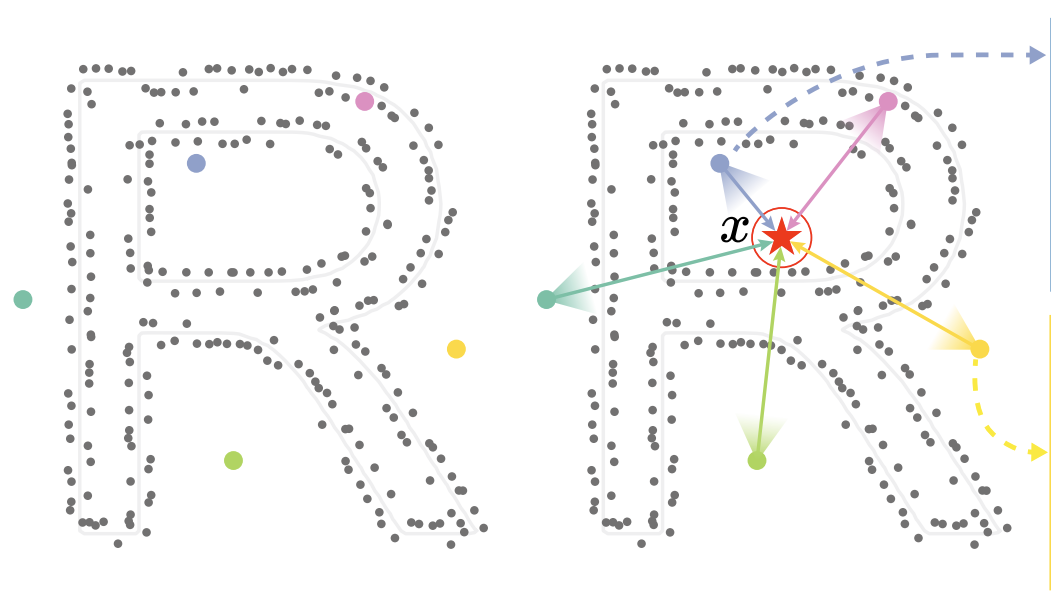

We introduce anchored radial observations (ARO), a novel shape encoding for learning neural field representation of shapes that is category-agnostic and generalizable amid significant shape variations. The main idea behind our work is to reason about shapes through partial observations from a set of viewpoints, called anchors. We develop a general and unified shape representation by employing a fixed set of anchors, via Fibonacci sampling, and designing a coordinate-based deep neural network to predict the occupancy value of a query point in space. Unlike prior neural implicit models, which use global shape features, our shape encoder operates on contextual, query-specific features ... |
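As a rough illustration of the Fibonacci sampling mentioned in the ARO-Net abstract, the sketch below places a fixed set of points nearly uniformly on a sphere using the golden angle. It assumes the anchors lie on a sphere around the shape; the function name `fibonacci_sphere`, the radius, and the anchor count are illustrative choices and are not taken from the paper.

```python
import math

def fibonacci_sphere(n, radius=1.0):
    """Return n points spread nearly uniformly over a sphere (Fibonacci lattice)."""
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    points = []
    for i in range(n):
        # z runs from just below +1 to just above -1 in equal steps.
        z = 1.0 - (2.0 * i + 1.0) / n
        # Radius of the horizontal circle at height z.
        r = math.sqrt(max(0.0, 1.0 - z * z))
        # Each successive point is rotated by the golden angle.
        theta = golden_angle * i
        points.append((radius * r * math.cos(theta),
                       radius * r * math.sin(theta),
                       radius * z))
    return points

# Hypothetical anchor count, chosen only for this example.
anchors = fibonacci_sphere(48)
```

Because the point set depends only on `n`, the same anchors can be reused for every shape, which is what makes a fixed anchor set possible.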

|

6. Akshay Gadi Patil, Supriya Gadi Patil, Manyi Li,

Matthew Fisher,

Manolis Savva, and Hao Zhang,

"Advances in Data-Driven Analysis and Synthesis of 3D Indoor Scenes",

Computer Graphics Forum (State-of-the-Art Report), 2023.

[arXiv | bibtex]

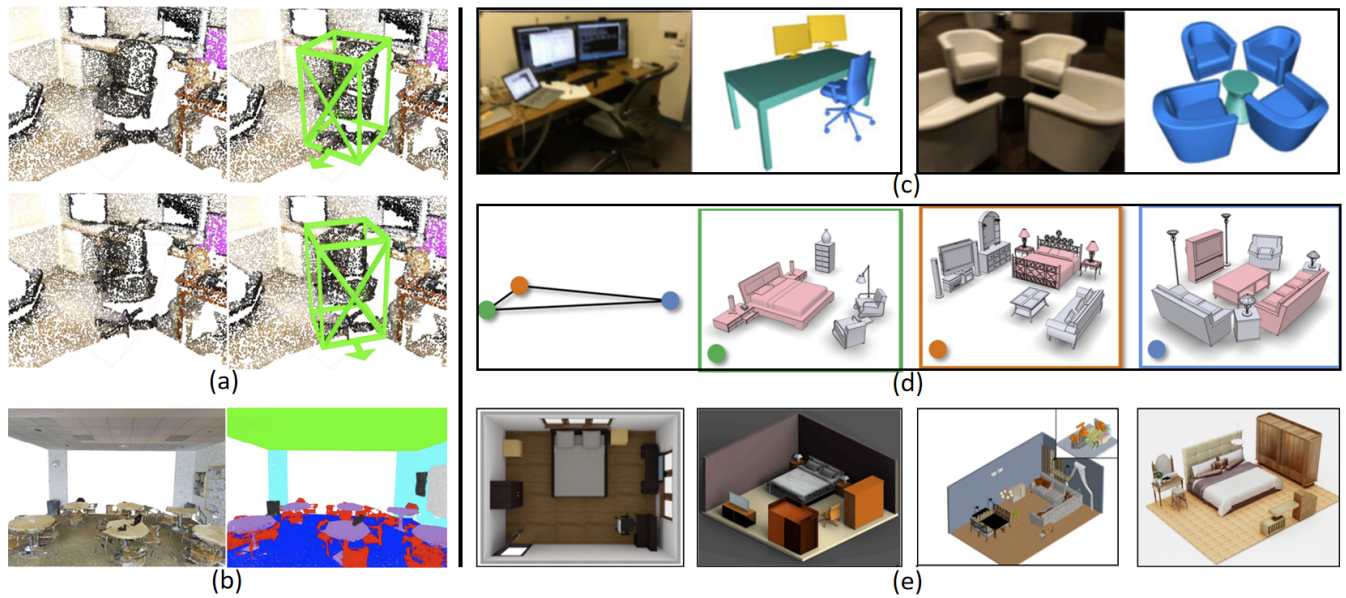

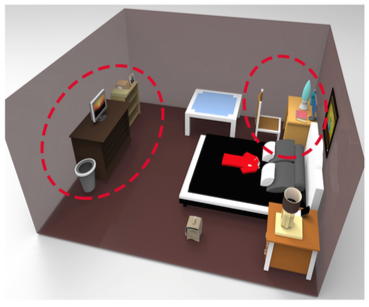

This report surveys advances in deep learning-based modeling techniques that address four different 3D indoor scene analysis tasks, as well as synthesis of 3D indoor scenes. We describe different kinds of representations for indoor scenes, various indoor scene datasets available for research in the aforementioned areas, and discuss notable works employing machine learning models for such scene modeling tasks based on these representations. Specifically, we focus on the analysis and synthesis of 3D indoor scenes. With respect to analysis, we focus on four basic scene understanding tasks – 3D object detection, 3D scene segmentation, 3D scene reconstruction and 3D scene similarity. And for synthesis, we mainly discuss neural scene synthesis works, though also highlighting model-driven methods that allow for human-centric, progressive scene synthesis ... |

|

5. Zeyu Huang, Juzhan Xu, Sisi Dai, Kai Xu, Hao Zhang,

Hui Huang,

and Ruizhen Hu,

"NIFT: Neural Interaction Field and Template for Object Manipulation", International Conference on Robotics and Automation (ICRA), 2023.

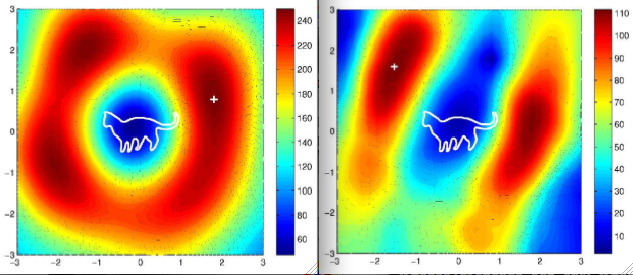

[arXiv | bibtex]

We introduce NIFT, Neural Interaction Field and Template, a descriptive and robust interaction representation of object manipulations to facilitate imitation learning. Given a few object manipulation demos, NIFT guides the generation of the interaction imitation for a new object instance by matching the Neural Interaction Template (NIT) extracted from the demos to the Neural Interaction Field (NIF) defined for the new object. Specifically, the NIF is a neural field which encodes the relationship between each spatial point and a given object, where the relative position is defined by a spherical distance function rather than occupancies or signed distances, which are commonly adopted by conventional neural fields but less informative ... |

|

4. Hang Zhou,

Rui Ma, Lingxiao Zhang,

Lin Gao,

Ali Mahdavi-Amiri,

and Hao Zhang,

"SAC-GAN: Structure-Aware Image Composition",

IEEE Trans. on Visualization and Computer Graphics (TVCG), 2023.

[arXiv | bibtex]

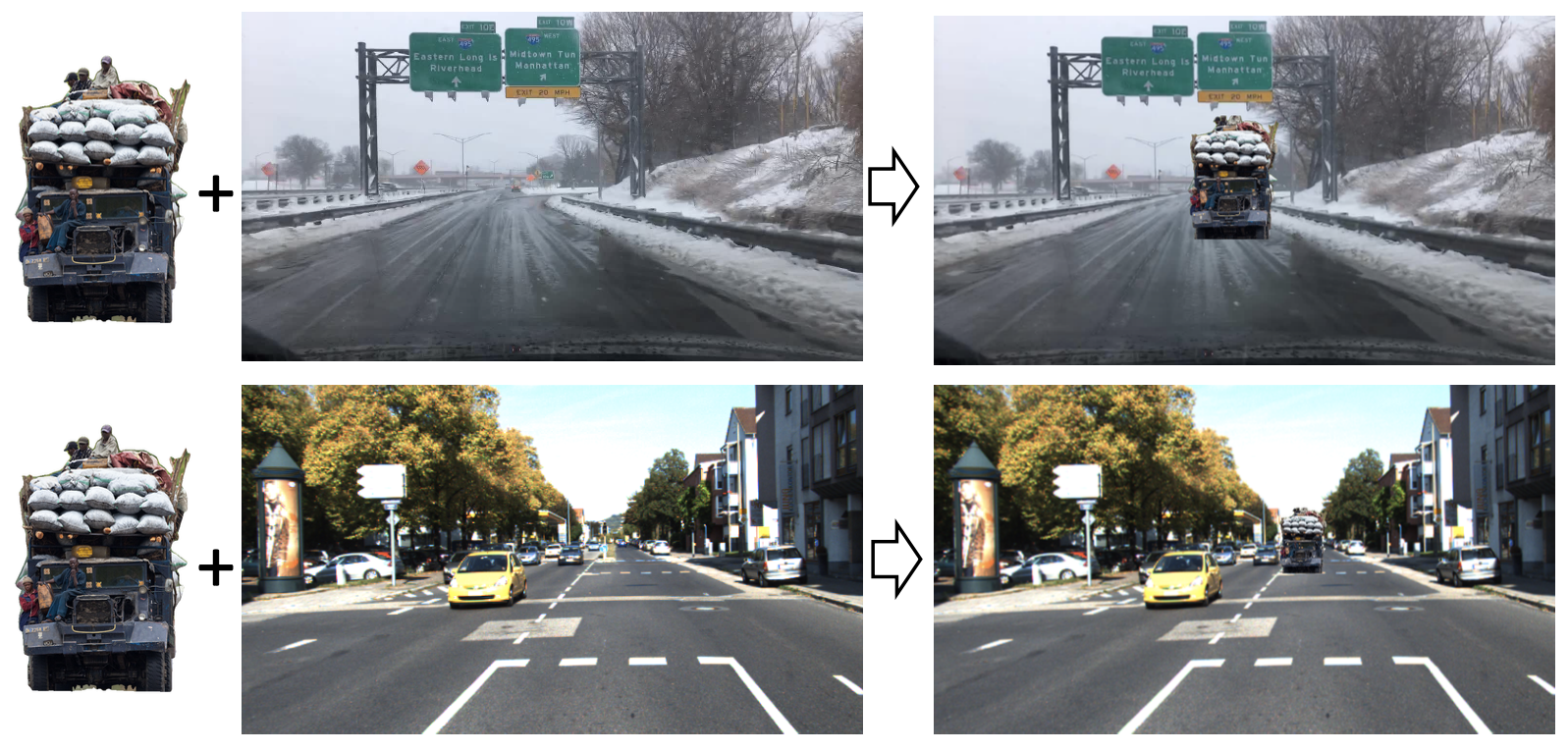

We introduce an end-to-end learning framework for image-to-image composition, aiming to seamlessly compose an object represented as a cropped patch from an object image into a background scene image. As our approach emphasizes semantic and structural coherence of the composed images, rather than their pixel-level RGB accuracies, we tailor the input and output of our network with structure-aware features and design our network losses accordingly, with ground truth established in a self-supervised setting through the object cropping. Specifically, our network takes the semantic layout features from the input scene image, features encoded from the edges and silhouette in the input object patch, as well as a latent code as inputs, and generates a 2D spatial affine transform defining the translation and scaling of the object patch. |

|

3. Liqiang Lin, Pengdi Huang, Chi-Wing Fu,

Kai Xu, Hao Zhang, and Hui Huang,

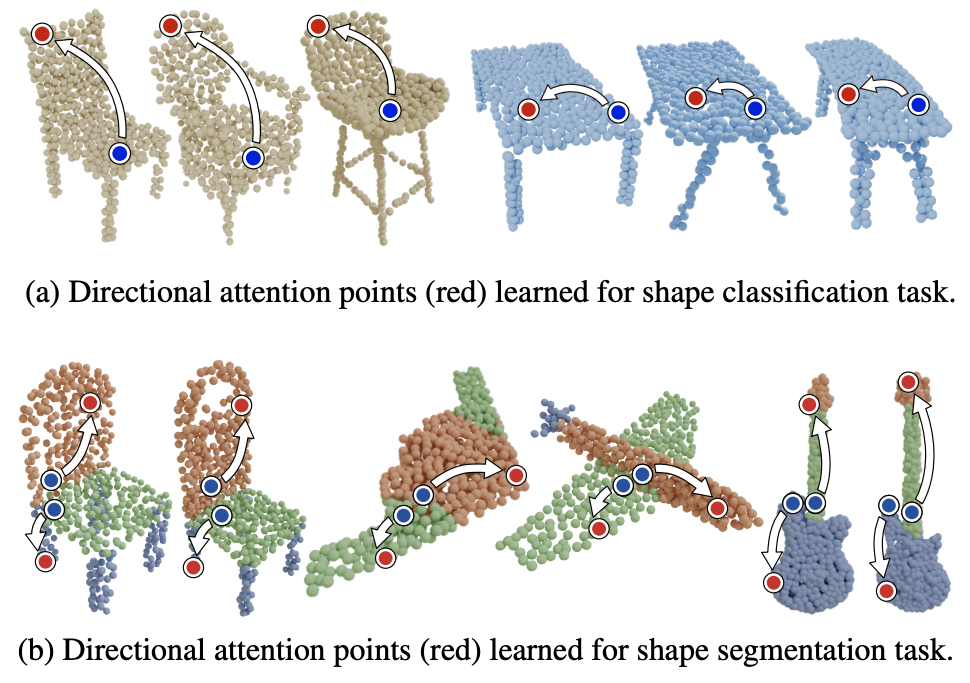

"One Point is All You Need: Directional Attention Point for Feature Learning",

Science China Information Sciences (SCIS), Vol. 66, No. 1, 2023.

[PDF | arXiv | bibtex]

We present a novel attention-based mechanism for learning enhanced point features for tasks such as point cloud classification and segmentation. Our key message is that if the right attention point is selected, then “one point is all you need”, not a sequence as in a recurrent model and not a pre-selected set as in all prior works. Also, where the attention point is should be learned, from data and specific to the task at hand. Our mechanism is characterized by a new and simple convolution, which combines the feature at an input point with the feature at its associated attention point. We call such a point a directional attention point (DAP) ... |

|

2. Zhiqin Chen, Andrea Tagliasacchi, and Hao Zhang,

"Learning Mesh Representations via Binary Space Partitioning Tree Networks", IEEE Trans. on Pattern Analysis and Machine Intelligence (PAMI) (invited and extended article from CVPR 2020 as Best Student Paper Award winner), Vol. 45, No. 4, pp. 4870-4881, 2023.

[arXiv | Project page (code+video) | bibtex]

Polygonal meshes are ubiquitous, but have only played a relatively minor role in the deep learning revolution. State-of-the-art neural generative models for 3D shapes learn implicit functions and generate meshes via expensive iso-surfacing. We overcome these challenges by employing a classical spatial data structure from graphics, Binary Space Partitioning (BSP), to facilitate 3D learning. The core operation of BSP involves recursive subdivision of 3D space to obtain convex sets. By exploiting this property, we devise BSP-Net, a network that learns to represent a 3D shape via convex decomposition without supervision. The network is trained to reconstruct a shape using a set of convexes obtained from a BSP-tree built over a set of planes, where the planes and convexes are both defined by learned network weights. |
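The convex decomposition described in the BSP-Net abstract can be illustrated with a hand-written inside/outside test: a convex is the intersection of half-spaces, and a shape is the union of convexes. In this sketch the planes are fixed by hand, whereas in the paper they are defined by learned network weights; the helper names (`box_planes`, `inside_convex`, `inside_shape`) are hypothetical.

```python
def signed_value(plane, point):
    """Evaluate a*x + b*y + c*z + d; values <= 0 lie in the plane's half-space."""
    a, b, c, d = plane
    x, y, z = point
    return a * x + b * y + c * z + d

def inside_convex(planes, point):
    """A point is inside a convex if it lies in every half-space (max <= 0)."""
    return max(signed_value(p, point) for p in planes) <= 0.0

def inside_shape(convexes, point):
    """A shape is the union of its convexes."""
    return any(inside_convex(planes, point) for planes in convexes)

def box_planes(lo, hi):
    """Six half-spaces bounding an axis-aligned box (illustrative helper)."""
    planes = []
    for axis in range(3):
        normal = [0.0, 0.0, 0.0]
        normal[axis] = -1.0
        planes.append((*normal, lo[axis]))   # -x + lo <= 0, i.e. x >= lo
        normal[axis] = 1.0
        planes.append((*normal, -hi[axis]))  # x - hi <= 0, i.e. x <= hi
    return planes

# An L-shaped solid built from two boxes.
l_shape = [box_planes((0, 0, 0), (1, 1, 1)), box_planes((1, 0, 0), (2, 1, 2))]
```

Since every convex is bounded by planes, its surface is already polygonal, so no iso-surfacing step is needed to obtain a mesh.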

|

1. Tong Wu, Lin Gao, Lingxiao Zhang,

Yu-Kun Lai, and Hao Zhang,

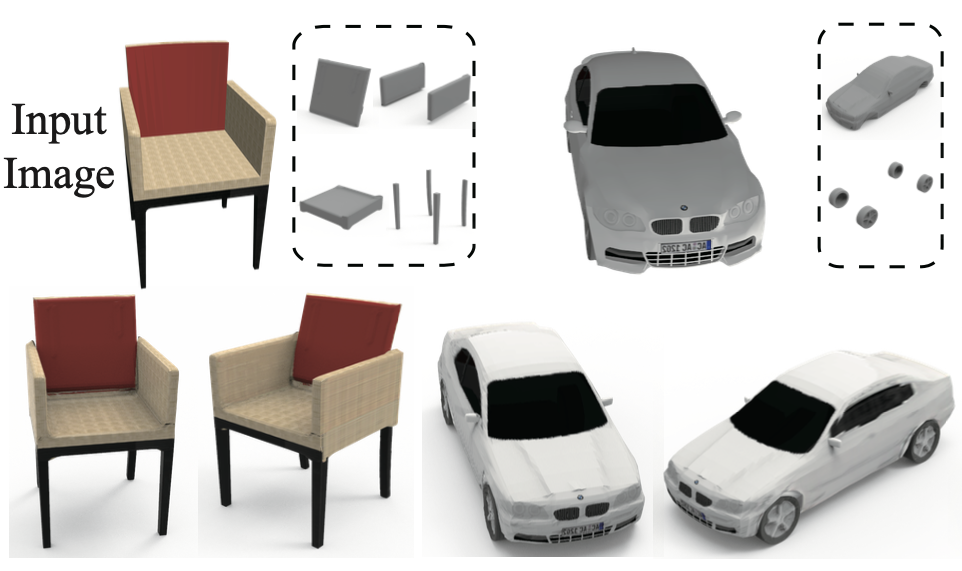

"STAR-TM: STructure Aware Reconstruction of Textured Mesh from Single Image",

IEEE Trans. on Pattern Analysis and Machine Intelligence (PAMI), 2023.

[arXiv | bibtex]

We present a novel method for single-view 3D reconstruction of textured meshes, with a focus on addressing the primary challenge surrounding texture inference and transfer. Our key observation is that learning textured reconstruction in a structure-aware and globally consistent manner is effective in handling the severe ill-posedness of the texturing problem and significant variations in object pose and texture details. Specifically, we perform structured mesh reconstruction, via a retrieval-and-assembly approach, to produce a set of genus-zero parts parameterized by deformable boxes and endowed with semantic information. For texturing, we first transfer visible colors from the input image onto the unified UV texture space of the deformable boxes. Then we combine a learned transformer model for per-part texture completion with a global consistency loss to optimize inter-part texture consistency. Our texture completion model operates in a VQ-VAE embedding space and is trained end-to-end, with the transformer training enhanced with retrieved texture instances to improve texture completion performance amid significant occlusion. |

|

6. Yilin Liu, Liqiang Lin, Ke Xie,

Chi-Wing Fu,

Hao Zhang, and Hui Huang,

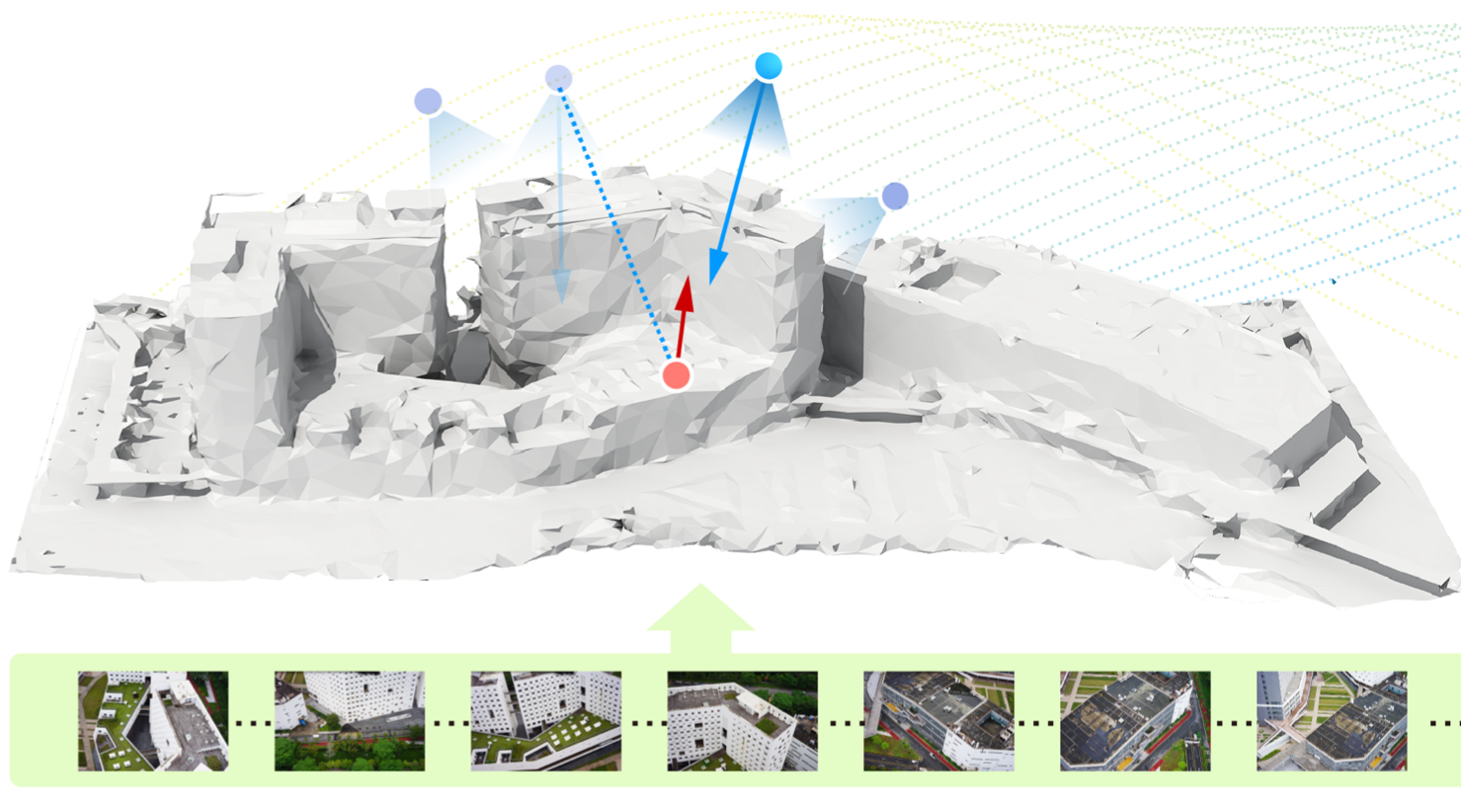

"Learning Reconstructability for Drone Aerial Path Planning", ACM Transactions on Graphics (Special Issue of SIGGRAPH Asia), 2022.

[Project | arXiv | bibtex]

We introduce the first learning-based reconstructability predictor to improve view and path planning for large-scale 3D urban scene acquisition using unmanned drones. In contrast to previous heuristic approaches, our method learns a model that explicitly predicts how well a 3D urban scene will be reconstructed from a set of viewpoints. To make such a model trainable and simultaneously applicable to drone path planning, we simulate the proxy-based 3D scene reconstruction during training to set up the prediction. Specifically, the neural network we design is trained to predict the scene reconstructability as a function of the proxy geometry, a set of viewpoints, and optionally a series of scene images acquired in flight ... |

|

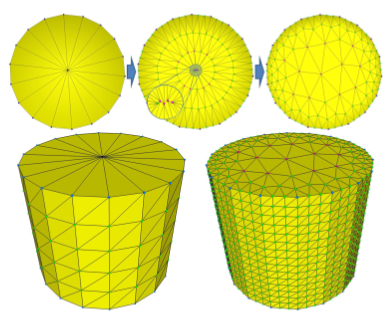

5. Zhiqin Chen,

Andrea Tagliasacchi,

Thomas Funkhouser,

and Hao Zhang,

"Neural Dual Contouring", ACM Transactions on Graphics (Special Issue of SIGGRAPH), 2022.

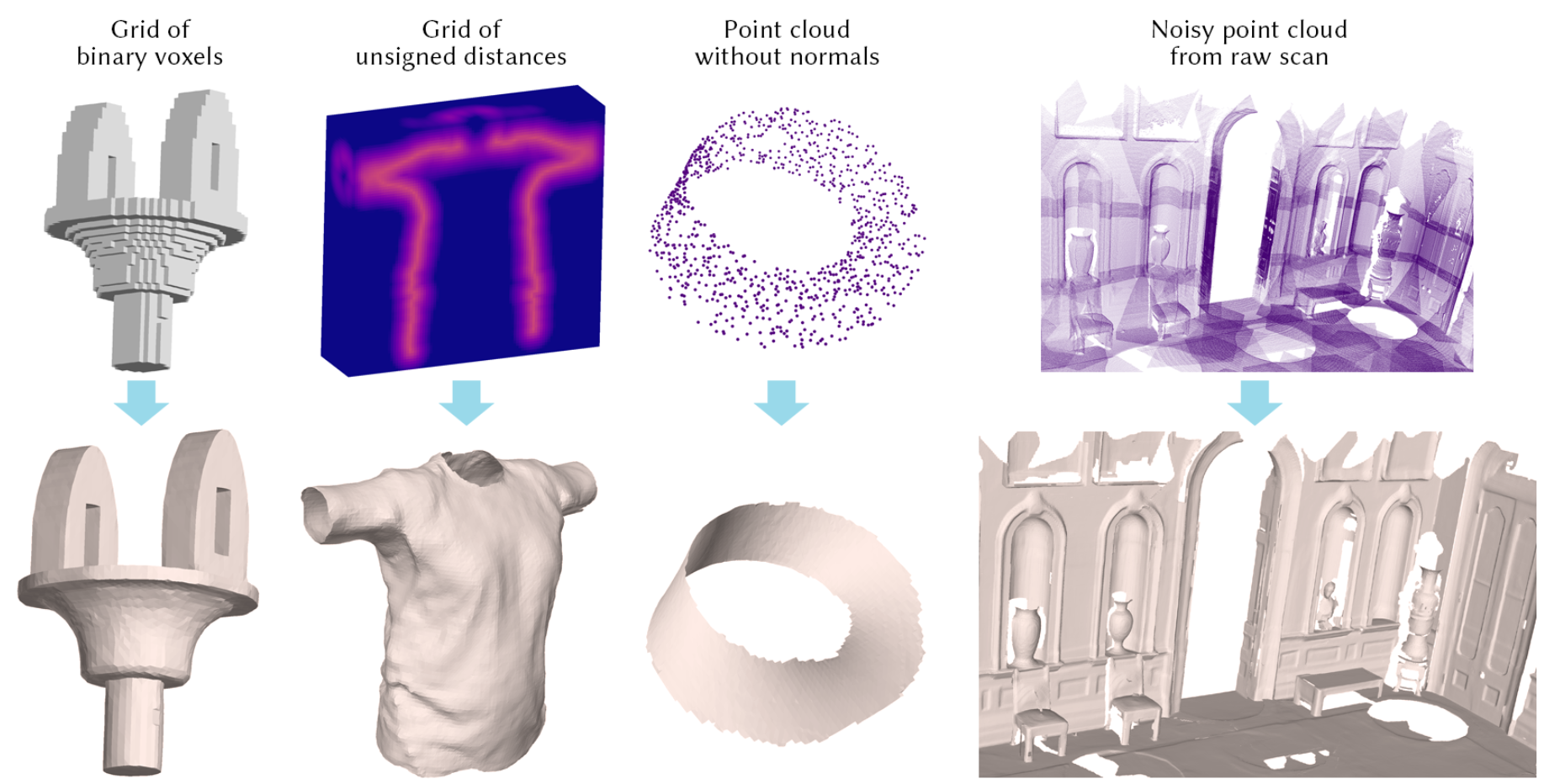

[arXiv | bibtex]

We introduce neural dual contouring (NDC), a new data-driven approach to mesh reconstruction based on dual contouring (DC). Like traditional DC, it produces exactly one vertex per grid cell and one quad for each grid edge intersection, a natural and efficient structure for reproducing sharp features. However, rather than computing vertex locations and edge crossings with hand-crafted functions that depend directly on difficult-to-obtain surface gradients, NDC uses a neural network to predict them. As a result, NDC can be trained to produce meshes from signed or unsigned distance fields, binary voxel grids, or point clouds (with or without normals); and it can produce open surfaces in cases where the input represents a sheet or partial surface. |

|

4. Fenggen Yu,

Zhiqin Chen,

Manyi Li, Aditya Sanghi, Hooman Shayani,

Ali Mahdavi-Amiri,

and Hao Zhang,

"CAPRI-Net: Learning Compact CAD Shapes with Adaptive Primitive Assembly", CVPR, 2022.

[arXiv | bibtex]

We introduce CAPRI-Net, a neural network for learning compact and interpretable implicit representations of 3D computer-aided design (CAD) models, in the form of adaptive primitive assemblies. Our network takes an input 3D shape that can be provided as a point cloud or voxel grids, and reconstructs it by a compact assembly of quadric surface primitives via constructive solid geometry (CSG) operations. The network is self-supervised with a reconstruction loss, leading to faithful 3D reconstructions with sharp edges and plausible CSG trees, without any ground-truth shape assemblies. |

|

3. Qimin Chen, Johannes Merz,

Aditya Sanghi, Hooman Shayani,

Ali Mahdavi-Amiri,

and Hao Zhang,

"UNIST: Unpaired Neural Implicit Shape Translation Network", CVPR, 2022.

[arXiv | bibtex]

We introduce UNIST, the first deep neural implicit model for general-purpose, unpaired shape-to-shape translation, in both 2D and 3D domains. Our model is built on autoencoding implicit fields, rather than point clouds, which represent the state of the art. Furthermore, our translation network is trained to perform the task over a latent grid representation which combines the merits of both latent-space processing and position awareness, to not only enable drastic shape transforms but also well preserve spatial features and fine local details for natural shape translations. |

|

2. Chengjie Niu,

Manyi Li,

Kai Xu,

and Hao Zhang,

"RIM-Net: Recursive Implicit Fields for Unsupervised Learning of Hierarchical Shape Structures", CVPR, 2022.

[arXiv | bibtex]

We introduce RIM-Net, a neural network which learns recursive implicit fields for unsupervised inference of hierarchical shape structures. Our network recursively decomposes an input 3D shape into two parts, resulting in a binary tree hierarchy. Each level of the tree corresponds to an assembly of shape parts, represented as implicit functions, to reconstruct the input shape. At each node of the tree, simultaneous feature decoding and shape decomposition are carried out by their respective feature and part decoders, with weight sharing across the same hierarchy level ... |

|

1. Yanran Guan, Han Liu, Kun Liu, Kangxue Yin,

Ruizhen Hu,

Oliver van Kaick,

Yan Zhang, Ersin Yumer, Nathan Carr, Radomir Mech, and

Hao Zhang,

"FAME: 3D Shape Generation via Functionality-Aware Model Evolution",

IEEE Trans. on Visualization and Computer Graphics (TVCG), Vol. 28, No. 4, pp. 1758-1772, 2022.

[arXiv | bibtex]

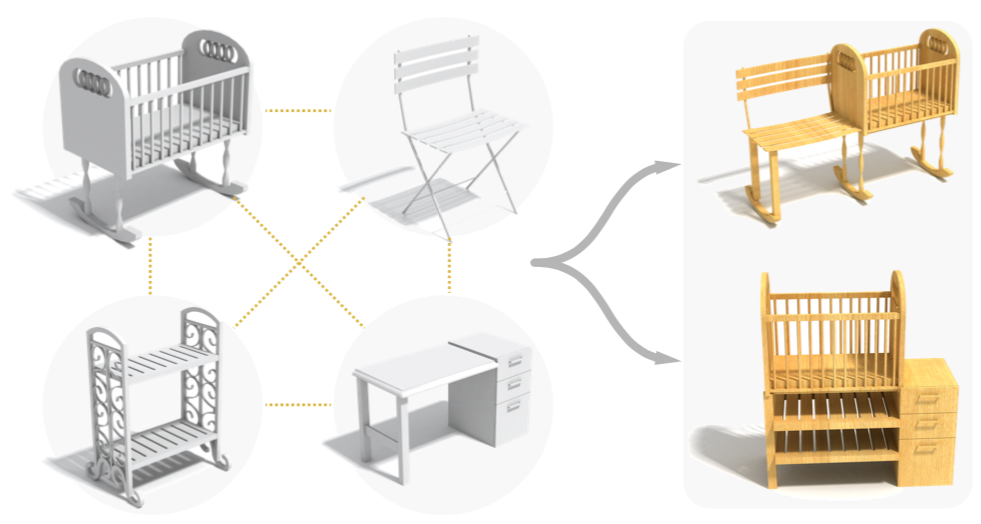

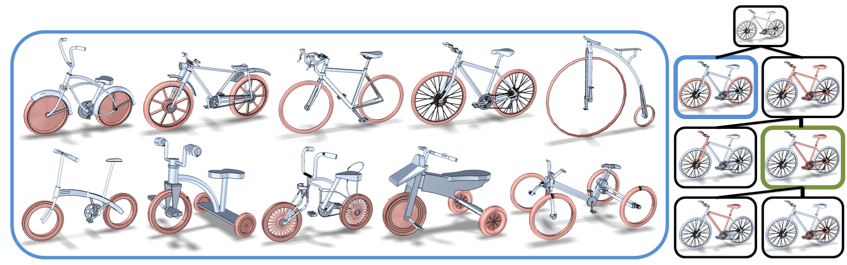

We introduce a modeling tool which can evolve a set of 3D objects in a functionality-aware manner. Our goal is for the evolution to generate large and diverse sets of plausible 3D objects for data augmentation, constrained modeling, as well as open-ended exploration to possibly inspire new designs. Starting with an initial population of 3D objects belonging to one or more functional categories, we evolve the shapes through part re-combination to produce generations of hybrids or crossbreeds between parents from the heterogeneous shape collection ... |

|

11. Zhiqin Chen and

Hao Zhang,

"Neural Marching Cubes", ACM Transactions on Graphics (Special Issue of SIGGRAPH Asia), Vol. 40, No. 6, 2021.

[arXiv | code | bibtex]

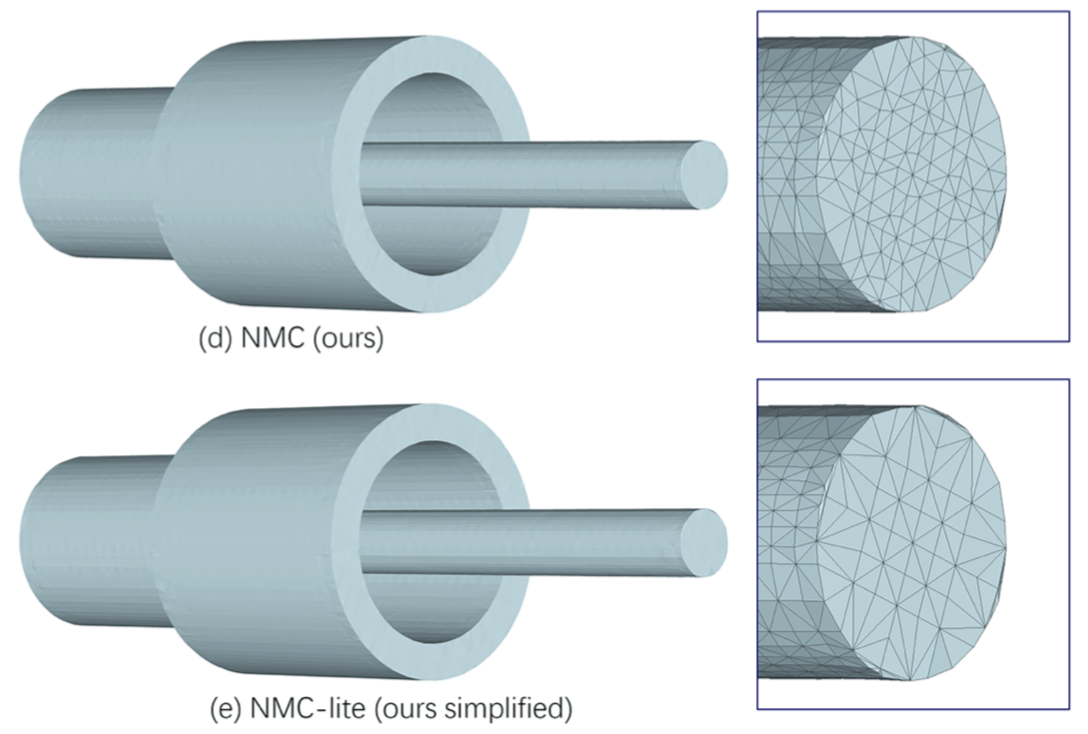

We introduce Neural Marching Cubes (NMC), a data-driven approach for extracting a triangle mesh from a discretized implicit field. We re-cast MC from a deep learning perspective, by designing tessellation templates more apt at preserving geometric features, and learning the vertex positions and mesh topologies from training meshes, to account for contextual information from nearby cubes. We develop a compact per-cube parameterization to represent the output triangle mesh, while being compatible with neural processing, so that a simple 3D convolutional network can be employed for the training. We evaluate our neural MC approach by quantitative and qualitative comparisons to all well-known MC variants, demonstrating its superiority in faithful reconstruction of sharp features and mesh topology. |

|

10. Jiongchao Jin, Arezou Fatemi (equal contribution),

Wallace Lira,

Fenggen Yu,

Biao Leng, Rui Ma,

Ali Mahdavi-Amiri,

and Hao Zhang,

"RaidaR: A Rich Annotated Image Dataset of Rainy Street Scenes",

Second ICCV Workshop on Autonomous Vehicle Vision (AVVision), 2021.

[dataset | arXiv | bibtex]

We introduce RaidaR, a rich annotated image dataset of rainy street scenes, to support autonomous driving research. The new dataset contains the largest number of rainy images (58,542) to date, 5,000 of which provide semantic segmentations and 3,658 provide object instance segmentations. The RaidaR images cover a wide range of realistic rain-induced artifacts, including fog, droplets, and road reflections, which can effectively augment existing street scene datasets to improve data-driven machine perception during rainy weather. |

|

9. Lin Gao,

Tong Wu, Yu-Jie Yuan, Ming-Xian Lin, Yu-Kun Lai, and Hao Zhang,

"TM-NET: Deep Generative Networks for Textured Meshes", ACM Transactions on Graphics (Special Issue of SIGGRAPH Asia), Vol. 40, No. 6, 2021.

[arXiv | project page | bibtex]

We introduce TM-NET, a novel deep generative model for synthesizing textured meshes in a part-aware manner. Once trained, the network can generate novel textured meshes from scratch or predict textures for a given 3D mesh, without image guidance. Plausible and diverse textures can be generated for the same mesh part, while texture compatibility between parts in the same shape is achieved via conditional generation. Specifically, our method produces texture maps for individual shape parts, each as a deformable box, leading to a natural UV map with minimal distortion. The network separately embeds part geometry (via a PartVAE) and part texture (via a TextureVAE) into their respective latent spaces ... |

|

8. Han Zhang, Yusong Yao, Ke Xie, Chi-Wing Fu,

Hao Zhang, and Hui Huang,

"Continuous Aerial Path Planning for 3D Urban Scene Reconstruction", ACM Transactions on Graphics (Special Issue of SIGGRAPH Asia), Vol. 40, No. 6, 2021.

[Project page | bibtex]

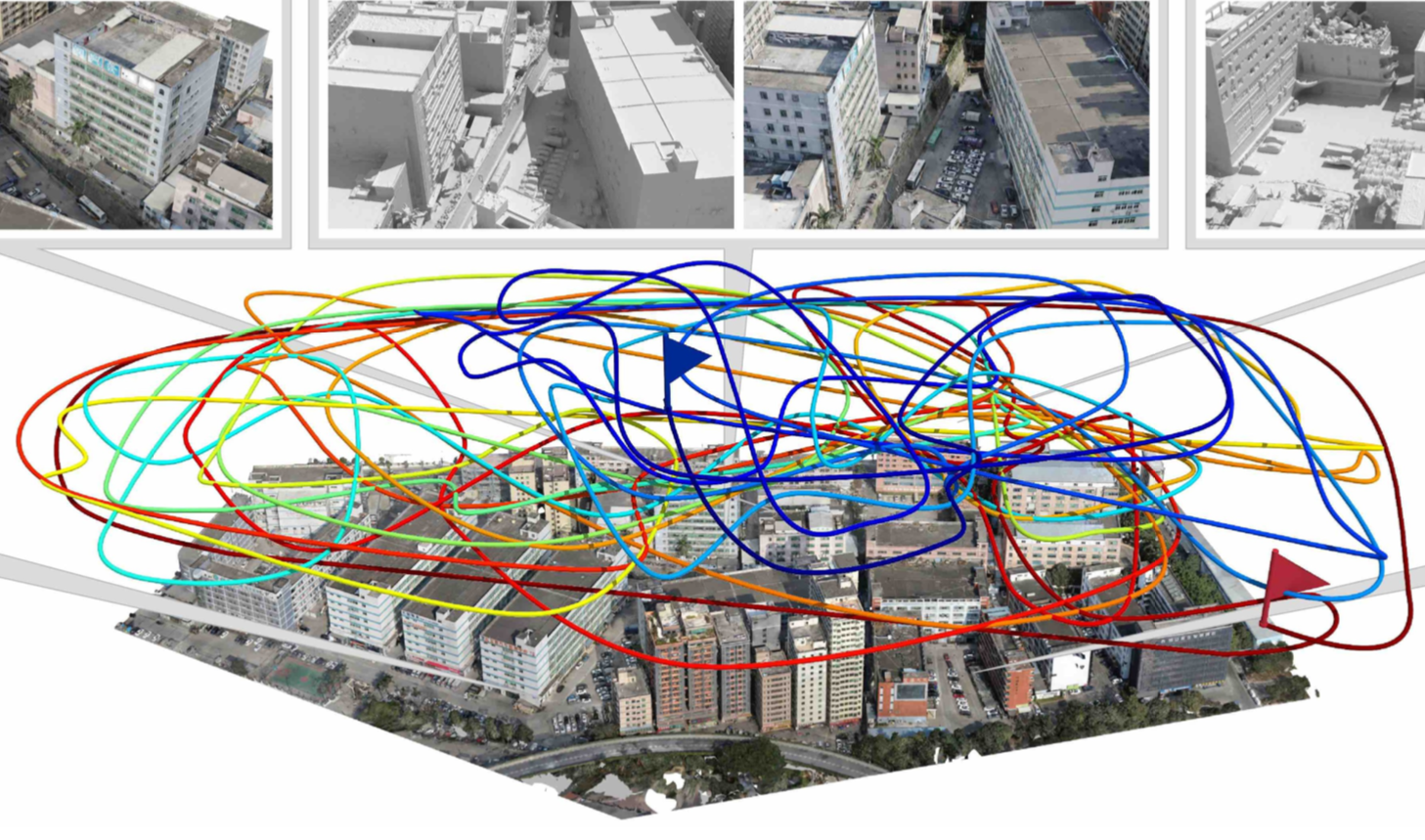

We introduce a path-oriented drone trajectory planning algorithm, which performs continuous image acquisition along an aerial path, aiming to optimize both the scene reconstruction quality and path quality. Specifically, our method takes as input a rough 3D scene proxy and produces a drone trajectory and image capturing setup, which efficiently yields a high-quality reconstruction of the 3D scene based on three optimization objectives: one to maximize the amount of 3D scene information that can be acquired along the entirety of the trajectory, another one to optimize the scene capturing efficiency by maximizing the scene information that can be acquired per unit length along the aerial path, and the last one to minimize the total turning angles along the aerial path, so as to reduce the number of sharp turns. |
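The third objective in the abstract above, the total turning angle along the aerial path, can be made concrete for a piecewise-linear path. The sketch below is only one way to measure it, assuming the path is given as a list of 3D waypoints; the paper's continuous trajectory formulation may differ, and `total_turning_angle` is a hypothetical name.

```python
import math

def total_turning_angle(path):
    """Sum of angles (radians) between consecutive segments of a polyline."""
    total = 0.0
    for p0, p1, p2 in zip(path, path[1:], path[2:]):
        u = [b - a for a, b in zip(p0, p1)]  # incoming segment direction
        v = [b - a for a, b in zip(p1, p2)]  # outgoing segment direction
        norm_u = math.sqrt(sum(c * c for c in u))
        norm_v = math.sqrt(sum(c * c for c in v))
        if norm_u == 0.0 or norm_v == 0.0:
            continue  # skip repeated waypoints
        cos_angle = sum(a * b for a, b in zip(u, v)) / (norm_u * norm_v)
        # Clamp to guard against floating-point drift outside [-1, 1].
        total += math.acos(max(-1.0, min(1.0, cos_angle)))
    return total

straight = [(0, 0, 0), (1, 0, 0), (2, 0, 0)]
corner = [(0, 0, 0), (1, 0, 0), (1, 1, 0)]
```

A straight path scores zero and each right-angle corner adds pi/2, so minimizing this quantity penalizes sharp turns.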

|

7. Huan Fu, Bowen Cai, Lin Gao,

Lingxiao Zhang, Cao Li, Zengqi Xun, Chengyue Sun, Yiyun Fei, Yu Zheng, Ying Li, Yi Liu, Peng Liu, Lin Ma, Le Weng,

Xiaohang Hu, Xin Ma, Qian Qian, Rongfei Jia, Binqiang Zhao,

and Hao Zhang,

"3D-FRONT: 3D Furnished Rooms with layOuts and semaNTics", ICCV, 2021.

[arXiv | bibtex]

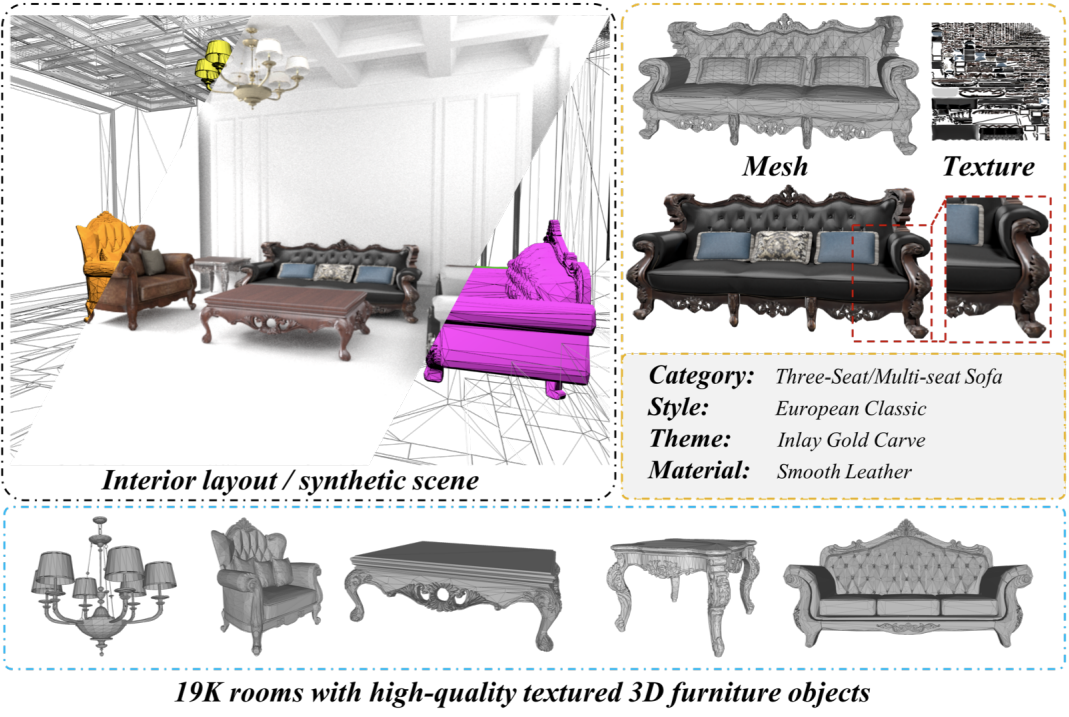

We introduce 3D-FRONT (3D Furnished Rooms with layOuts and semaNTics), a new, large-scale, and comprehensive repository of synthetic indoor scenes highlighted by professionally designed layouts and a large number of rooms populated by high-quality textured 3D models with style compatibility. From layout semantics down to texture details of individual objects, our dataset is freely available to the academic community and beyond. Currently, 3D-FRONT contains 18,797 rooms diversely furnished by 3D objects, far surpassing all publicly available scene datasets. In addition, the 7,302 furniture objects all come with high-quality textures ... |

|

6. Rinon Gal, Amit Bermano, Hao Zhang, and Daniel Cohen-Or,

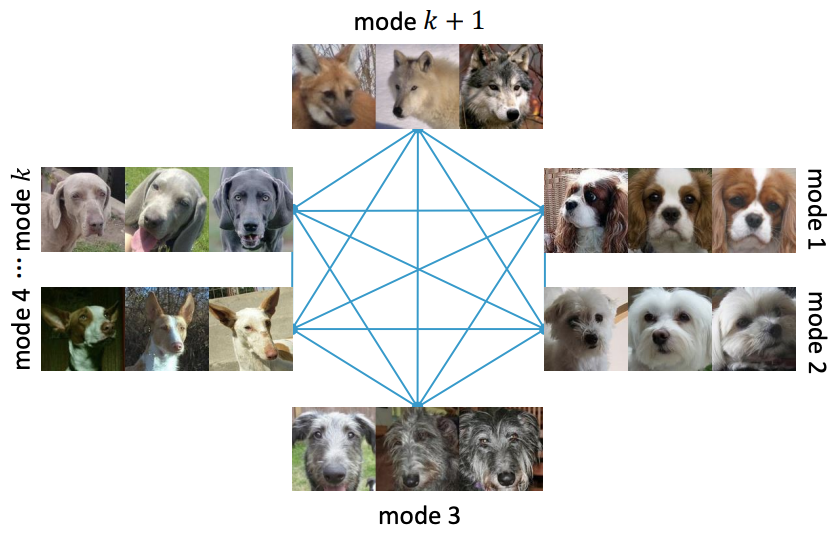

"MRGAN: Multi-Rooted 3D Shape Generation with Unsupervised Part Disentanglement",

ICCV Workshop on Structural and Compositional Learning on 3D Data (StruCo3D), 2021.

[arXiv | bibtex]

We present MRGAN, a multi-rooted adversarial network which generates part-disentangled 3D point-cloud shapes without part-based shape supervision. The network fuses multiple branches of tree-structured graph convolution layers which produce point clouds, with learnable constant inputs at the tree roots. Each branch learns to grow a different shape part, offering control over the shape generation at the part level. Our network encourages disentangled generation of semantic parts via two key ingredients: a root-mixing training strategy which helps decorrelate the different branches to facilitate disentanglement, and a set of loss terms designed with part disentanglement and shape semantics in mind. |

|

5. Manyi Li and Hao Zhang,

"D^2IM-Net: Learning Detail Disentangled Implicit Fields from Single Images", CVPR, 2021.

[arXiv | bibtex]

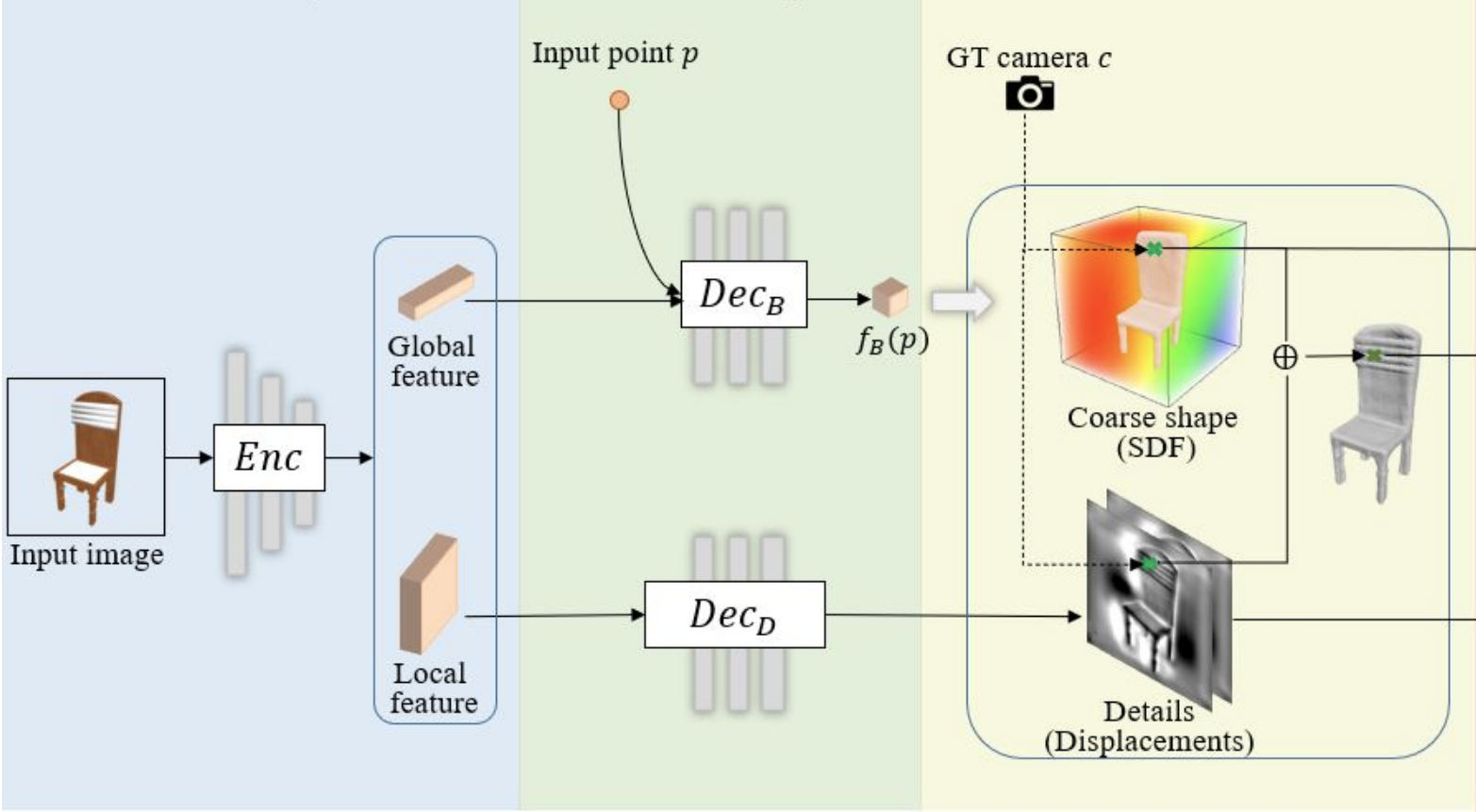

We present the first single-view 3D reconstruction network aimed at recovering geometric details from an input image which encompass both topological shape structures and surface features. Our key idea is to train the network to learn a detail disentangled reconstruction consisting of two functions, one implicit field representing the coarse 3D shape and the other capturing the details. Given an input image, our network, coined D2IM-Net, encodes it into global and local features which are respectively fed into two decoders. The base decoder uses the global features to reconstruct a coarse implicit field, while the detail decoder reconstructs, from the local features, two displacement maps, defined over the front and back sides of the captured object. The final 3D reconstruction is a fusion between the base shape and the displacement maps, with three losses enforcing the recovery of coarse shape, overall structure, and surface details via a novel Laplacian term. |

|

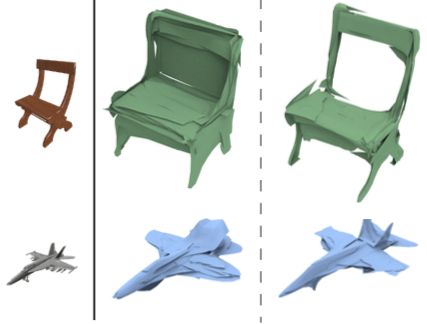

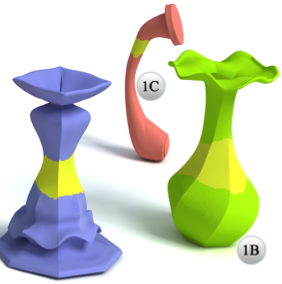

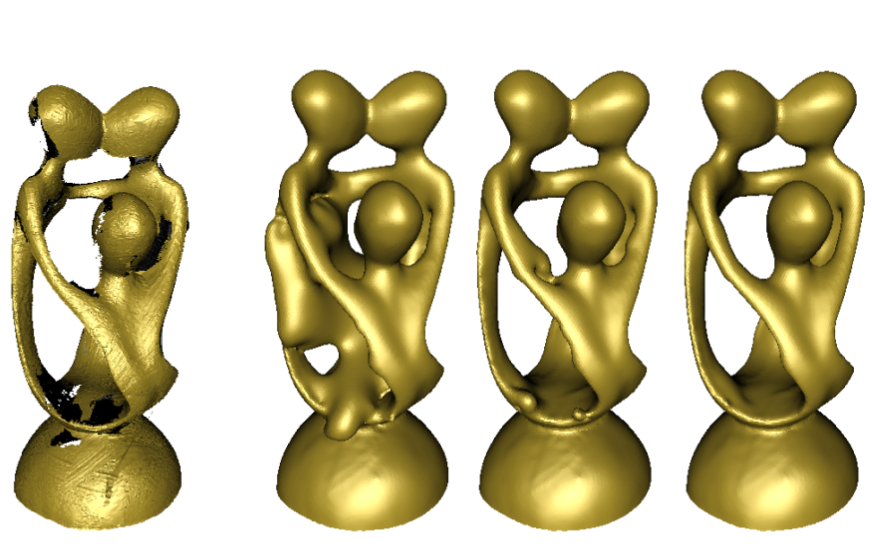

4. Zhiqin Chen,

Vladimir Kim,

Matthew Fisher,

Noam Aigerman, Hao Zhang, and

Siddhartha Chaudhuri,

"DECOR-GAN: 3D Shape Detailization by Conditional Refinement", CVPR (oral), 2021.

[arXiv | bibtex]

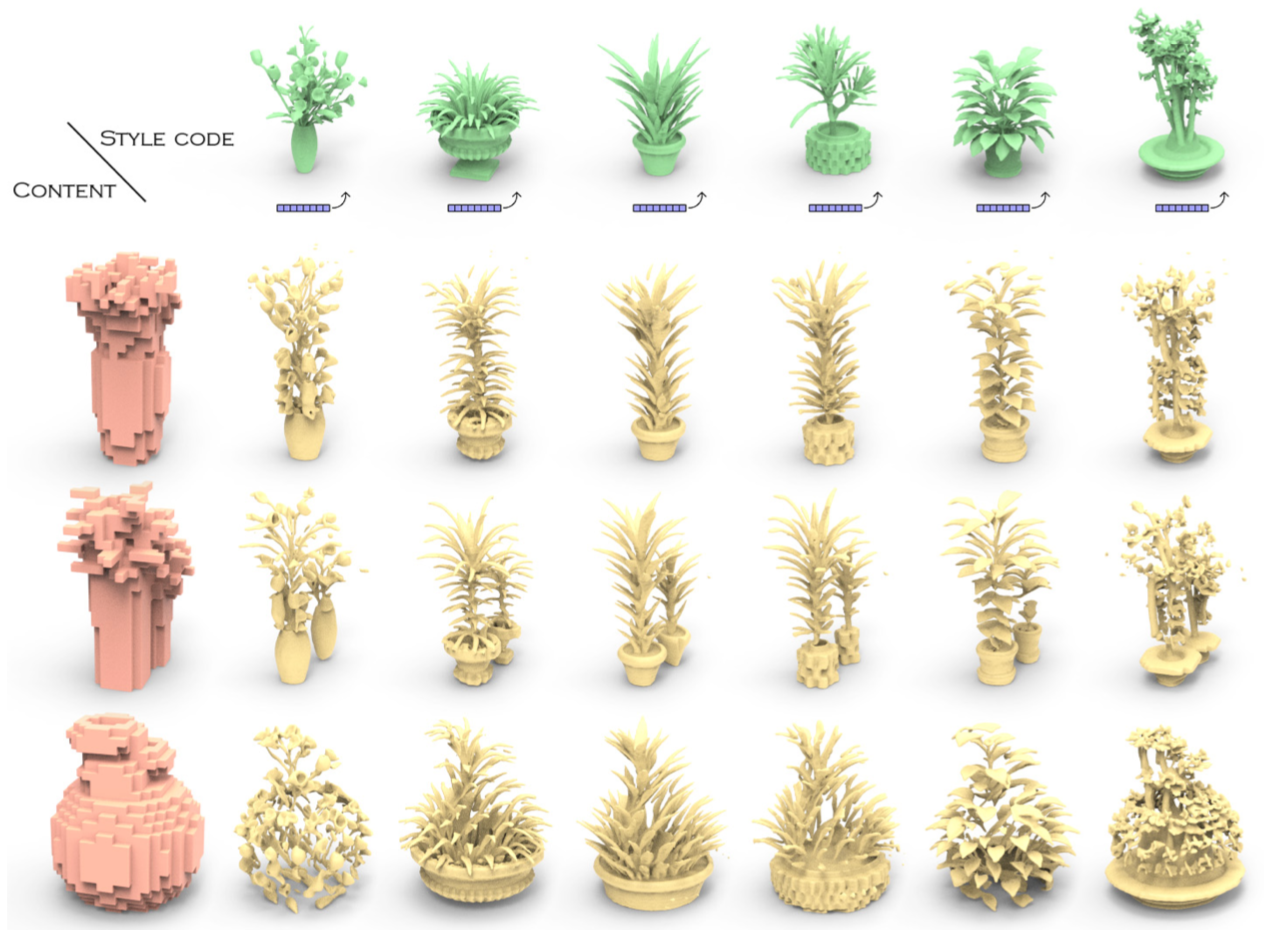

We introduce a deep generative network for 3D shape detailization, akin to stylization with the style being geometric details. We address the challenge of creating large varieties of high-resolution and detailed 3D geometry from a small set of exemplars by treating the problem as that of geometric detail transfer. Given a low-resolution coarse voxel shape, our network refines it, via voxel upsampling, into a higher-resolution shape enriched with geometric details. The output shape preserves the overall structure (or content) of the input, while its detail generation is conditioned on an input "style code" corresponding to a detailed exemplar. |

|

3. Akshay Gadi Patil, Manyi Li,

Matthew Fisher,

Manolis Savva, and Hao Zhang,

"LayoutGMN: Neural Graph Matching for Structural Layout Similarity", CVPR, 2021.

[arXiv | bibtex]

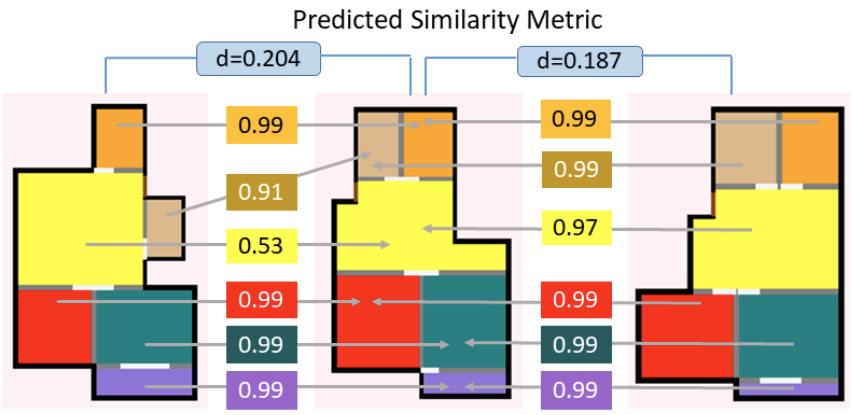

We present a deep neural network to predict structural similarity between 2D layouts by leveraging Graph Matching Networks (GMN). Our network, coined LayoutGMN, learns the layout metric via neural graph matching, using an attention-based GMN designed under a triplet network setting. To train our network, we utilize weak labels obtained by pixel-wise Intersection-over-Union (IoUs) to define the triplet loss. Importantly, LayoutGMN is built with a structural bias which can effectively compensate for the lack of structure awareness in IoUs. |
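To make the weak labels in the LayoutGMN abstract concrete, the sketch below rasterizes two layouts given as axis-aligned boxes and computes their pixel-wise IoU, then uses the IoU to decide which of two candidates serves as the positive in a triplet. It ignores semantic element classes and any thresholds used in the paper; all function names and the grid size are assumptions made for illustration.

```python
def rasterize(boxes, width, height):
    """Mark every pixel covered by any box (x0, y0, x1, y1), end-exclusive."""
    grid = [[False] * width for _ in range(height)]
    for x0, y0, x1, y1 in boxes:
        for y in range(y0, y1):
            for x in range(x0, x1):
                grid[y][x] = True
    return grid

def pixel_iou(a, b):
    """Intersection-over-Union of two boolean grids of equal size."""
    inter = union = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            inter += pa and pb
            union += pa or pb
    return inter / union if union else 1.0

def weak_triplet(anchor, cand_1, cand_2):
    """Order two candidates as (positive, negative) by IoU with the anchor."""
    if pixel_iou(anchor, cand_1) >= pixel_iou(anchor, cand_2):
        return cand_1, cand_2
    return cand_2, cand_1

layout_a = rasterize([(0, 0, 4, 4)], 8, 8)
layout_b = rasterize([(2, 0, 6, 4)], 8, 8)
layout_c = rasterize([(4, 4, 8, 8)], 8, 8)
```

Note that IoU only compares covered pixels and knows nothing about how elements relate to each other, which is the gap the abstract says the network's structural bias compensates for.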

|

2. Yiming Qian, Hao Zhang, and Yasutaka Furukawa,

"Roof-GAN: Learning to Generate Roof Geometry and Relations for Residential Houses", CVPR, 2021.

[arXiv | bibtex]

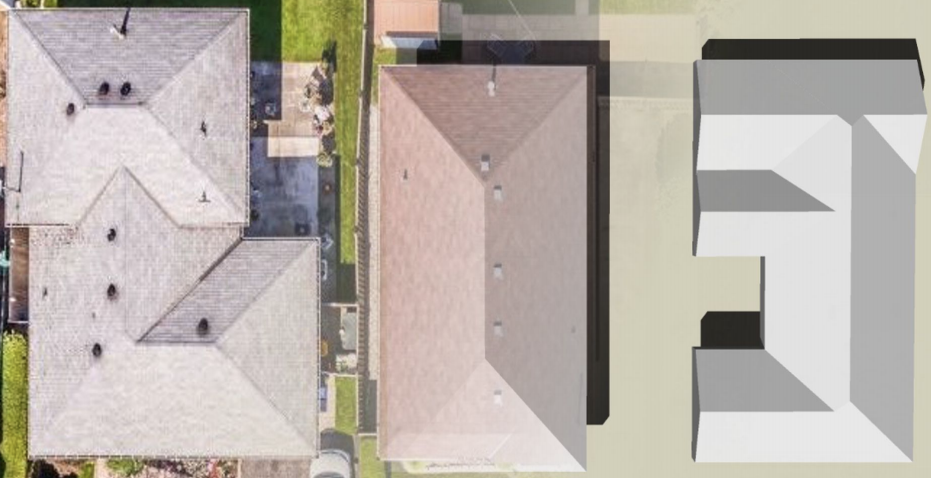

This paper presents Roof-GAN, a novel generative adversarial network that generates structured geometry of residential roof structures as a set of roof primitives and their relationships. Given the number of primitives, the generator produces a structured roof model as a graph, which consists of 1) primitive geometry as raster images at each node, encoding facet segmentation and angles; 2) inter-primitive colinear/coplanar relationships at each edge; and 3) primitive geometry in a vector format at each node, generated by a novel differentiable vectorizer while enforcing the relationships. |

|

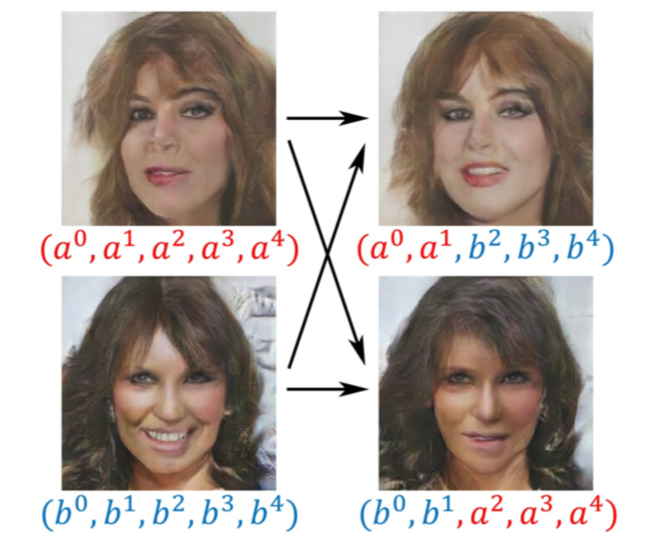

1. Or Patashnik, Dov Danon, Hao Zhang, and Daniel Cohen-Or,

"BalaGAN: Image Translation Between Imbalanced Domains via Cross-Modal Transfer", CVPR Workshop on Learning from Limited and Imperfect Data (L2ID), 2021.

[Project page | arXiv | bibtex]

State-of-the-art image-to-image translation methods tend to struggle in an imbalanced domain setting, where one image domain lacks richness and diversity. We introduce a new unsupervised translation network, BalaGAN, specifically designed to tackle the domain imbalance problem. We leverage the latent modalities of the richer domain to turn the image-to-image translation problem, between two imbalanced domains, into a balanced, multi-class, and conditional translation problem, more resembling the style transfer setting. Specifically, we analyze the source domain and learn a decomposition of it into a set of latent modes or classes, without any supervision. This leaves us with a multitude of balanced cross-domain translation tasks, between all pairs of classes, including the target domain. During inference, the trained network takes as input a source image, as well as a reference or style image from one of the modes as a condition, and produces an image which resembles the source on the pixel-wise level, but shares the same mode as the reference. |

|

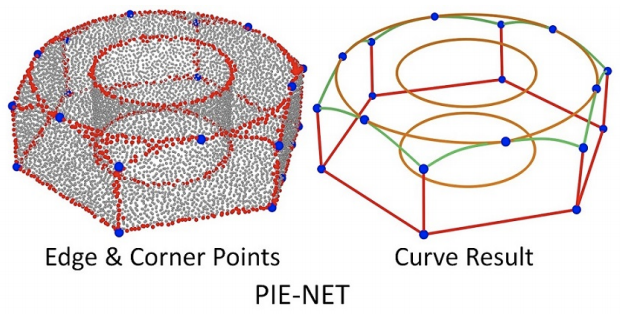

13. Xiaogang Wang, Yuelang Xu, Kai Xu, Andrea Tagliasacchi,

Bin Zhou, Ali Mahdavi-Amiri, and Hao Zhang,

"PIE-NET: Parametric Inference of Point Cloud Edges", NeurIPS, 2020.

[arXiv | bibtex]

We introduce an end-to-end learnable technique to robustly identify feature edges in 3D point cloud data. We represent these edges as a collection of parametric curves (i.e., lines, circles, and B-splines). Accordingly, our deep neural network, coined PIE-NET, is trained for parametric inference of edges. The network is trained on the ABC dataset and relies on a "region proposal" architecture, where a first module proposes an over-complete collection of edge and corner points, and a second module ranks each proposal to decide whether it should be considered. |

|

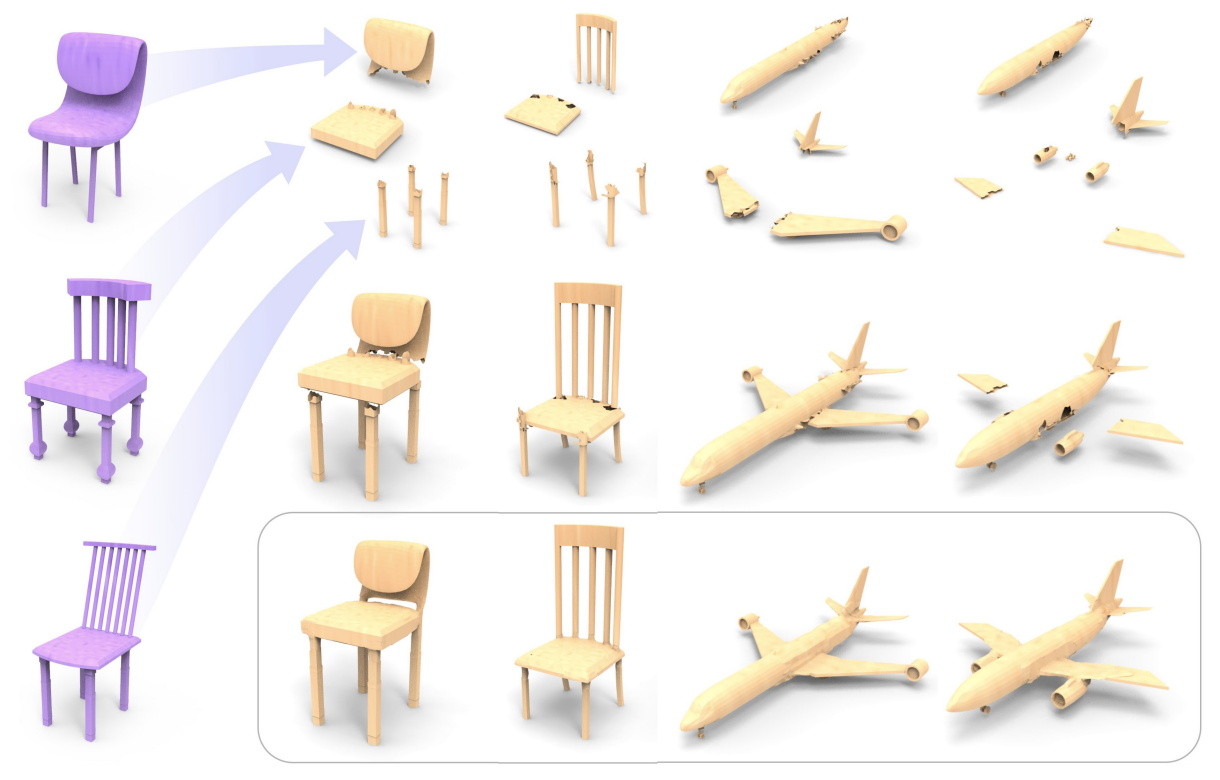

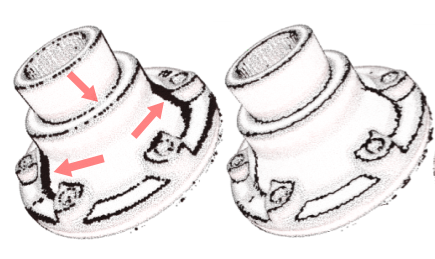

12. Kangxue Yin, Zhiqin Chen,

Siddhartha Chaudhuri,

Matt Fisher, Vladimir Kim,

and Hao Zhang,

"COALESCE: Component Assembly by Learning to Synthesize Connections", 3D Vision (3DV), (oral), 2020.

[arXiv | bibtex]

We introduce COALESCE, the first data-driven framework for component-based shape assembly which employs deep learning to synthesize part connections. To handle geometric and topological mismatches between parts, we remove the mismatched portions via erosion, and rely on a joint synthesis step, which is learned from data, to fill the gap and arrive at a natural and plausible part joint. Given a set of input parts extracted from different objects, COALESCE automatically aligns them and synthesizes plausible joints to connect the parts into a coherent 3D object represented by a mesh. The joint synthesis network, designed to focus on joint regions, reconstructs the surface between the parts by predicting an implicit shape representation that agrees with existing parts, while generating a smooth and topologically meaningful connection. |

|

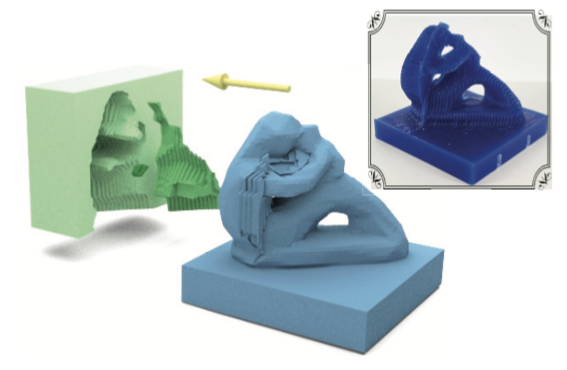

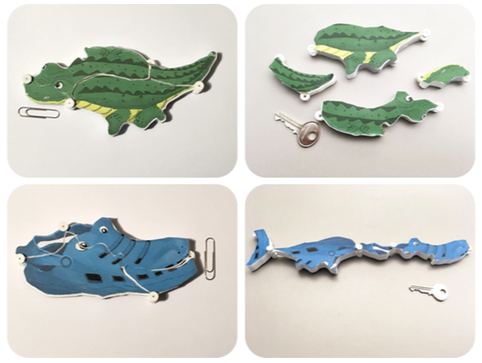

11. Ali Mahdavi-Amiri,

Fenggen Yu,

Haisen Zhao,

Adriana Schulz,

and Hao Zhang,

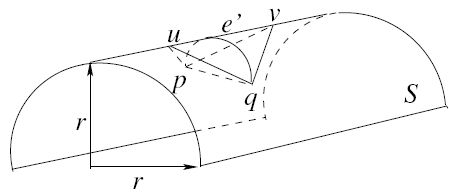

"VDAC: Volume Decompose-and-Carve for Subtractive Manufacturing",

ACM Transactions on Graphics (Special Issue of SIGGRAPH Asia), Vol. 39, No. 6, 2020.

[PDF | Project page | bibtex]

We introduce carvable volume decomposition for efficient 3-axis CNC machining of 3D freeform objects, where our goal is to develop a fully automatic method to jointly optimize setup and path planning. We formulate our joint optimization as a volume decomposition problem which prioritizes minimizing the number of setup directions while striving for a minimum number of continuously carvable volumes, where a 3D volume is continuously carvable, or simply carvable, if it can be carved with the machine cutter traversing a single continuous path. Geometrically, carvability combines visibility and monotonicity and presents a new shape property which had not been studied before. |

|

10. Ruizhen Hu, Juzhan Xu, Bin Chen,

Minglun Gong, Hao Zhang,

and Hui Huang,

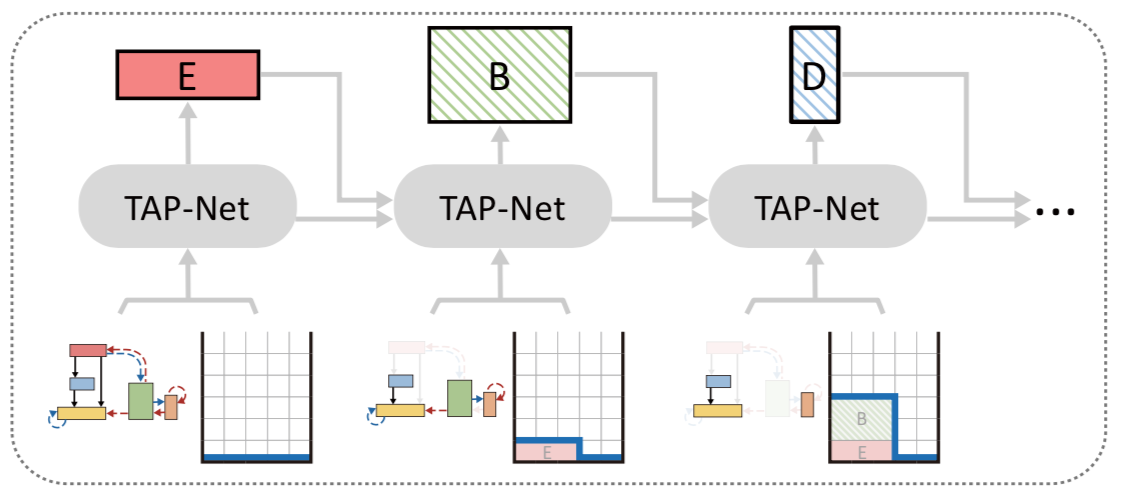

"TAP-Net: Transport-and-Pack using Reinforcement Learning",

ACM Transactions on Graphics (Special Issue of SIGGRAPH Asia), Vol. 39, No. 6, 2020.

[Project page | arXiv | bibtex]

We introduce the transport-and-pack (TAP) problem, a frequently encountered instance of real-world packing, and develop a neural optimization solution based on reinforcement learning. Given an initial spatial configuration of boxes, we seek an efficient method to iteratively transport and pack the boxes compactly into a target container. Due to obstruction and accessibility constraints, our problem has to add a new search dimension, i.e., finding an optimal transport sequence, to the already immense search space for packing alone. Using a learning-based approach, a trained network can learn and encode solution patterns to guide the solution of new problem instances instead of executing an expensive online search. |

|

9. Zili Yi, Zhiqin Chen, Hao Cai, Wendong Mao,

Minglun Gong, and Hao Zhang,

"BSD-GAN: Branched Generative Adversarial Networks for Scale-Disentangled Learning and Synthesis of Images",

IEEE Trans. on Image Processing, Vol. 29, pp. 9073-9083, 2020.

[arXiv | code | bibtex]

We introduce BSD-GAN, a novel multi-branch and scale-disentangled training method which enables unconditional Generative Adversarial Networks (GANs) to learn image representations at multiple scales, benefiting a wide range of generation and editing tasks. The key feature of BSD-GAN is that it is trained in multiple branches, progressively covering both the breadth and depth of the network, as resolutions of the training images increase to reveal finer-scale features. Specifically, each noise vector, as input to the generator network of BSD-GAN, is deliberately split into several sub-vectors, each corresponding to, and trained to learn, image representations at a particular scale. During training, we progressively "de-freeze" the sub-vectors, one at a time, as a new set of higher-resolution images is employed for training and more network layers are added. |

|

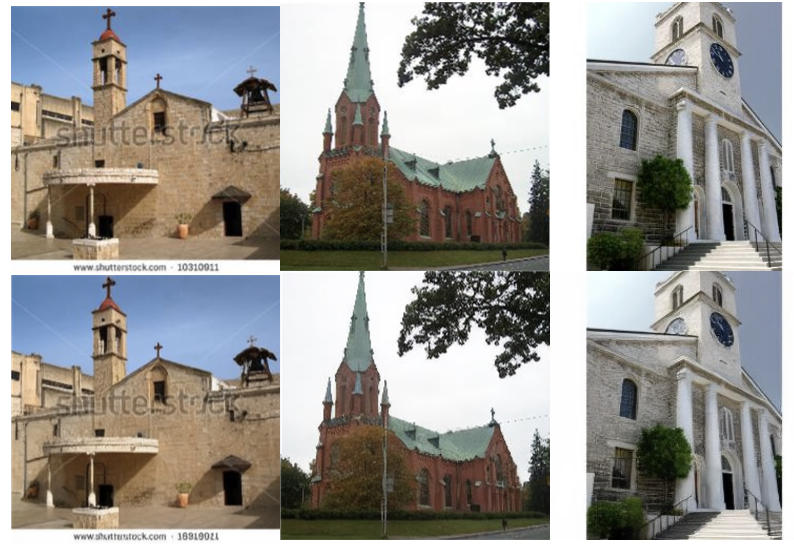

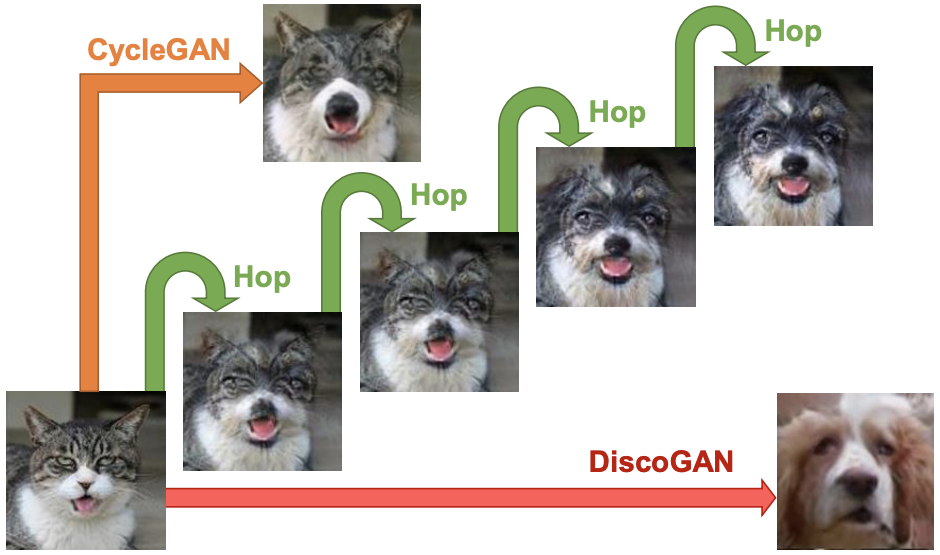

8. Wallace Lira, Johannes Merz, Daniel Ritchie,

Daniel Cohen-Or, and Hao Zhang,

"GANHopper: Multi-Hop GAN for Unsupervised Image-to-Image Translation", ECCV, 2020.

[arXiv | Youtube video | bibtex]

We introduce GANHopper, an unsupervised image-to-image translation network that transforms images gradually between two domains, through multiple hops. Instead of executing translation directly, we steer the translation by requiring the network to produce in-between images which resemble weighted hybrids between images from the two input domains. Our network is trained on unpaired images from the two domains only, without any in-between images. All hops are produced using a single generator along each direction. In addition to the standard cycle-consistency and adversarial losses, we introduce a new hybrid discriminator, which is trained to classify the intermediate images produced by the generator as weighted hybrids, with weights based on a predetermined hop count. |

|

7. Jiongchao Jin, Akshay Gadi Patil, Zhang Xiong, and Hao Zhang,

"DR-KFS: A Differentiable Visual Similarity Metric for 3D Shape Reconstruction", ECCV, 2020.

[arXiv | bibtex]

We introduce a differentiable visual similarity metric to train deep neural networks for 3D reconstruction, aimed at improving reconstruction quality. The metric compares two 3D shapes by measuring distances between multi-view images differentiably rendered from the shapes. Importantly, the image-space distance is also differentiable and measures visual similarity, rather than pixel-wise distortion. Specifically, the similarity is defined by mean-squared errors over HardNet features computed from probabilistic keypoint maps of the compared images. Our differentiable visual shape similarity metric can be easily plugged into various 3D reconstruction networks, replacing their distortion-based losses, such as Chamfer or Earth Mover distances, so as to optimize the network weights to produce reconstructions with better structural fidelity and visual quality. |
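For context on the distortion-based losses the DR-KFS abstract proposes to replace, here is a minimal sketch of one common form of the Chamfer distance between two point sets (the mean squared nearest-neighbor distance, summed over both directions). Conventions vary across papers, for example squared versus unsquared distances, so this particular form is an assumption, and the brute-force search is only suitable for small inputs.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two points of equal dimension."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

def chamfer_distance(points_p, points_q):
    """Symmetric Chamfer distance: mean nearest-neighbor squared distance, both ways."""
    def one_way(src, dst):
        return sum(min(squared_distance(s, d) for d in dst) for s in src) / len(src)
    return one_way(points_p, points_q) + one_way(points_q, points_p)

cloud_a = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
cloud_b = [(0.0, 0.0, 0.0), (1.0, 0.0, 1.0)]
```

The measure depends only on pointwise proximity, which is why two reconstructions with similar Chamfer scores can still differ noticeably in structure and appearance.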

|

6. Hao Xu, Ka Hei Hui, Chi-Wing Fu, and Hao Zhang,

"TilinGNN: Learning to Tile with Self-Supervised Graph Neural Network",

ACM Transactions on Graphics (Special Issue of SIGGRAPH), Vol. 39, No. 4, 2020.

[Project page | arXiv | Code | bibtex]

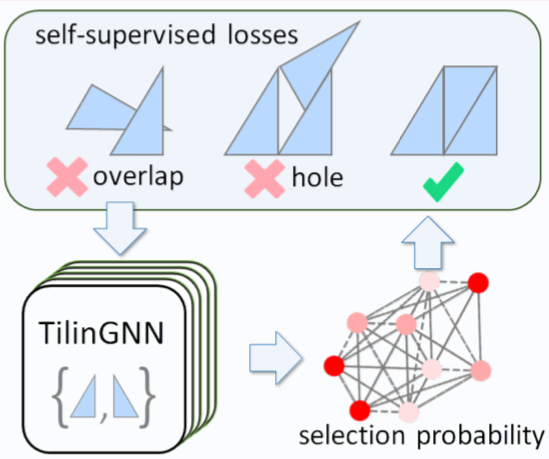

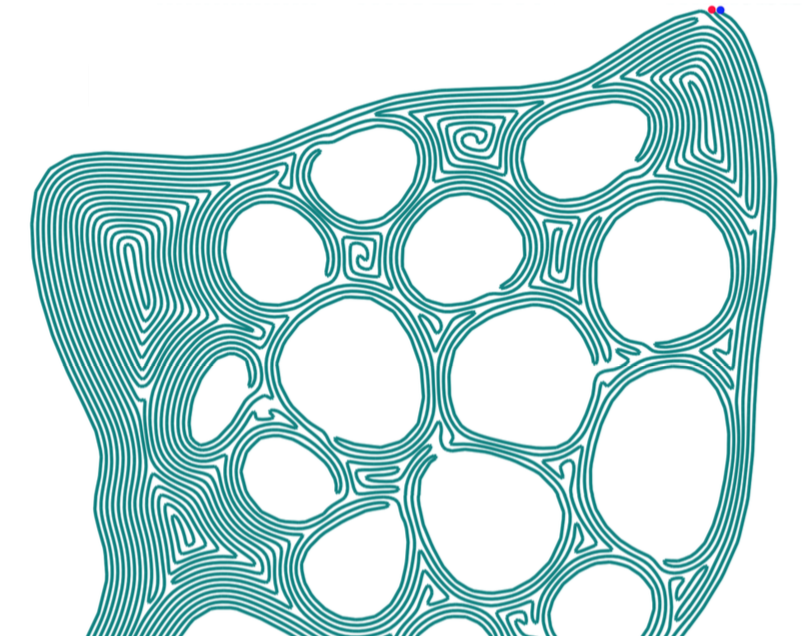

We introduce the first neural optimization framework to solve a classical instance of the tiling problem. Namely, we seek a non-periodic tiling of an arbitrary 2D shape using one or more types of tiles: the tiles maximally fill the shape’s interior without overlaps or holes. To start, we reformulate tiling as a graph problem by modeling candidate tile locations in the target shape as graph nodes and connectivity between tile locations as edges. We build a graph convolutional neural network, coined TilinGNN, to progressively propagate and aggregate features over graph edges and predict tile placements. Our network is self-supervised and trained by maximizing the tiling coverage on target shapes, while avoiding overlaps and holes between the tiles. After training, TilinGNN has a running time that is roughly linear in the number of candidate tile locations, significantly outperforming traditional combinatorial search. |
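
The graph reformulation can be sketched on a toy example. The code below enumerates domino placements inside a small grid shape as nodes and links two placements whenever they overlap. The domino tile, the grid shape, the overlap-only edge rule, and the names `candidate_placements` and `build_tiling_graph` are all assumptions for illustration and are not taken from the paper.

```python
from itertools import combinations


def candidate_placements(cells):
    """Enumerate domino placements (pairs of adjacent cells) inside the shape."""
    cells = set(cells)
    placements = []
    for (x, y) in sorted(cells):
        # Try extending each cell to the right and upward to avoid duplicates.
        for dx, dy in ((1, 0), (0, 1)):
            other = (x + dx, y + dy)
            if other in cells:
                placements.append(frozenset({(x, y), other}))
    return placements


def build_tiling_graph(placements):
    """Return edges (i, j) between candidate placements that share a cell."""
    edges = []
    for i, j in combinations(range(len(placements)), 2):
        if placements[i] & placements[j]:
            edges.append((i, j))
    return edges


# Toy target shape: a 2x2 square of unit cells.
shape = [(x, y) for x in range(2) for y in range(2)]
nodes = candidate_placements(shape)
edges = build_tiling_graph(nodes)
print(len(nodes), "candidate placements,", len(edges), "overlap edges")
```

A valid tiling in this toy setting is a set of nodes with no edge between any two of them that together cover the shape, which is the kind of selection a network over this graph would have to predict.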

|

5. Ruizhen Hu, Zeyu Huang, Yuhan Tang, Oliver van Kaick, Hao Zhang, and Hui Huang,

"Graph2Plan: Learning Floorplan Generation from Layout Graphs",

ACM Transactions on Graphics (Special Issue of SIGGRAPH), Vol. 39, No. 4, 2020.

[Project page | arXiv | bibtex]

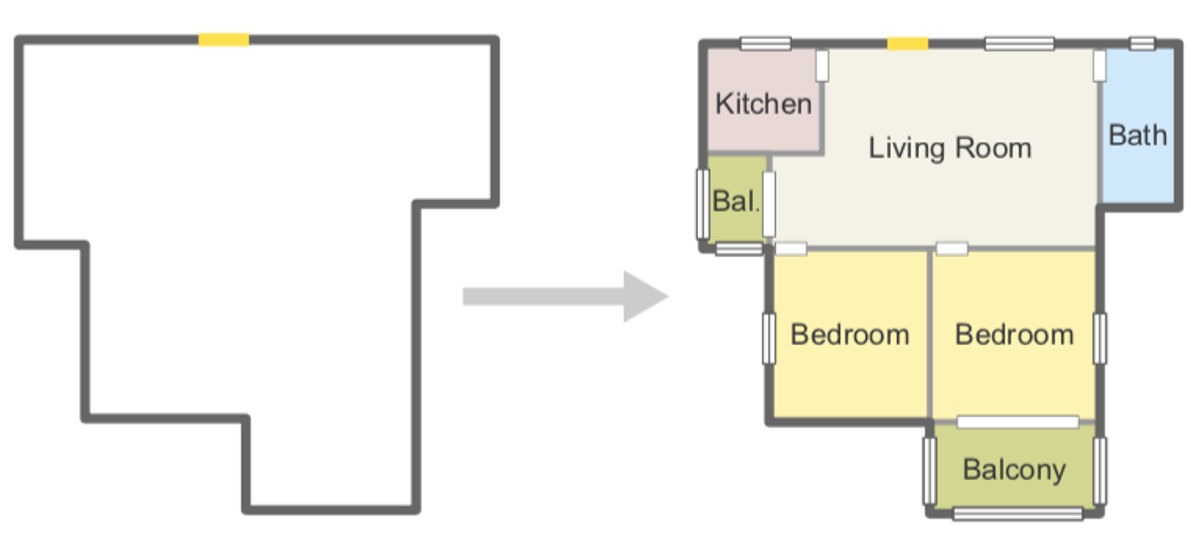

We introduce a learning framework for automated floorplan generation which combines generative modeling using deep neural networks and user-in-the-loop designs to enable human users to provide sparse design constraints. Such constraints are represented by a layout graph. The core component of our learning framework is a deep neural network, Graph2Plan, which is trained on RPLAN, a large-scale dataset consisting of 80K annotated, human-designed floorplans. The network converts a layout graph, along with a building boundary, into a floorplan that fulfills both the layout and boundary constraints. |

|

4. Zhiqin Chen, Andrea Tagliasacchi, and Hao Zhang,

"BSP-Net: Generating Compact Meshes via Binary Space Partitioning", CVPR, (oral), 2020. Best Student Paper Award.

[Project page (code+video) | arXiv | bibtex]

Polygonal meshes are ubiquitous in the digital 3D domain. Leading methods for learning generative models of shapes rely on implicit functions, and generate meshes only after expensive iso-surfacing routines. To overcome these challenges, we are inspired by a classical spatial data structure from computer graphics, Binary Space Partitioning (BSP), to facilitate 3D learning. The core ingredient of BSP is an operation for recursive subdivision of space to obtain convex sets. By exploiting this property, we devise BSP-Net, a network that learns to represent a 3D shape via convex decomposition. Importantly, BSP-Net is unsupervised since no convex shape decompositions are needed for training. The network is trained to reconstruct a shape using a set of convexes obtained from a BSP-tree built on a set of planes. The convexes inferred by BSP-Net can be easily extracted to form a polygon mesh, without any need for iso-surfacing. The generated meshes are compact (i.e., low-poly) and well suited to represent sharp geometry; they are guaranteed to be watertight and can be easily parameterized. |
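
The representation described above (a shape as a set of convexes, each bounded by planes) can be illustrated with a simple point-membership test. This is a minimal sketch only: the sign convention `a*x + b*y + c*z + d <= 0` for "inside", the helper `box`, and the function names are assumptions for this example, not the network's actual formulation.

```python
def inside_convex(point, planes):
    """A point is inside a convex when it lies on the inner side of every plane.

    Each plane is (a, b, c, d); 'inner side' is assumed to mean
    a*x + b*y + c*z + d <= 0.
    """
    x, y, z = point
    return all(a * x + b * y + c * z + d <= 0 for a, b, c, d in planes)


def inside_shape(point, convexes):
    """A point is inside the shape when it is inside at least one convex."""
    return any(inside_convex(point, planes) for planes in convexes)


def box(xmin, xmax, ymin, ymax, zmin, zmax):
    """Hypothetical helper: six half-spaces bounding an axis-aligned box."""
    return [
        (1, 0, 0, -xmax), (-1, 0, 0, xmin),
        (0, 1, 0, -ymax), (0, -1, 0, ymin),
        (0, 0, 1, -zmax), (0, 0, -1, zmin),
    ]


# An L-shaped solid assembled from two overlapping convex boxes.
l_shape = [box(0, 2, 0, 1, 0, 1), box(0, 1, 0, 2, 0, 1)]
print(inside_shape((1.5, 0.5, 0.5), l_shape))
print(inside_shape((1.5, 1.5, 0.5), l_shape))
```

Because each convex is an explicit intersection of half-spaces, its polygonal boundary can be computed directly from the planes, which is why no iso-surfacing step is needed.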

|

3. Chenyang Zhu, Kai Xu, Siddhartha Chaudhuri, Li Yi, Leonidas J. Guibas, and Hao Zhang,

"AdaCoSeg: Adaptive Shape Co-Segmentation with Group Consistency Loss", CVPR, (oral), 2020.

[arXiv | bibtex]

We introduce AdaCoSeg, a deep neural network architecture for adaptive co-segmentation of a set of 3D shapes represented as point clouds. Unlike the familiar single-instance segmentation problem, co-segmentation is intrinsically contextual: how a shape is segmented can vary depending on the set it is in. Hence, our network features an adaptive learning module to produce a consistent shape segmentation which adapts to a set. |

|

2. Rundi Wu, Yixin Zhuang, Kai Xu, Hao Zhang, and Baoquan Chen,

"PQ-NET: A Generative Part Seq2Seq Network for 3D Shapes", CVPR, 2020.

[arXiv | bibtex]

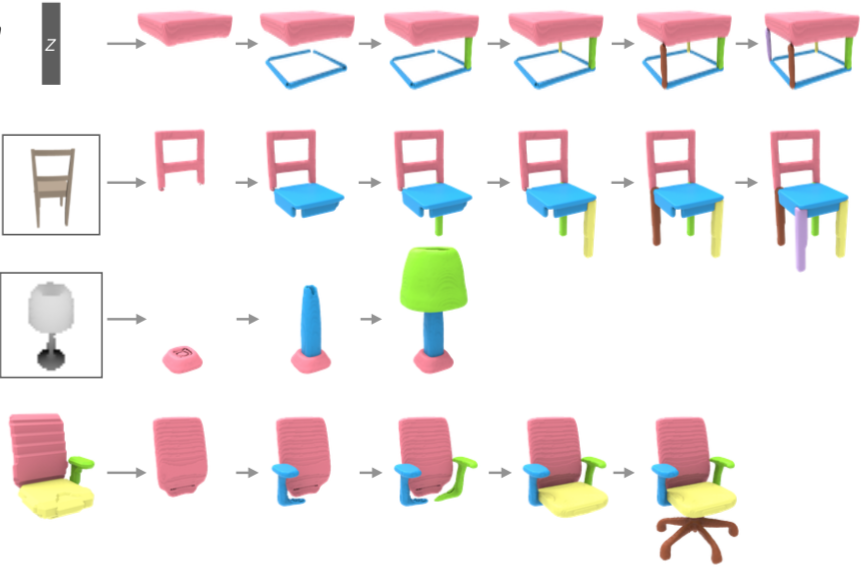

We introduce PQ-NET, a deep neural network which represents and generates 3D shapes via sequential part assembly. The input to our network is a 3D shape segmented into parts, where each part is first encoded into a feature representation using a part autoencoder. The core component of PQ-NET is a sequence-to-sequence or Seq2Seq autoencoder which encodes a sequence of part features into a latent vector of fixed size, and the decoder reconstructs the 3D shape, one part at a time, resulting in a sequential assembly. The latent space formed by the Seq2Seq encoder encodes both part structure and fine part geometry. The decoder can be adapted to perform several generative tasks including shape autoencoding, interpolation, novel shape generation, and single-view 3D reconstruction, where the generated shapes are all composed of meaningful parts. |

|

1. Siddhartha Chaudhuri, Daniel Ritchie, Jiajun Wu, Kai Xu, and Hao Zhang,

"Learning Generative Models of 3D Structures",

Computer Graphics Forum (Eurographics STAR), 2020.

[Project page | PDF | bibtex]

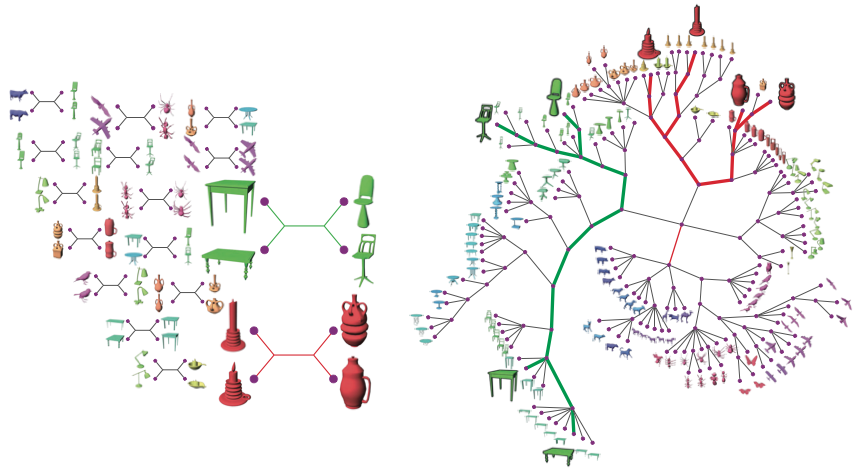

3D models of objects and scenes are critical to many academic disciplines and industrial applications. Of particular interest is the emerging opportunity for 3D graphics to serve artificial intelligence: computer vision systems can benefit from synthetically-generated training data rendered from virtual 3D scenes, and robots can be trained to navigate in and interact with real-world environments by first acquiring skills in simulated ones. One of the most promising ways to achieve this is by learning and applying generative models of 3D content: computer programs that can synthesize new 3D shapes and scenes. To allow users to edit and manipulate the synthesized 3D content to achieve their goals, the generative model should also be structure-aware: it should express 3D shapes and scenes using abstractions that allow manipulation of their high-level structure. This state-of-the-art report surveys historical work and recent progress on learning structure-aware generative models of 3D shapes and scenes. |

|

11. Kangxue Yin, Zhiqin Chen, Hui Huang, Daniel Cohen-Or, and Hao Zhang,

"LOGAN: Unpaired Shape Transform in Latent Overcomplete Space",

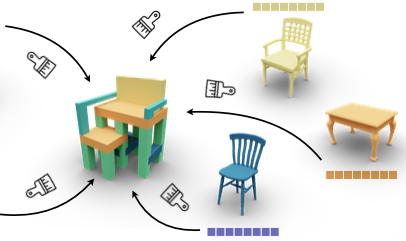

ACM Transactions on Graphics (Special Issue of SIGGRAPH Asia), Vol. 38, No. 6, Article 198, 2019. One of six papers selected for press release at SIGGRAPH Asia.

[arXiv | code | bibtex]

We introduce LOGAN, a deep neural network aimed at learning general-purpose shape transforms from unpaired domains. The network is trained on two sets of shapes, e.g., tables and chairs, while there is neither a pairing between shapes from the domains as supervision nor any point-wise correspondence between any shapes. Once trained, LOGAN takes a shape from one domain and transforms it into the other. Our network consists of an autoencoder to encode shapes from the two input domains into a common latent space, where the latent codes concatenate multi-scale shape features, resulting in an overcomplete representation. The translator is based on a latent generative adversarial network (GAN), where an adversarial loss enforces cross-domain translation while a feature preservation loss ensures that the right shape features are preserved for a natural shape transform. |

|

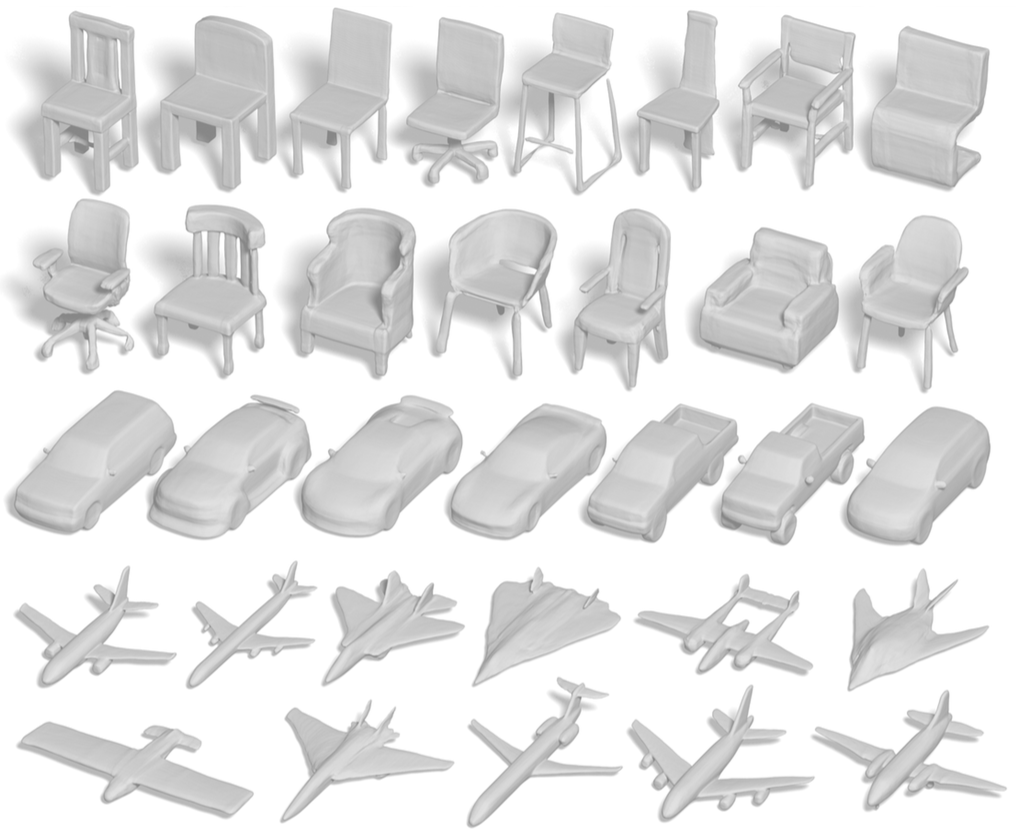

10. Lin Gao, Jie Yang, Tong Wu, Yu-Jie Yuan, Hongbo Fu, Yu-Kun Lai, and Hao Zhang,

"SDM-NET: Deep Generative Network for Structured Deformable Mesh",

ACM Transactions on Graphics (Special Issue of SIGGRAPH Asia), Vol. 38, No. 6, Article 243, 2019.

[Project page | arXiv | bibtex]

We introduce SDM-NET, a deep generative neural network which produces structured deformable meshes. Specifically, the network is trained to generate a spatial arrangement of closed, deformable mesh parts, which respect the global part structure of a shape collection, e.g., chairs, airplanes, etc. Our key observation is that while the overall structure of a 3D shape can be complex, the shape can usually be decomposed into a set of parts, each homeomorphic to a box, and the finer-scale geometry of the part can be recovered by deforming the box. The architecture of SDM-NET is that of a two-level variational autoencoder (VAE). At the part level, a PartVAE learns a deformable model of part geometries. At the structural level, we train a Structured Parts VAE (SP-VAE), which jointly learns the part structure of a shape collection and the part geometries, ensuring a coherence between global shape structure and surface details. |

|

9. Hao Xu, Ka Hei Hui, Chi-Wing Fu, and Hao Zhang,

"Computational LEGO Technic Design",

ACM Transactions on Graphics (Special Issue of SIGGRAPH Asia), Vol. 38, No. 6, Article 196, 2019. Included in the SIGGRAPH Asia 2019 technical paper trailer.

[arXiv | bibtex]

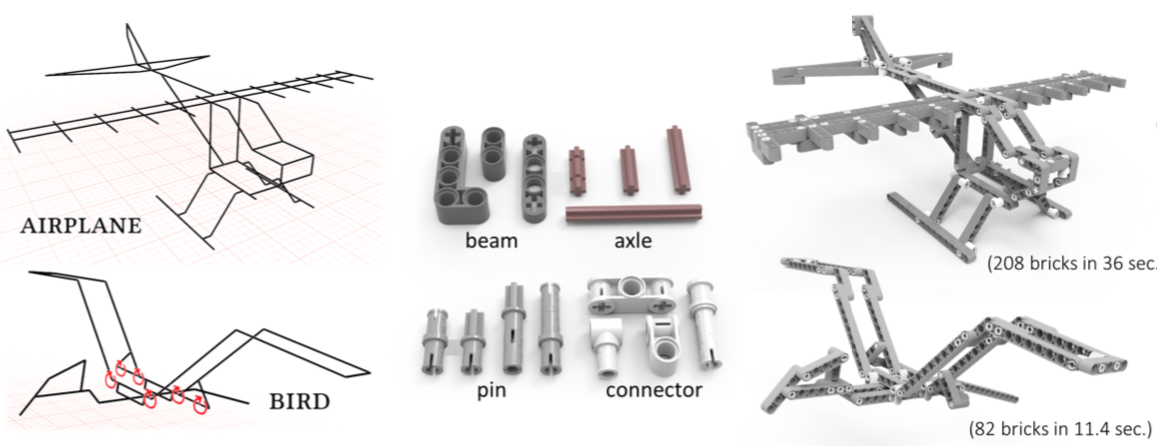

We introduce a method to automatically compute LEGO Technic models from user input sketches, optionally with motion annotations. The generated models resemble the input sketches with coherently-connected bricks and simple layouts, while respecting the intended symmetry and mechanical properties expressed in the inputs. This complex computational assembly problem involves an immense search space, and a much richer brick set and connection mechanisms than regular LEGO. To address it, we first comprehensively model the brick properties and connection mechanisms, then formulate the construction requirements into an objective function, accounting for faithfulness to the input sketch, model simplicity, and structural integrity. Next, we model the problem as a sketch cover, where we iteratively refine a random initial layout to cover the input sketch, while guided by the objective. Finally, we provide a working system to analyze the balance, stress, and assemblability of the generated model. |

|

8. Zhihao Yan, Ruizhen Hu, Xingguang Yan, Luanmin Chen, Oliver van Kaick, Hao Zhang, and Hui Huang,

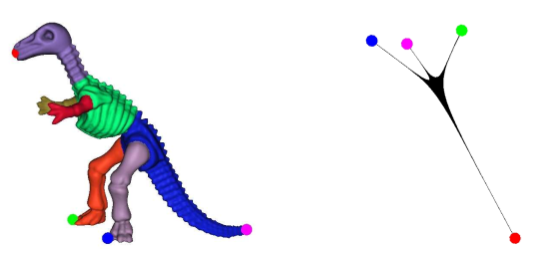

"RPM-Net: Recurrent Prediction of Motion and Parts from Point Cloud",

ACM Transactions on Graphics (Special Issue of SIGGRAPH Asia), Vol. 38, No. 6, Article 240, 2019.

[Project page | bibtex]

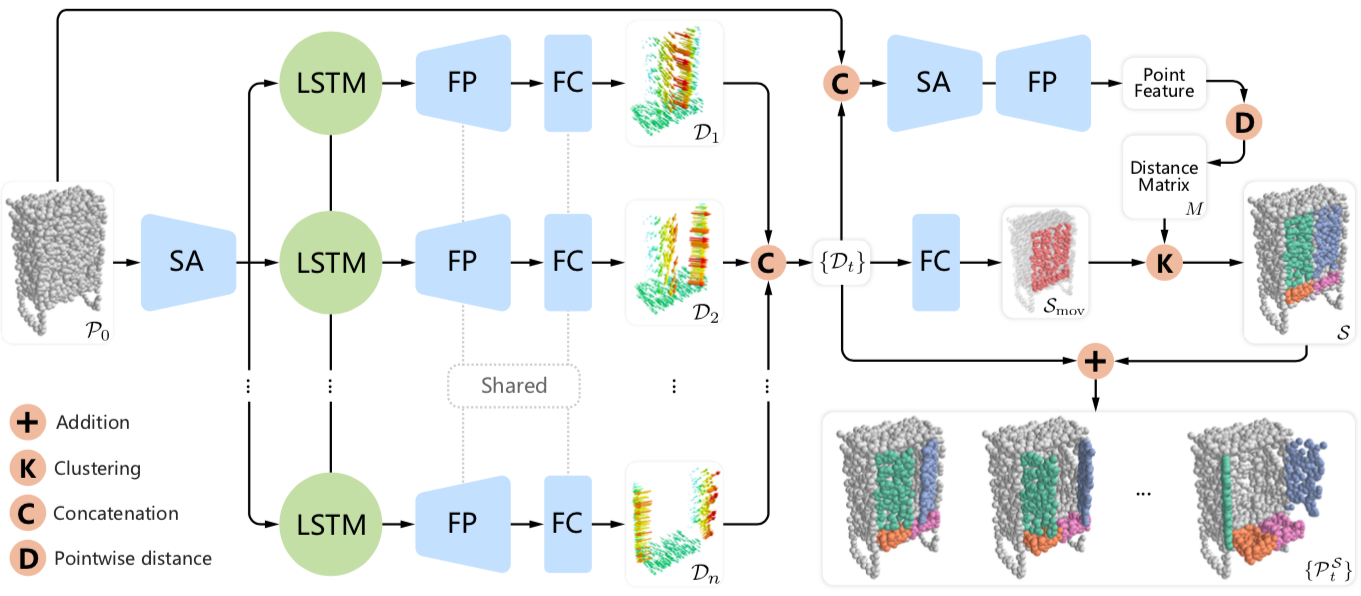

We introduce RPM-Net, a deep learning-based approach which simultaneously infers movable parts and hallucinates their motions from a single, un-segmented, and possibly partial, 3D point cloud shape. RPM-Net is a novel Recurrent Neural Network (RNN), composed of an encoder-decoder pair with interleaved Long Short-Term Memory (LSTM) components, which together predict a temporal sequence of point-wise displacements for the input shape. At the same time, the displacements allow the network to learn movable parts, resulting in a motion-based shape segmentation. Recursive applications of RPM-Net on the obtained parts can predict finer-level part motions, resulting in a hierarchical object segmentation. Furthermore, we develop a separate network to estimate part mobilities, e.g., per-part motion parameters, from the segmented motion sequence. |

|

7. Zhiqin Chen, Kangxue Yin, Matt Fisher, Siddhartha Chaudhuri, and Hao Zhang,

"BAE-NET: Branched Autoencoder for Shape Co-Segmentation", ICCV, 2019.

[arXiv | code | bibtex]

We treat shape co-segmentation as a representation learning problem and introduce BAE-NET, a branched autoencoder network, for the task. The unsupervised BAE-NET is trained with all shapes in an input collection using a shape reconstruction loss, without ground-truth segmentations. Specifically, the network takes an input shape and encodes it using a convolutional neural network, whereas the decoder concatenates the resulting feature code with a point coordinate and outputs a value indicating whether the point is inside/outside the shape. Importantly, the decoder is branched: each branch learns a compact representation for one commonly recurring part of the shape collection, e.g., airplane wings. By complementing the shape reconstruction loss with a label loss, BAE-NET is easily tuned for one-shot learning. |

|

6. Nadav Schor, Oren Katzir, Hao Zhang, and Daniel Cohen-Or,

"CompoNet: Learning to Generate the Unseen by Part Synthesis and Composition", ICCV, 2019.

[arXiv | code | bibtex]

Data-driven generative modeling has made remarkable progress by leveraging the power of deep neural networks. A recurring challenge is how to sample a rich variety of data from the entire target distribution, rather than only from the distribution of the training data. In other words, we would like the generative model to go beyond the observed training samples and learn to also generate "unseen" data. In our work, we present a generative neural network for shapes that is based on a part-based prior, where the key idea is for the network to synthesize shapes by varying both the shape parts and their compositions. |

|

5. Zhiqin Chen and Hao Zhang,

"Learning Implicit Fields for Generative Shape Modeling", CVPR,

arXiv:1812.02822, 2019.

[PDF | code | bibtex]

We advocate the use of implicit fields for learning generative models of shapes and introduce an implicit field decoder for shape generation, aimed at improving the visual quality of the generated shapes. An implicit field assigns a value to each point in 3D space, so that a shape can be extracted as an iso-surface. Our implicit field decoder is trained to perform this assignment by means of a binary classifier. Specifically, it takes a point coordinate, along with a feature vector encoding a shape, and outputs a value which indicates whether the point is outside the shape or not ... |
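
The decoder interface described above (point coordinate plus shape feature vector in, a single inside/outside value out) can be sketched as follows. This is only an illustration of the input/output contract: the tiny two-layer network, its random untrained weights, the layer sizes, and the name `make_decoder` are assumptions for this example and do not reflect the actual architecture or training.

```python
import math
import random


def make_decoder(feature_dim, hidden_dim=8, seed=0):
    """Build a toy implicit-field decoder with random (untrained) weights."""
    rng = random.Random(seed)
    in_dim = 3 + feature_dim  # 3D point coordinate + shape feature vector
    w1 = [[rng.uniform(-1, 1) for _ in range(in_dim)] for _ in range(hidden_dim)]
    w2 = [rng.uniform(-1, 1) for _ in range(hidden_dim)]

    def decoder(point, feature):
        if len(point) != 3 or len(feature) != feature_dim:
            raise ValueError("expected a 3D point and a feature of the configured size")
        x = list(point) + list(feature)  # concatenate point and shape code
        hidden = [max(0.0, sum(w * v for w, v in zip(row, x))) for row in w1]  # ReLU
        logit = sum(w * h for w, h in zip(w2, hidden))
        return 1.0 / (1.0 + math.exp(-logit))  # score in [0, 1]

    return decoder


decoder = make_decoder(feature_dim=4)
shape_code = [0.1, -0.3, 0.7, 0.2]  # hypothetical feature vector for one shape
value = decoder((0.0, 0.5, -0.5), shape_code)
print(value)
```

In a trained model, thresholding this value over a grid of query points would give the occupancy samples from which an iso-surface is extracted; which side of the threshold means "inside" is a convention this sketch leaves open.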

|

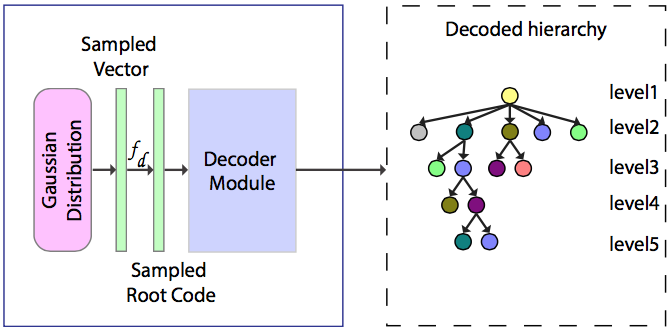

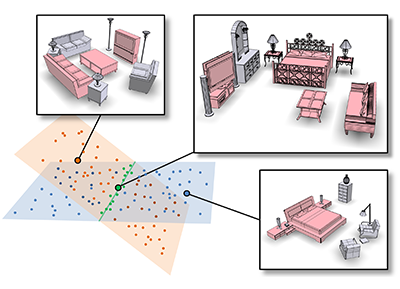

4. Manyi Li, Akshay Gadi Patil, Kai Xu, Siddhartha Chaudhuri, Owais Khan, Ariel Shamir, Changhe Tu, Baoquan Chen, Daniel Cohen-Or, and Hao Zhang,

"GRAINS: Generative Recursive Autoencoders for INdoor Scenes",

ACM Transactions on Graphics, Vol. 38, No. 2, Article 12, presented at SIGGRAPH, 2019.

[arXiv | bibtex]

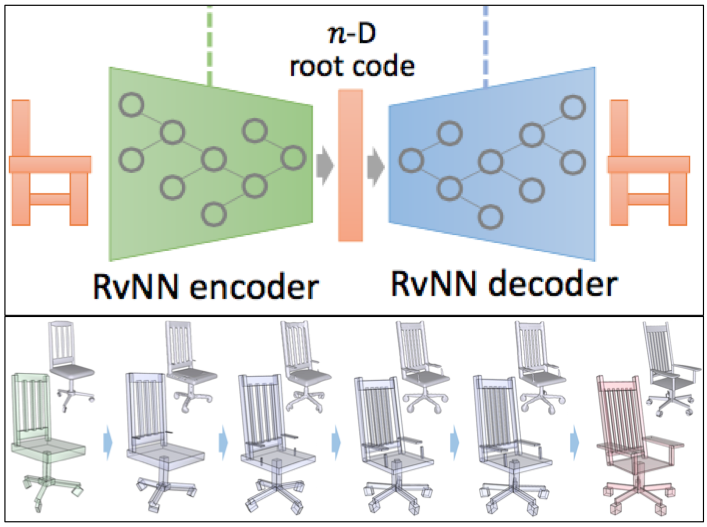

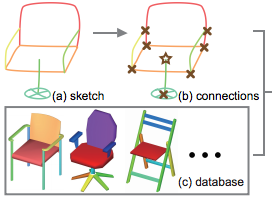

We present a generative neural network which enables us to generate plausible 3D indoor scenes in large quantities and varieties, easily and highly efficiently. Our key observation is that indoor scene structures are inherently hierarchical. Hence, our network is not convolutional; it is a recursive neural network or RvNN. Using a dataset of annotated scene hierarchies, we train a variational recursive autoencoder, or RvNN-VAE, which performs scene object grouping during its encoding phase and scene generation during decoding. |

|

3. Yuan Gan, Yan Zhang, and Hao Zhang,

"Qualitative Organization of Photo Collections via Quartet Analysis and Active Learning", Proc. of Graphics Interface, 2019.

[PDF]