Prev: Loop Invariants

Next: Elementary Data Structures

- by Brad Bart, Simon Fraser University


Invariants and Recursive Algorithms

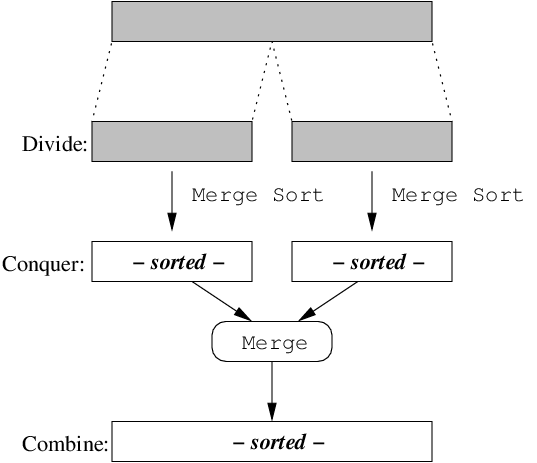

Recursive algorithms often solve problems by the Divide & Conquer paradigm, which involves three stages: divide, conquer and combine.

Divide the problem into 2 or more smaller subproblems, whose solutions are strongly related to the solution of the original problem.

Conquer by calling the recursive algorithm on each subproblem.

Combine the subproblem solutions to form the solution to the original problem.

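    // merges sorted A[first..mid] and sorted A[mid+1..last] into sorted A[first..last]
    // (assumed to be defined elsewhere)
    void merge(int *A, int first, int mid, int last);

    // sorts A[first..last]
    void msort(int *A, int first, int last) {
        // base case: zero or one element is already sorted
        if (first >= last) return;

        // divide (written so that first+last cannot overflow)
        int mid = first + (last - first) / 2;

        // conquer
        msort(A, first, mid);
        msort(A, mid+1, last);

        // combine
        merge(A, first, mid, last);
    }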

Just as in the inductive step of a strong induction, you may assume that the algorithm is correct for all smaller cases. Because first ≤ mid < last whenever first < last, both sublists A[first..mid] and A[mid+1..last] are strictly smaller than A[first..last], and therefore the conquer stage correctly sorts them. Since the merge(...) step results in a sorted A[first..last], the algorithm is correct.
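The inductive argument can also be written down as run-time checks. The following is a minimal sketch, not the author's code: the name `msort_checked` is hypothetical, `std::inplace_merge` from the standard library is assumed as a stand-in for `merge(...)`, and `std::is_sorted` is used to check the inductive hypothesis after the conquer stage and the conclusion after the combine stage.

```cpp
#include <algorithm>
#include <cassert>

// Merge Sort on A[first..last], with the inductive argument written as assertions.
// Assumption: std::inplace_merge plays the role of the merge(...) step.
void msort_checked(int *A, int first, int last) {
    // base case: zero or one element is already sorted
    if (first >= last) return;

    // divide: first <= mid < last, so both halves are strictly smaller
    int mid = first + (last - first) / 2;
    assert(first <= mid && mid < last);

    // conquer
    msort_checked(A, first, mid);
    msort_checked(A, mid + 1, last);

    // inductive hypothesis: each half is now sorted
    assert(std::is_sorted(A + first, A + mid + 1));
    assert(std::is_sorted(A + mid + 1, A + last + 1));

    // combine
    std::inplace_merge(A + first, A + mid + 1, A + last + 1);

    // conclusion: the whole range A[first..last] is sorted
    assert(std::is_sorted(A + first, A + last + 1));
}
```

Calling `msort_checked(A, 0, n - 1)` on an n-element array sorts it and aborts if any step of the argument fails. The checks are there only to mirror the proof; each one costs linear time, so they would normally be removed once the reasoning is understood.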

The analysis of the running time is similar. Let T(n) represent the number of steps to Merge Sort an n element array. When n ≤ 1, the array A is trivially sorted (base case), and the running time is O(1). When n > 1, a certain number of steps are taken for each of the Divide, Conquer and Combine stages.

Divide: Computing the value of mid costs O(1) time.

Conquer: The lower half of the array has ⌈n/2⌉ elements and the upper half has ⌊n/2⌋. Recursively sorting them costs T(⌈n/2⌉) + T(⌊n/2⌋) steps.

Combine: What is the time required to merge n elements? At each step, the smallest un-merged element of the lower half is compared with the smallest un-merged element of the upper half. The smaller of the two is the minimum of all un-merged elements, and is placed in its correct position. Once one half is exhausted, the remaining elements of the other half are placed in order. Since each element is placed exactly once, the number of steps is linear, i.e., O(n).
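The merge described in the Combine stage could be written along the following lines. This is a sketch under stated assumptions rather than the course's own `merge`: the name `merge_counted`, the temporary buffer `tmp` and the returned count of placements are all introduced here for illustration. The count makes the linear bound visible, since it always equals the number of elements in A[first..last].

```cpp
#include <vector>

// Merges sorted A[first..mid] and sorted A[mid+1..last] into sorted A[first..last].
// Returns the number of elements placed (illustrative only; hypothetical helper).
int merge_counted(int *A, int first, int mid, int last) {
    std::vector<int> tmp;
    tmp.reserve(last - first + 1);

    int i = first;     // smallest un-merged element of the lower half
    int j = mid + 1;   // smallest un-merged element of the upper half

    // place the smaller of the two front elements
    while (i <= mid && j <= last) {
        if (A[i] <= A[j]) tmp.push_back(A[i++]);  // <= keeps equal elements in order
        else              tmp.push_back(A[j++]);
    }
    // one half is exhausted: the rest of the other half is already in order
    while (i <= mid)  tmp.push_back(A[i++]);
    while (j <= last) tmp.push_back(A[j++]);

    // copy the merged result back into A[first..last]
    for (int k = 0; k < static_cast<int>(tmp.size()); ++k) A[first + k] = tmp[k];

    return static_cast<int>(tmp.size());
}
```

Each element is pushed into `tmp` exactly once and copied back exactly once, which is where the O(n) bound comes from. Using a temporary buffer is one design choice among several; it trades O(n) extra space for a simple loop.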

$$
T(n) =
\begin{cases}
O(1) & \text{if } n \le 1 \\
O(1) + T(\lceil n/2 \rceil) + T(\lfloor n/2 \rfloor) + O(n) & \text{if } n > 1
\end{cases}
$$

The algebra involved in the inductive step of solving this recurrence is beyond the scope of CMPT 225: you will do proofs like this when you take CMPT 307. If you just can't wait until then, click here.
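Even without the algebra, the recurrence can be tabulated numerically to see how it grows. The sketch below makes a simplifying assumption that is not in the text: each O(1) term is counted as exactly 1 step and the O(n) term as exactly n steps. The function name `recurrence_T` is hypothetical.

```cpp
#include <cstdint>

// T(n) under assumed unit costs:
//   T(n) = 1                                      if n <= 1
//   T(n) = 1 + T(ceil(n/2)) + T(floor(n/2)) + n   if n > 1
std::int64_t recurrence_T(std::int64_t n) {
    if (n <= 1) return 1;               // base case: O(1) counted as 1
    std::int64_t upper = n / 2;         // floor(n/2) elements in the upper half
    std::int64_t lower = n - upper;     // ceil(n/2) elements in the lower half
    return 1 + recurrence_T(lower) + recurrence_T(upper) + n;
}
```

With these assumed constants, `recurrence_T(2)` is 5, `recurrence_T(4)` is 15 and `recurrence_T(8)` is 39. For powers of two the values agree with n·log₂(n) + 2n − 1, which suggests growth proportional to n log n; the exact constants depend on the assumed step costs.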

You will write and prove your own loop invariants in CMPT 225: some will be about the algorithm's progress/correctness; some about its running time. Induction has another use, however: the development and analysis of data structures. This is the subject of Part 3.
