Group Activity Recognition using Context

Context relating the actions of individuals and groups of people is modelled for human activity recognition. The project develops two approaches to modelling context in a latent variable framework. The first works at the structure level, adapting the latent connections between the actions of individuals. The second works at the feature level, where a context descriptor is proposed.

|

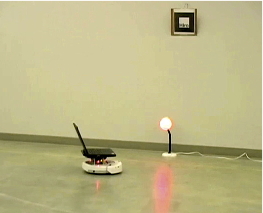

Selecting and Commanding Individual Robots Using Vision

In this work, we describe a human-robot interface designed to use face engagement as a method for selecting a particular robot from a group of robots. Face detection is performed by each robot; the resulting score of the detected face is used in a distributed leader election algorithm to guarantee a single robot is selected. Once selected, motion-based gestures are used to assign tasks to the robot.
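The selection step can be illustrated with a minimal sketch. It is not the project's actual election algorithm: it assumes every robot receives every other robot's announcement, and the names `Announcement`, `robot_id`, `face_score`, and `elect_leader` are hypothetical. Each robot runs the same deterministic rule on the same set of announcements, so all robots agree on a single winner.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Announcement:
    robot_id: int      # hypothetical unique identifier for a robot
    face_score: float  # hypothetical face-detection score reported by that robot


def elect_leader(announcements: Iterable[Announcement]) -> Optional[int]:
    """Return the id of the single selected robot, or None if nobody reported."""
    announcements = list(announcements)
    if not announcements:
        return None
    # Highest face score wins; ties are broken by the lowest robot_id,
    # so the result is unique even when two robots report equal scores.
    best = max(announcements, key=lambda a: (a.face_score, -a.robot_id))
    return best.robot_id


if __name__ == "__main__":
    heard = [Announcement(1, 0.40), Announcement(2, 0.90), Announcement(3, 0.90)]
    print(elect_leader(heard))
```

The tie-break on `robot_id` is what makes the outcome a single robot; with scores alone, two robots seeing the face equally well could both consider themselves selected.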

|

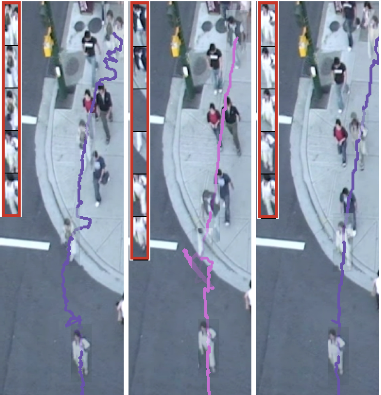

Pedestrian Tracking using Multiple Cues

In this project, we build a pedestrian tracking system that uses a max-margin criterion to jointly learn the system parameters.

|

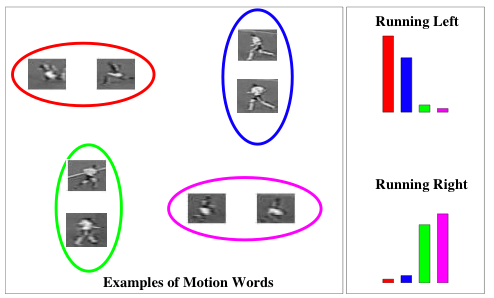

SFU at TRECVID 2009: Event Detection

The TRECVID Event Detection evaluation, sponsored by NIST, evaluates state-of-the-art event detection approaches on realistic airport surveillance video data (up to 100 hours). The SFU team participated in the contest for the Running, Embrace, and Pointing events.

|

Human Action Recognition by Semi-Latent Topic Models

In this project, we propose two topic models for recognizing human actions based on a "bag of words" representation.
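As a rough illustration of the bag-of-words representation only (the topic models themselves are not sketched here), local descriptors can be assigned to their nearest codebook entry and counted. The function name `bag_of_words`, the toy codebook, and the use of Euclidean distance are all assumptions made for this example, not details taken from the project.

```python
import math
from collections import Counter
from typing import List, Sequence


def bag_of_words(descriptors: Sequence[Sequence[float]],
                 codebook: Sequence[Sequence[float]]) -> List[int]:
    """Count how many descriptors fall closest to each codebook entry."""
    counts: Counter = Counter()
    for descriptor in descriptors:
        # Index of the nearest codeword under Euclidean distance (an assumption).
        word = min(range(len(codebook)),
                   key=lambda i: math.dist(descriptor, codebook[i]))
        counts[word] += 1
    # One histogram bin per codeword, in codebook order.
    return [counts[i] for i in range(len(codebook))]


if __name__ == "__main__":
    toy_codebook = [(0.0, 0.0), (10.0, 10.0)]
    toy_descriptors = [(1.0, 0.0), (9.0, 9.0), (10.0, 11.0)]
    print(bag_of_words(toy_descriptors, toy_codebook))
```

The resulting histogram discards the order of the descriptors, which is the defining property of a bag-of-words representation.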

|

Real-time Motion-based Gesture Recognition using the GPU

We present a method for recognizing human gestures in real-time, making use of the GPU.

|

Bearcam: Automated Wildlife Monitoring At The Arctic Circle

We deployed a camera system called the "BearCam" to monitor the behaviour of grizzly bears at a remote location near the Arctic Circle. The system aided biologists in collecting data for their study on bears' behavioural responses to ecotourists. We developed a novel algorithm for automatically detecting bears in the video captured by this camera system.

|

This project presents an automatic method for precisely tracking a person in an image sequence.

|

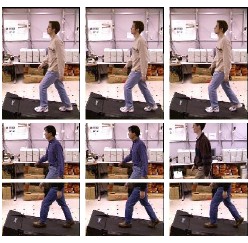

Responsive Video-Based Motion Synthesis

This project is about creating new videos of human motion by splicing together clips from existing videos. |

Recognizing Fish in Underwater Video

The goal of this collaborative project is to develop vision techniques for recognizing different fish species in challenging underwater video footage. |

Action Recognition of Insects Using Spectral Clustering

This work is about finding different activities of insects (grasshoppers) using the stereo track.

|

Unsupervised Discovery of Action Classes

This work is concerned with discovering repetitive human actions in an unsupervised way.

|

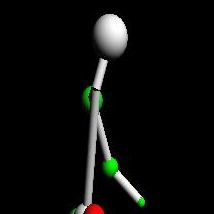

Human Body Pose Estimation

We are researching automatic methods for estimating the pose of human figures in still images and video sequences.

|

Shape Matching and Breaking CAPTCHAs

We are studying shape representations and their application to object recognition. We have used these techniques to break visual CAPTCHAs, word puzzles designed to tell the difference between humans and bots.

|

Lighting Invariance

This work is concerned with the derivation of illumination-invariant, and hence shadow-free, image representations.

|

Recognizing Action at a Distance

Our goal in this project is to recognize human actions at a distance, at resolutions where a whole person may be, say, 30 pixels tall. To classify the action being performed by a human figure in a query sequence, we retrieve the nearest neighbor(s) from a database of stored, annotated video sequences. We can also use these retrieved exemplars to transfer 2D/3D skeletons onto the figures in the query sequence, and for two forms of data-based action synthesis.
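The retrieval-based classification step can be sketched as follows. This is a minimal illustration under stated assumptions: the feature vectors, the Euclidean distance, and the name `classify_by_nearest_neighbors` are placeholders, since the listing does not specify the project's descriptor or similarity measure.

```python
import math
from collections import Counter
from typing import Sequence, Tuple


def classify_by_nearest_neighbors(
    query: Sequence[float],
    database: Sequence[Tuple[Sequence[float], str]],
    k: int = 1,
) -> str:
    """Label a query feature vector by majority vote among its k nearest
    annotated exemplars (Euclidean distance is an assumption)."""
    # Sort stored exemplars from most to least similar to the query.
    ranked = sorted(database, key=lambda item: math.dist(query, item[0]))
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    # Toy annotated database: (feature vector, action label).
    stored = [
        ((0.0, 0.0), "walk"),
        ((1.0, 1.0), "run"),
        ((0.9, 1.1), "run"),
    ]
    print(classify_by_nearest_neighbors((0.1, 0.0), stored))
    print(classify_by_nearest_neighbors((1.0, 1.0), stored, k=3))
```

Because the retrieved exemplars are stored sequences with annotations, the same ranking could in principle also return the matched exemplar itself rather than only its label.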

|

Image-based Music Generation

This study focuses on converting images into music using various visual-auditory associations.

|