
What is the class about?

Are you interested in understanding the architecture of the cutting-edge systems you will be programming in the future, and the challenges they pose for software?

In parallel computing, multiple processors compute sub-tasks simultaneously so that work can be completed faster. Early parallel computing focused on solving large, computation-intensive problems such as scientific simulations. With the increasing availability of commodity multiprocessors (such as multicores), parallel processing has spread into many areas of general computing. In distributed computing, a set of autonomous computers is coordinated to achieve a common goal. More interestingly, the community is increasingly turning to parallel programming models (e.g., Google's MapReduce, Microsoft's DryadLINQ) to harness the capabilities of these large-scale distributed systems.
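To make the idea of sub-tasks concrete, here is a minimal C++ sketch (assuming C++11 or later and `std::thread`; the function name `parallel_sum` is a hypothetical illustration, not course material). It splits a sum into one contiguous chunk per thread, lets the threads run simultaneously, and then combines their partial results.

```cpp
#include <algorithm>
#include <cstddef>
#include <numeric>
#include <thread>
#include <vector>

// Hypothetical helper: sum `data` by splitting it into `num_threads`
// contiguous chunks; each thread computes one partial sum (a sub-task)
// at the same time as the others.
long long parallel_sum(const std::vector<int>& data, unsigned num_threads) {
    if (num_threads == 0) num_threads = 1;
    std::vector<long long> partial(num_threads, 0);  // one slot per thread
    std::vector<std::thread> workers;
    const std::size_t chunk = (data.size() + num_threads - 1) / num_threads;

    for (unsigned t = 0; t < num_threads; ++t) {
        const std::size_t first = std::min(data.size(), t * chunk);
        const std::size_t last = std::min(data.size(), first + chunk);
        workers.emplace_back([&data, &partial, t, first, last] {
            // Each thread writes only its own slot, so no lock is needed.
            partial[t] = std::accumulate(
                data.begin() + static_cast<std::ptrdiff_t>(first),
                data.begin() + static_cast<std::ptrdiff_t>(last), 0LL);
        });
    }
    for (auto& w : workers) w.join();  // wait for every sub-task to finish

    // Combine the partial results sequentially.
    return std::accumulate(partial.begin(), partial.end(), 0LL);
}
```

For example, summing the integers 1 to 1000 with 4 threads returns 500500, the same answer as a sequential loop. The speedup comes from the chunks running in parallel; the price is thread start-up cost and a short sequential combine step at the end.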

This course explores the paradigms of parallel and distributed computing, their applications, and the systems/architectures supporting them. It is a hands-on, programming-heavy course: expect to get down and get your hands dirty with concurrent C++ programming. We will discuss the fundamental design and engineering trade-offs in parallel and distributed systems at every level. We will study not only how these systems work today, but also the design decisions behind them and how they are likely to evolve in the future. Throughout the course, we will draw examples from real-world parallel and distributed systems.

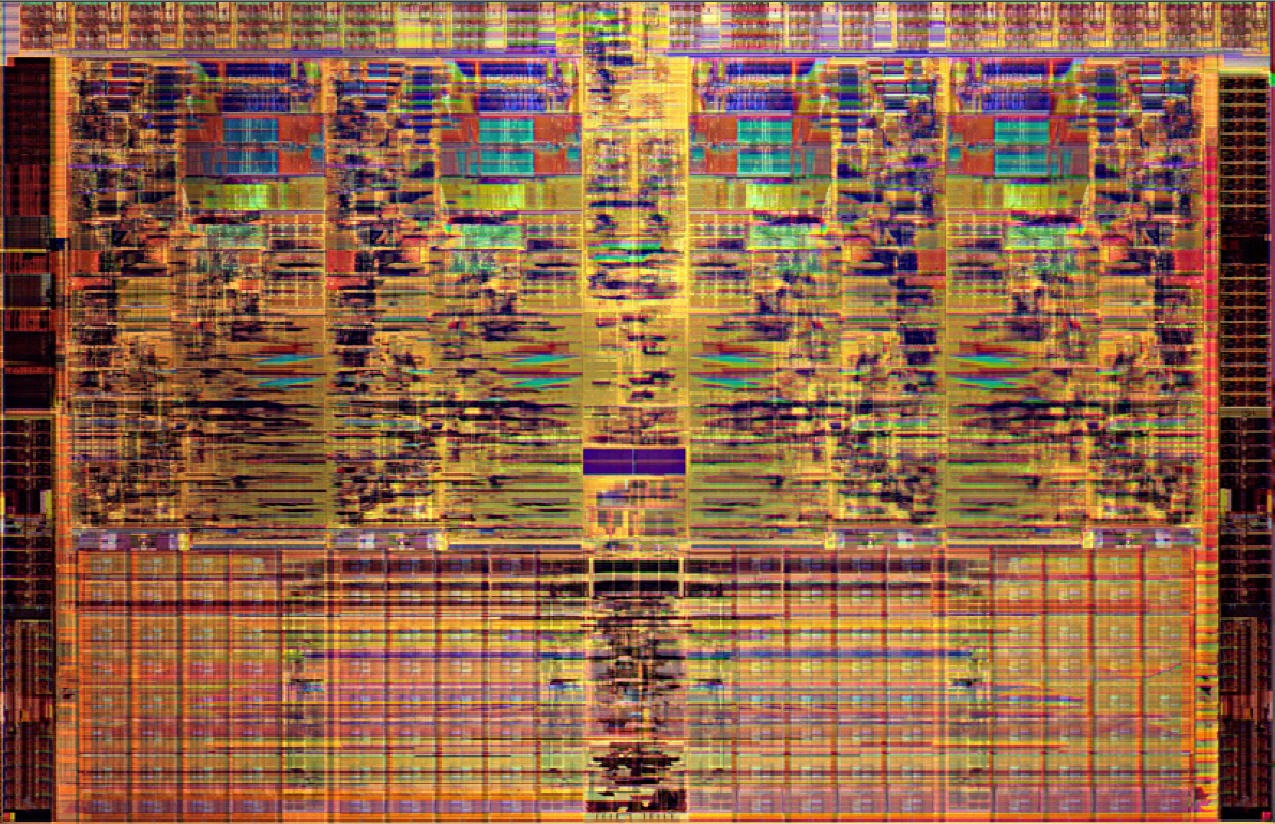

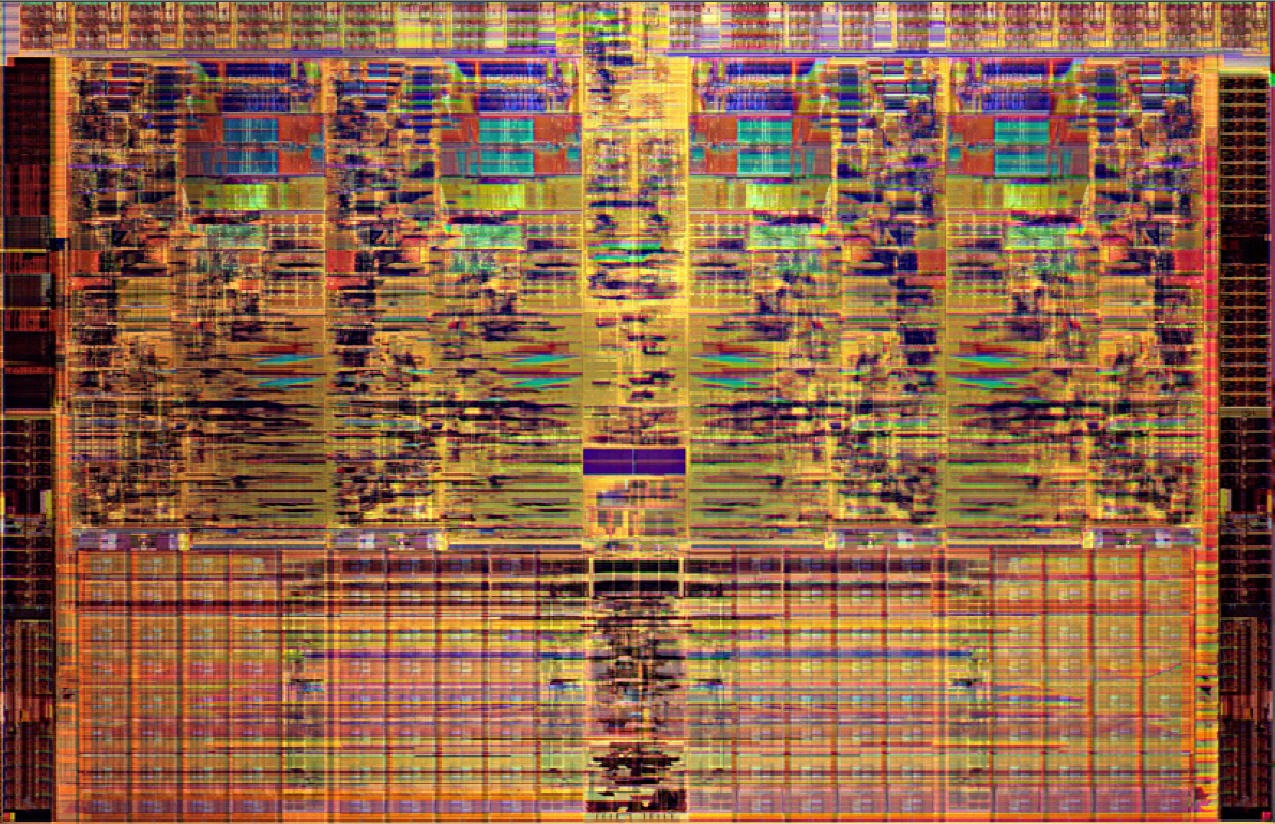

In the first half, we will focus on the trend toward parallelism. We will begin with a primer on multicore and manycore chips: chips that integrate multiple CPUs on the same die. Currently, Intel is shipping 10-core (20-thread) chips, and our own AMD servers are 2-socket, 24-thread systems. Harnessing these new compute engines is an open challenge. We will focus on their architectures and on the different programming models that empower us to tap into this fountain of CPUs. We will study the system and architectural support for shared-memory parallel computing, including support for synchronization, cache coherence, and memory consistency. We will look at programming abstractions such as locks, mutexes, conditional synchronization, and transactional memory. At this stage, we will also be exposed to manycore accelerators, such as gaming processors and GPUs, which promise 100x performance improvements for "data parallel" workloads.
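As a taste of the concurrent C++ programming involved, the following minimal sketch shows a mutex and conditional synchronization working together in a bounded producer/consumer queue built from `std::mutex` and `std::condition_variable`. The class `BoundedQueue` and the helper `producer_consumer_in_order` are hypothetical illustrations, and the sketch assumes a capacity of at least 1 (a capacity of 0 would block forever).

```cpp
#include <condition_variable>
#include <cstddef>
#include <mutex>
#include <queue>
#include <thread>

// Hypothetical bounded FIFO buffer shared by producer and consumer threads.
// The mutex provides mutual exclusion; the two condition variables let a
// thread sleep until the condition it needs (space / an item) holds.
class BoundedQueue {
public:
    explicit BoundedQueue(std::size_t capacity) : capacity_(capacity) {}

    void push(int value) {
        std::unique_lock<std::mutex> lock(mutex_);
        // wait() releases the lock while sleeping and re-checks the
        // predicate after waking, which also handles spurious wake-ups.
        not_full_.wait(lock, [this] { return items_.size() < capacity_; });
        items_.push(value);
        not_empty_.notify_one();  // a consumer may now proceed
    }

    int pop() {
        std::unique_lock<std::mutex> lock(mutex_);
        not_empty_.wait(lock, [this] { return !items_.empty(); });
        int value = items_.front();
        items_.pop();
        not_full_.notify_one();  // a producer may now proceed
        return value;
    }

private:
    std::size_t capacity_;
    std::queue<int> items_;
    std::mutex mutex_;
    std::condition_variable not_full_;
    std::condition_variable not_empty_;
};

// Usage sketch: one producer thread pushes 1..n while the calling thread
// pops n values; returns true if every value arrived in FIFO order.
bool producer_consumer_in_order(int n, std::size_t capacity) {
    BoundedQueue queue(capacity);
    std::thread producer([&queue, n] {
        for (int i = 1; i <= n; ++i) queue.push(i);
    });
    bool in_order = true;
    for (int expected = 1; expected <= n; ++expected) {
        if (queue.pop() != expected) in_order = false;
    }
    producer.join();
    return in_order;
}
```

Waiting with a predicate rather than a bare `wait()` is the design choice that matters here: the condition may have changed between the notification and the moment the woken thread reacquires the lock, so it must be re-checked under the mutex.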

In the second half, we will focus on massively distributed systems and look into the foundations of distributed computing, including distributed consensus, fault tolerance, and reliability. We will examine how distributed systems implement basic parallel programming constructs such as atomicity and mutual exclusion. We will examine parallel computing models, such as MPI and MapReduce, that are customized to these systems. Finally, we will perform case studies of practical distributed systems and devote significant attention to cluster-based server systems in large Internet data centers (e.g., those running Google services).
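The MapReduce model can be sketched on a single machine. The following toy word count, written with C++ threads, is an illustration under loud assumptions rather than Google's MapReduce API: the names `map_document` and `word_count` are hypothetical, the "map" phase runs in threads instead of on cluster nodes, and there is no distributed shuffle or fault tolerance.

```cpp
#include <cstddef>
#include <map>
#include <sstream>
#include <string>
#include <thread>
#include <vector>

using Counts = std::map<std::string, int>;

// Hypothetical map step: emit (word, 1) for each whitespace-separated word
// in one document, pre-combined into a per-worker count table.
void map_document(const std::string& doc, Counts& local) {
    std::istringstream words(doc);
    std::string word;
    while (words >> word) ++local[word];
}

// Toy, single-machine MapReduce-style word count: documents are partitioned
// across worker threads (map phase), then the per-worker tables are merged
// by key (reduce phase).
Counts word_count(const std::vector<std::string>& docs, unsigned num_workers) {
    if (num_workers == 0) num_workers = 1;
    std::vector<Counts> partial(num_workers);  // one table per worker
    std::vector<std::thread> workers;
    for (unsigned w = 0; w < num_workers; ++w) {
        workers.emplace_back([&docs, &partial, w, num_workers] {
            // Worker w handles documents w, w + num_workers, ...
            for (std::size_t i = w; i < docs.size(); i += num_workers)
                map_document(docs[i], partial[w]);
        });
    }
    for (auto& t : workers) t.join();  // barrier between map and reduce

    Counts result;
    for (const Counts& table : partial)
        for (const auto& entry : table) result[entry.first] += entry.second;
    return result;
}
```

For instance, `word_count({"a b a", "b c", "a"}, 2)` yields a = 3, b = 2, c = 1. Because each worker writes only to its own table, the map phase needs no locks; all coordination happens at the join before the reduce step.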


Prerequisites

An undergraduate operating systems course (CMPT 300), a basic computer architecture course, and solid programming skills (C/C++/Java). If you are unsure whether you have the prerequisites, talk to the instructor!

How is it related to CMPT 885 (Summer 2011)?

We will focus explicitly on parallel systems. Expect to learn about cache coherence and memory consistency in detail, as well as the different programming models for current multicores. We will also learn about the theoretical properties and programming of distributed systems.


How is it related to CMPT 431?

We will focus explicitly on parallel systems. Expect to learn about cache coherence and memory consistency in detail, the hardware architectures of various parallel systems (multicores, manycores, GPUs, and Google clusters), and programming models for parallel and distributed systems (e.g., MapReduce, MPI, transactional memory, and more).


When and Where

Mondays (2:30-3:20), Wednesdays, and Fridays (2:30-3:20)

Room 3170 @ Surrey.

Directions: Galleria, 3rd floor
