Growth of Functions

- We will use something called big-O notation (and some siblings described later) to describe how a function grows.

- What we're trying to capture here is how the function grows.

- … without capturing so many details that our analysis would depend on processor speed, etc.

- … without worrying about what happens for small inputs: they should always be fast.

- For functions \(f(x)\) and \(g(x)\), we will say that “\(f(x)\) is \(O(g(x))\)” [pronounced “\(f(x)\) is big-oh of \(g(x)\)”] if there are positive constants \(C\) and \(k\) such that

\[|f(x)| \le C|g(x)|\text{ for all }x>k\,.\]

- The big-O notation will give us a order-of-magnitude kind of way to describe a function's growth (as we will see in the next examples).

- Roughly speaking, the \(k\) lets us only worry about big values (or input sizes when we apply to algorithms), and \(C\) lets us ignore a factor difference (one, two, or ten steps in a loop).

- I might also say “\(f(x)\) is in \(O(g(x))\)”, then thinking of \(O(g(x))\) as the set of all functions with that property.

Example: The function \(f(x)=2x^3+10x\) is \(O(x^3)\).

Proof: To satisfy the definition of big-O, we just have to find values for \(C\) and \(k\) that meet the condition.

Let \(C=12\) and \(k=2\). Then for \(x>k\), \[\begin{align*} |2x^3+10x| &= 2x^3+10x\\ &< 2x^3+10x^3\\ &= |12x^3|.\quad ∎ \end{align*}\]

- Note: there's nothing that says we have to find the best \(C\) and \(k\). Any will do.

- Also notice that the absolute value doesn't usually do much: since we're worried about running times, negative values don't usually come up. We can just demand that \(x\) is big enough that the function is definitely positive and then remove the \(|\cdots|\).

- Now it sounds too easy to put a function in a big-O class. But…

Example: The function \(f(x)=2x^3+10x\) is not in \(O(x^2)\).

Proof: Now we must show that no \(C\) and \(k\) exist to meet the condition from the definition.

For any candidate \(C\) and \(k\), we can take \(x>k\) and \(x>0\) and we would have to satisfy \[\begin{align*} |2x^3+10x| &< C|x^2| \\ 2x^3+10x &< Cx^2 \\ 2x^3 &< Cx^2 \\ x &< C/2 \\ \end{align*}\]

So no such \(C\) and \(k\) can exist to let the inequality hold for large \(x\).∎

Example: The function \(f(x)=2x^3+10x\) is \(O(x^4)\).

Proof idea: For large \(x\), we know that \(x^4>x^3\). We could easily repeat the \(O(x^3)\) proof above, applying that inequality in a final step.

-

Example: The function \(f(x)=5x^2 - 10000x + 7\) is \(O(x^2)\).

Proof: We have to be a little more careful about negative values here because of the “\(- 10000x\)” term, but as long as we take \(k\ge 2000\), we won't have any negative values since the \(5x^2\) term is larger there.

Let \(C=12\) and \(k=2000\). Then for \(x>k\), \[\begin{align*} |5x^2 - 10000x + 7| &= 5x^2 - 10000x + 7\\ &< 5x^2 + 7x^2\\ &= |12x^2|.\quad ∎ \end{align*}\]

- It probably wouldn't take many more proofs to convince you that \(x^n\) is always in \(O(x^n)\) but never in \(O(x^{n-1})\).

- We can actually do better than that…

- The big-O operates kind of like a \(\le\) for growth rates.

Big-O Results

-

Theorem: Any degree-\(n\) polynomial, \(f(x)=a_nx^n + a_{n-1}x^{n-1}+\cdots + a_1x+a_0\) is in \(O(x^n)\).

Proof: As before, we can assume that \(x>1\) and then, \[\begin{align*} |f(x)| &= |a_nx^n + a_{n-1}x^{n-1}+\cdots + a_1x+a_0| \\ &\le |a_n|x^n +|a_{n-1}|x^{n-1}+\cdots + |a_1|x+|a_0| \\ &= x^n(|a_n| +|a_{n-1}|/x+\cdots + |a_1|/x^{n-1}+|a_0|/x^n) \\ &\le x^n(|a_n| +|a_{n-1}|+\cdots + |a_1|+|a_0|)\,. \end{align*}\]

Now, if we let \(C=\sum |a_i|\) and \(k=1\), we have satisfied the definition for \(O(x^n)\).∎

-

Theorem: If we have two functions \(f_1(x)\) and \(f_2(x)\) both \(O(g(x))\), then \(f_1(x)+f_2(x)\) is also \(O(g(x))\).

Proof: From the definition of big-O, we know that there are \(C_1\) and \(k_1\) that make \(|f_1(x)| \le C|h(x)|\) for \(x>k_1\), and similar \(C_2\) and \(k_2\) for \(f_2(x)\).

Let \(C=C_1+C_2\) and \(k=\mathrm{max}(k_1,k_2)\). Then for \(x>k\), \[\begin{align*} |f_1(x)+f_2(x)| &\le |f_1(x)|+|f_2(x)| \\ &\le C_1|g(x)| + C_2|g(x)| \\ &= C|g(x)| \,. \end{align*}\]

Thus, \(f_1(x)+f_2(x)\) is \(O(g(x))\).∎

- The combination of functions under big-O is generally pretty sensible…

-

Theorem: If for large enough \(x\), we have \(f(x)\le g(x)\), then \(f(x)\) is \(O(g(x))\).

- Sometimes the big-O proof is even easier.

-

Theorem: If we have two functions \(f_1(x)\) which is \(O(g_1(x))\) and \(f_2(x)\) which is \(O(g_2(x))\), then \(f(x)+g(x)\) is \(O(\mathrm{max}(|g_1(x)|,|g_2(x)|))\).

- When adding, the bigger one wins.

-

Theorem: If we have three functions \(f,g,h\) where \(f(x)\) is \(O(g(x))\) and \(g(x)\) is \(O(h(x))\), then \(f(x)\) is \(O(h(x))\).

- Approximately: if \(h\) is bigger than \(g\) and \(g\) is bigger than \(f\), then \(h\) is bigger than \(f\).

-

Corollary: Given \(f_1(x)\) which is \(O(g_1(x))\) and \(f_2(x)\) which is \(O(g_2(x))\) and \(g_1(x)\) is \(O(g_2(x))\) then \(f_1(x)+f_2(x)\) is \(O(g_2(x))\).

- That is, if we have two functions we know a big-O bound for, and we add them together, we can ignore the smaller one in the big-O.

-

Theorem: If we have two functions \(f_1(x)\) which is \(O(g_1(x))\) and \(f_2(x)\) which is \(O(g_2(x))\), then \(f(x)g(x)\) is \(O(g_1(x)g_2(x))\).

- Multiplication happens in the obvious way.

-

Theorem: Any constant value is is \(O(1)\).

- Aside: You will often hear a constant running time algorithm described as \(O(1)\).

-

Corollary: Given \(f(x)\) which is \(O(g(x))\) and a constant \(a\), we know that \(af(x)\) is \(O(g(x))\).

- That is, if we have a function multiplied by a constant, we can ignore the constant in the big-O.

-

- All of that means that it's usually pretty easy to guess a good big-O category for a function.

\(f(x)=2^x+x^2\) is in \(O(\mathrm{max}(|2^x|,|x^2|))=O(2^x)\), since \(2^x\) is larger than \(x^2\) for large \(x\).

\(f(x)=\frac{1}{100}x^{12} + 100x^{11} - 87\) is in \(O(x^{12})\).

- Directly from the theorem about polynomials.

- For small \(x\), the \(100x^{11}\) is the largest, but as \(x\) grows, the \(\frac{1}{100}x^{12}\) term takes over.

\(f(x)=14x2^x + x\) is in \(O(x2^x)\).

- What is a good big-O bound for \(17x^4 - 12x^2 + \log_2 x\)?

- We can start with the obvious: \[17x^4 - 12x^2 + \log_2 x \text{ is in } O(17x^4 - 12x^2 + \log_2 x)\,.\]

- From the above, we know we can ignore smaller-order terms: \[17x^4 - 12x^2 + \log_2 x \text{ is in } O(17x^4)\,.\]

- And we can ignore leading constants: \[17x^4 - 12x^2 + \log_2 x \text{ is in } O(x^4)\,.\]

- The “ignore smaller-order terms and leading constants” trick is very useful and comes up a lot.

Big-Ω

- As mentioned earlier, big-O feels like \(\le\) for growth rates.

- … then there must be \(\ge\) and \(=\) versions.

- We will say that a function \(f(x)\) is \(\Omega(g(x))\) (“big-omega of \(g(x)\)”) if there are positive constants \(C\) and \(k\) such that when \(x\gt k\),\[|f(x)| \ge C|g(x)|\,.\]

- This is the same as the big-O definition, but with a \(\ge\) instead of a \(\le\).

-

Example: The function \(3x^2+19x\) is \(\Omega(x^2)\).

Proof: If we let \(C=3\) and \(k=1\) then for \(x>k\), \[\begin{align*} |3x^2+19x| &\ge 3x^2+19x \\ &\ge 3|x^2|\,. \end{align*}\]

From the definition, we have that \(3x^2+19x\) is \(\Omega(x^2)\).∎

- As you can guess, the proofs of big-Ω are going to look just about like the big-O ones.

- We have to be more careful with negative values: in the big-O proofs, we could just say that the absolute value was bigger and ignore it. Now we need smaller values, so can't be so quick.

- But the basic ideas are all the same.

-

Theorem: \(f(x)\) is \(O(g(x))\) iff \(g(x)\) is \(\Omega(f(x))\).

Proof: First assume we have \(f(x)\) in \(O(g(x))\). Then there are positive \(C\) and \(k\) so that when \(x\gt k\), we know \(|f(x)|\le C|g(x)|\). Then for \(x\gt k\), we have \(|g(x)|\ge \frac{1}{C}|f(x)|\) and we can use \(k\) and \(\frac{1}{C}\) as constants for the definition of big-Ω.

Similarly, if we assume that \(g(x)\) is \(\Omega(f(x))\), we have positive \(C\) and \(k\) so that when \(x\gt k\), we have \(|g(x)|\ge C|f(x)|\). As above we then have for \(x\gt k\), \(|f(x)|\le\frac{1}{C}|g(x)|\).∎

Big-Θ

- We will say that a function \(f(x)\) is \(\Theta(g(x))\) (“big-theta of \(g(x)\)”) if \(f(x)\) is both \(O(g(x))\) and \(\Omega(g(x))\).

- For a function that is \(\Theta(g(x))\), we will say that that function “is order \(g(x)\).”

-

Example: The function \(2^x+x^2\) is order \(2^x\).

Proof: To show that \(2^x+x^2\) is \(O(2^x)\), we can take \(C=2\) and \(k=4\). Then for \(x>k\), \[\begin{align*} |2^x+x^2| &= 2^x+x^2 \\ &\le 2\cdot 2^x\,. \end{align*}\]

To show that \(2^x+x^2\) is \(\Omega(2^x)\), we can use \(C=1\) and \(k=1\). For \(x>k\), \[\begin{align*} |2^x+x^2| &= 2^x+x^2 \\ &\ge 2^x\,. \end{align*}\]

Thus, \(2^x+x^2\) is \(\Theta(2^x)\).∎

- The above theorem gives another way to show big-Θ: if we can show that \(f(x)\) is \(O(g(x))\) and \(g(x)\) is \(O(f(x))\), then \(f(x)\) is \(\Theta(g(x))\).

-

Theorem: Any degree-\(n\) polynomial with \(a_n\ne 0\), \(f(x)=a_nx^n + a_{n-1}x^{n-1}+\cdots + a_1x+a_0\) with \(a_n\gt 0\) is in \(\Theta(x^n)\).

- A few results on big-Θ…

-

Theorem: If we have two functions \(f_1(x)\) which is \(\Theta(g_1(x))\) and \(f_2(x)\) which is \(\Theta(g_2(x))\), and \(g_2(x)\) is \(O(g_1(x))\), then \(f_1(x)+f_2(x)\) is \(\Theta(g_1(x)))\).

- That is, when adding two functions together, the bigger one “wins”.

-

Theorem: If we have two functions \(f_1(x)\) which is \(\Theta(g(x))\) and \(f_2(x)\) which is \(O(g(x))\), then \(f(x)+g(x)\) is \(\Theta(g(x)))\).

-

Theorem: for a positive constant \(a\), a function \(af(x)\) is \(\Theta(g(x))\) iff \(f(x)\) is \(\Theta(g(x))\).

- That is, leading constants don't matter.

-

Corollary: Any degree-\(n\) polynomial, \(f(x)=a_nx^n + a_{n-1}x^{n-1}+\cdots + a_1x+a_0\) with \(a_n\gt 0\) is in \(\Theta(x^n)\).

-

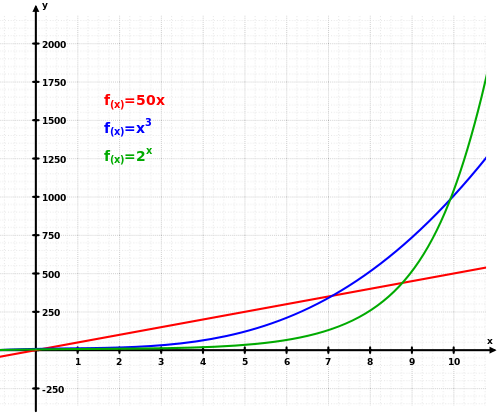

- What functions have a “higher” big-Θ than others is usually fairly obvious from a graph, but “I looked at a graph” isn't very much of a proof.

Source: Wikipedia Exponential.svg

- The big-O notation sets up a hierarchy of function growth rates. Here are some of the important “categories”:

\[n!\\2^n\\n^3\\n^2\\n\log n\\n\\\sqrt{n}=n^{1/2}\\\log n\\1\]

- Each function here is big-O of ones above it, but not below.

- e.g. \(n\log n\) is \(O(n^2)\), but \(n^2\) is not \(O(n\log n)\).

- So in some important way, \(n^2\) grows faster than \(n\log n\).

- Where we are headed: we will be able to look at an algorithm and say that one that takes \(O(n\log n)\) steps is faster than one that takes \(O(n^2)\) steps (for large input).

Growth of Logarithms

- A note about logarithm functions and big-O/Ω/Θ…

- You will often see statements like “the function is \(O(\log n)\)” or “is \(\Theta(n\log n)\)” with no base mentioned.

- Remember this fact about logarithms: \[\log_b x = \frac{\log_a x}{\log_a b} = \frac{1}{\log_a b}\log_a x \,.\]

- That is, changing the base changes the value by a constant factor.

- Since constant factors don't change the big-O/Ω/Θ category, we don't care which base.

- \(f(x)\) is \(\Theta(log_a x)\) iff \(f(x)\) is \(\Theta(log_b x)\).

- So we'll often get lazy and not bother writing the base.